I hope you enjoyed the WWDC keynote and Platforms State of the Union yesterday – I know I did!

Whether we were at WWDC or watching the live stream, the raywenderlich.com team and I loved finding out about the new tech and sharing our reactions. We had some especially fun discussions about some of the odd naming choices! :]

As the iOS team lead at raywenderlich.com, I thought it would be useful to write a quick post sharing some of my initial reactions to all of the new announcements.

Feel free to post any of your own thoughts, or point out anything I may have missed!

Xcode 9

Personally, the thing I get most excited for each WWDC is the new version of Xcode. This year, Xcode 9 was announced, and it represents a huge update with a lot of major changes we’re all going to love.

New Editor

Xcode 9 has a brand new Source Editor, written entirely in Swift. In the new editor, you can use the Fix interface to fix multiple issues at once. Also, when moving the mouse around your code, you can hold the Command key to see visually how the structures in your code are organized.

One announcement that received a strong ovation was that Xcode 9 now increases or decreases the Source Editor font size with Command-+ and Command--, the same keyboard shortcuts many other text editors use.

And as an added bonus, the new source editor also includes an integrated Markdown editor (which will really help create some nice looking GitHub READMEs).

Refactoring

I’ve been excitedly anticipating Xcode’s ability to refactor Swift code for as long as Swift has been a thing. IDE-supported refactoring has long been a standard for top-tier development environments, and it’s so good to see this is now available in Xcode 9 for Swift code (in addition to Objective-C, C++ and C). One of the most basic refactorings is renaming a class.

Notice the class itself is renamed, and all references to that class in the project are renamed as well, including references in the Storyboard and the filename itself! Sure, renaming something isn’t that tough, but you should lean on your IDE wherever possible to make your life easier.

There are a bunch of other refactoring options available as well, and what’s even cooler is that Apple will be open sourcing the refactoring engine so others can collaboratively extend and enhance it.

Swift 4

Xcode 9 comes with Swift 4 support by default. In fact, it has a single Swift compiler that can compile both Swift 3.2 and Swift 4, and can even support different versions of Swift across different targets in the same project!

We’ll be posting a detailed roundup about What’s New in Swift 4 on raywenderlich.com soon.

Xcode ❤️s GitHub

Xcode 9 now connects easily with your GitHub account (GitHub.com, or GitHub Enterprise) making it very easy to see a list of your existing projects, clone projects, manage branches, use tags, and work with remotes.

Wireless Debugging

Xcode 9 no longer requires you to connect your debugging device to your computer via USB. Now you can debug your apps on real devices over your local network. This also works with Instruments, Accessibility Inspector, QuickTime Player, and Console.

Simulator Enhancements

There are some really neat changes to the iOS Simulator. Now you can run multiple simulators at once!

Yes, this is a screenshot I just took, and these are all different simulators running at the same time! In addition to each simulator being resizable, simulators also include a new bezel where you can simulate different hardware interactions that weren’t possible in the past.

Testing

There were two improvements to Xcode’s automated testing support that caught my eye:

- Xcode UI Tests Can Access Other Apps – In the past, it wasn’t possible to write Xcode UI tests for app functionality that lives inside other apps, like Settings, or inside extensions. Now your Xcode UI tests can access other apps for deeper verification of behavior. For example, if your app uses a Share Extension to receive photos, you previously couldn’t write an Xcode UI test that opens the Share Sheet in the Photos app and shares a picture into your app. Now you can.

- Tests Can Run On Simulators In Parallel – Building on the ability to run more than one iOS Simulator at once, automated tests can now run on several simulators in parallel. For example, you can run your test suite on an iOS 10 Simulator while the same suite runs on an iOS 11 Simulator.
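Here is a minimal sketch of a UI test that reaches outside the app under test, assuming Xcode 9’s `XCUIApplication(bundleIdentifier:)` initializer and the `wait(for:timeout:)` and `activate()` methods added alongside it. The test class and method names are hypothetical, and `com.apple.Preferences` is the bundle identifier commonly used for the Settings app.

```swift
import XCTest

// Hypothetical UI test class; it needs to live in a UI test target.
final class SettingsUITests: XCTestCase {
    // Bundle identifier commonly used for the iOS Settings app.
    static let settingsBundleID = "com.apple.Preferences"

    func testSettingsAppCanBeDrivenFromUITest() {
        // Xcode 9: address any installed app by its bundle identifier.
        let settings = XCUIApplication(bundleIdentifier: SettingsUITests.settingsBundleID)
        settings.launch()

        // Wait until Settings is frontmost before interacting with it.
        XCTAssertTrue(settings.wait(for: .runningForeground, timeout: 5))

        // Bring the app under test back to the foreground.
        XCUIApplication().activate()
    }
}
```

The same pattern would apply to the Photos Share Sheet scenario: launch or activate the other app, drive its UI, then switch back and assert on your own app’s state.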

Speed

Several changes were made to Xcode that will speed up your app development process:

- The Xcode team put some special effort into the Source Editor to ensure high performance editing for files of all sizes.

- Xcode comes with a new build system with much improved speed. It’s in beta, so it’s off by default; be sure to enable it in File > Workspace Settings.

- Additionally, if you use Quick Open or the Search Navigator, you’ll see near-instantaneous results for whatever you are searching for.

iOS 11

iOS 11 was announced at the keynote, and beta 1 is already available for download. Several features piqued my interest.

Drag and Drop

Perhaps the most exciting iOS enhancement for me was the introduction of drag and drop to the iPad. You can now drag and drop things from one place to another, whether it’s within a single app, or even across separate apps!

While dragging, users can also tap additional items with another finger to add them to the drag, then drop them all in the destination app at once. UITableView and UICollectionView have built-in support that makes it pretty easy to add drag and drop to lists in your app.

Take a look at the First Steps section of Apple’s drag and drop documentation to learn how to add drag and drop to your app.
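To make the table view support concrete, here is a minimal sketch of the drag side only, assuming the iOS 11 `UITableViewDragDelegate` protocol. The view controller and its `fruits` data are hypothetical, and accepting drops would additionally require a `UITableViewDropDelegate`.

```swift
import UIKit

// Hypothetical list screen whose rows can be dragged (iOS 11 and later).
final class FruitListViewController: UITableViewController, UITableViewDragDelegate {
    // Hypothetical sample data.
    var fruits = ["Apple", "Banana", "Cherry"]

    override func viewDidLoad() {
        super.viewDidLoad()
        tableView.register(UITableViewCell.self, forCellReuseIdentifier: "Cell")
        tableView.dragDelegate = self
        // Drag interaction defaults to on for iPad and off for iPhone.
        tableView.dragInteractionEnabled = true
    }

    override func tableView(_ tableView: UITableView, numberOfRowsInSection section: Int) -> Int {
        return fruits.count
    }

    override func tableView(_ tableView: UITableView, cellForRowAt indexPath: IndexPath) -> UITableViewCell {
        let cell = tableView.dequeueReusableCell(withIdentifier: "Cell", for: indexPath)
        cell.textLabel?.text = fruits[indexPath.row]
        return cell
    }

    // Wrap one row's value in a drag item.
    private func dragItems(for indexPath: IndexPath) -> [UIDragItem] {
        let fruit = fruits[indexPath.row]
        // NSString can write itself to an item provider, so other apps receive plain text.
        let item = UIDragItem(itemProvider: NSItemProvider(object: fruit as NSString))
        item.localObject = fruit  // cheap access for drops within this app
        return [item]
    }

    // Called when a drag starts on a row.
    func tableView(_ tableView: UITableView, itemsForBeginning session: UIDragSession,
                   at indexPath: IndexPath) -> [UIDragItem] {
        return dragItems(for: indexPath)
    }

    // Called when the user taps another row to add it to an in-progress drag.
    func tableView(_ tableView: UITableView, itemsForAddingTo session: UIDragSession,
                   at indexPath: IndexPath, point: CGPoint) -> [UIDragItem] {
        return dragItems(for: indexPath)
    }
}
```

Because the items carry plain text through `NSItemProvider`, any app that accepts text drops should be able to receive them, not just this one.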

ARKit

WWDC 2017 introduced ARKit, a framework that provides APIs for integrating augmented reality into your apps. On iOS, augmented reality mashes up a live view from the camera with objects you programmatically place in the view. This gives your app’s users the sense that your content is part of the real world.

Augmented reality programming without some sort of help can be very difficult. As a developer, you need to figure out where your “virtual” objects should be placed in the “reality” view, how the objects should behave, and how they should appear. This is where ARKit comes to the rescue and simplifies things for you, the developer.

ARKit can detect “planes” in the live camera view, essentially flat surfaces where you can programmatically place objects so it appears they’re actually sitting in the real world, while also using input from the device’s sensors to ensure these items remain in the correct place. Apple’s article on Understanding Augmented Reality is a good place to start for learning more.
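As a rough sketch of plane detection, assuming the iOS 11 ARKit and SceneKit APIs as they shipped (some class names changed during the betas), the view controller below asks a world-tracking session to find horizontal planes and places a 10 cm box on each one it finds. The class and helper names are hypothetical.

```swift
import UIKit
import ARKit
import SceneKit

// Build a session configuration that tracks the device and looks for flat surfaces.
func makePlaneDetectingConfiguration() -> ARWorldTrackingConfiguration {
    let configuration = ARWorldTrackingConfiguration()
    configuration.planeDetection = .horizontal
    return configuration
}

// Hypothetical screen that shows the camera feed and reacts to detected planes.
final class PlaneDetectionViewController: UIViewController, ARSCNViewDelegate {
    private let sceneView = ARSCNView()

    override func viewDidLoad() {
        super.viewDidLoad()
        sceneView.frame = view.bounds
        sceneView.autoresizingMask = [.flexibleWidth, .flexibleHeight]
        sceneView.delegate = self
        view.addSubview(sceneView)
    }

    override func viewWillAppear(_ animated: Bool) {
        super.viewWillAppear(animated)
        // World tracking needs an A9 or later chip.
        guard ARWorldTrackingConfiguration.isSupported else { return }
        sceneView.session.run(makePlaneDetectingConfiguration())
    }

    override func viewWillDisappear(_ animated: Bool) {
        super.viewWillDisappear(animated)
        sceneView.session.pause()
    }

    // ARKit adds an anchor (with a matching SceneKit node) for each detected plane.
    func renderer(_ renderer: SCNSceneRenderer, didAdd node: SCNNode, for anchor: ARAnchor) {
        guard let plane = anchor as? ARPlaneAnchor else { return }
        // Units are meters; lift the box by half its height so it rests on the plane.
        let box = SCNNode(geometry: SCNBox(width: 0.1, height: 0.1, length: 0.1, chamferRadius: 0))
        box.position = SCNVector3(plane.center.x, 0.05, plane.center.z)
        node.addChildNode(box)
    }
}
```

Because the box is a child of the anchor’s node, ARKit keeps it aligned with the surface as tracking refines the plane’s position.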

Machine Learning

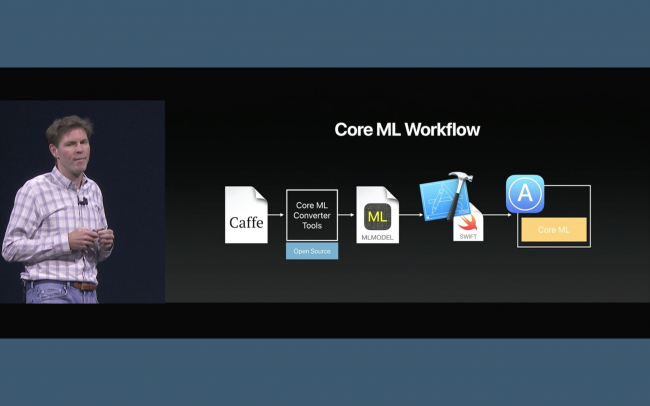

Machine learning is an incredibly complicated topic. Luckily, the new Core ML framework aims to make this advanced programming technique available to a wider audience. Core ML provides an API that lets developers supply a trained model and some input data, and then receive predictions about that data based on the model.

Apple’s own Core ML documentation offers a good example use case: predicting real estate prices. There’s a vast amount of historical data on real estate sales, which can be used to train a model. Once that trained model is in the Core ML format, Core ML can use it to predict the price of a house based on data about it, like the number of bedrooms (assuming the training data contained both the final sale price and the number of bedrooms for each property). Apple also supports models trained with certain third-party packages, once they’re converted to the Core ML format.
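Here is a minimal sketch of the real estate example using Core ML’s generic `MLModel` API. The feature names `bedrooms` and `price` and the helper functions are hypothetical; a real model declares its own input and output names, and Xcode normally generates a typed wrapper class when you add a `.mlmodel` file to a project.

```swift
import CoreML
import Foundation

// Hypothetical feature names; a real model defines its own in its model description.
let bedroomsFeature = "bedrooms"
let priceFeature = "price"

// Wrap the raw input in a feature provider that Core ML can consume.
func makeHouseFeatures(bedrooms: Int) throws -> MLDictionaryFeatureProvider {
    return try MLDictionaryFeatureProvider(dictionary: [
        bedroomsFeature: MLFeatureValue(double: Double(bedrooms))
    ])
}

// Load a model from an .mlmodel file. Compiling on device is only needed for
// models obtained at runtime; Xcode compiles models that ship in the app bundle.
func loadModel(at modelURL: URL) throws -> MLModel {
    let compiledURL = try MLModel.compileModel(at: modelURL)
    return try MLModel(contentsOf: compiledURL)
}

// Ask the trained model for a price prediction for one house.
func predictPrice(with model: MLModel, bedrooms: Int) throws -> Double? {
    let input = try makeHouseFeatures(bedrooms: bedrooms)
    let output = try model.prediction(from: input)
    return output.featureValue(for: priceFeature)?.doubleValue
}
```

Usage would look like `let price = try predictPrice(with: loadModel(at: url), bedrooms: 3)`, where `url` points at a regression model whose input and output names match the ones assumed above.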

Vision

The iOS 11 SDK introduces the Vision framework, which provides a way for you to do high-performance image analysis inside your apps.

While face detection was already available in the Core Image framework, the Vision framework goes further: it can detect not only faces in images, but also barcodes, text, the horizon, rectangular objects, and previously identified arbitrary objects. The Vision framework can also integrate with a Core ML model, which allows you to create entirely new detection capabilities.

For example, you could integrate a trained Core ML model to identify dogs drinking water in pictures (you would first need to obtain a model trained to recognize dogs drinking water). Take a look at the Vision framework documentation for more information.
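Here is a minimal sketch of both modes, assuming the iOS 11 Vision APIs: a built-in face detection request, and a `VNCoreMLRequest` that runs a Core ML image classifier. The function names are hypothetical, and the “dog drinking water” classifier is something you would have to supply yourself.

```swift
import CoreGraphics
import CoreML
import Vision

// Built-in request: find face bounding boxes in an image.
func detectFaces(in image: CGImage) throws -> [VNFaceObservation] {
    let request = VNDetectFaceRectanglesRequest()
    let handler = VNImageRequestHandler(cgImage: image, options: [:])
    try handler.perform([request])  // runs synchronously
    return (request.results ?? []).compactMap { $0 as? VNFaceObservation }
}

// Core ML integration: run any image classifier model (for example, a
// hypothetical "dog drinking water" model) through Vision.
func classify(_ image: CGImage, with model: MLModel) throws -> [VNClassificationObservation] {
    let visionModel = try VNCoreMLModel(for: model)
    let request = VNCoreMLRequest(model: visionModel)
    // Vision resizes the image to the model's expected input; choose how it crops.
    request.imageCropAndScaleOption = .centerCrop
    let handler = VNImageRequestHandler(cgImage: image, options: [:])
    try handler.perform([request])
    return (request.results ?? []).compactMap { $0 as? VNClassificationObservation }
}
```

Each `VNClassificationObservation` carries an `identifier` label and a `confidence`, so checking for a dog drinking water becomes a matter of looking for that label above some threshold you choose.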

HEVC and HEIF

Support for two new media formats was introduced with iOS 11: High Efficiency Video Coding (HEVC) and High Efficiency Image Format (HEIF). These are contemporary formats with improved compression that also recognize that today’s image and video assets are more complicated than they were in the past. Technologies like Live Photos and burst photos require more information to be stored for a given image.

For example, a Live Photo may designate a “key frame” to be the thumbnail to represent the sequence of images. These new formats provide a more convenient, and smaller, way to store and represent this data. The following frameworks have been updated to support these new formats: VideoToolbox, Photos, and Core Image.

MusicKit

I’m a huge fan of music, and I’m sad to say that I traded in my Apple Music subscription for a Spotify subscription about two months ago. The changes coming with the new MusicKit framework could single-handedly bring me back as a paying Apple Music customer. One of my biggest frustrations as an Apple Music customer was the lack of access to the streamable music from within third-party applications.

There isn’t much documentation on MusicKit yet, but Apple said apps will now have programmatic access to everything in Apple Music: not just songs the user owns, but the entire streaming catalog.

AirPlay 2

And related to music, AirPlay 2 is also new with iOS 11. Its most notable feature is the ability to stream audio to multiple devices at once. Think Sonos, but with your AirPlay 2 supported devices!

In order for your music or podcast app to take advantage of this, you’ll need to call setCategory(_:mode:routeSharingPolicy:options:) on AVAudioSession with the AVAudioSessionRouteSharingPolicyLongForm parameter value.
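Here is a minimal sketch of that call, written with the Swift spellings found in current SDKs rather than the iOS 11 ones: the argument label is `policy:` and the long-form value is `.longFormAudio`, which later SDKs use in place of the `AVAudioSessionRouteSharingPolicyLongForm` value quoted above. The function name is hypothetical.

```swift
import AVFoundation

// Hypothetical helper: mark this app's audio as long-form content (music,
// podcasts) so the system can route it to AirPlay 2 speakers.
func configureSessionForAirPlay2() throws {
    let session = AVAudioSession.sharedInstance()
    // Long-form route sharing requires the playback category.
    try session.setCategory(.playback, mode: .default, policy: .longFormAudio, options: [])
    // Activate the session before starting playback.
    try session.setActive(true)
}
```

Calling this once before playback begins, for example when the player screen appears, should be enough; the policy stays in effect for the shared session.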

iOS Rapid Fire

There were a bunch of other miscellaneous updates that caught my eye as well:

- App Store Redesign – Coming with iOS 11 is a redesigned App Store app. It looks a lot like Apple Music, and has separate tabs for Apps and Games.

- Promote in-app purchases on the App Store – Got a new in-app purchase that you’d like users to know about? You can now promote in-app purchases on the App Store, and Apple can also feature them there!

- Live Photo adjustments – You can now edit Live Photos to do things like loop the Live Photo, or blur the moving parts.

- New SiriKit Domains and Intents – Enhancements to SiriKit now make it possible to add notes, interact with to-do lists and reminders, cancel rides, and transfer money.

- Annotate Screenshots on iPad – When taking a screenshot on your iPad, you’ll be able to quickly access the screenshot via a thumbnail in the corner of the screen, and then annotate it with markup.

- Phased App Releases – Now you can slowly release your app over a period of time. If you’re worried about a new app update putting a heavy load on your server-side backend, this can be a way to mitigate that risk.

Hardware

Another question we love to ask ourselves each WWDC is “what new hardware will we buy?” :]

HomePod

Apple also announced HomePod, its response to the Amazon Echo and Google Home. Like those devices, it’s an Internet-connected speaker with an always-listening virtual assistant.

What was most interesting to me was that Siri was totally underplayed; instead, the speaker’s musical and audio capabilities were highlighted. It was almost as if HomePod is marketed primarily as a speaker for listening to music, and only secondarily as a smart device, which is the opposite of the Amazon Echo and Google Home.

HomePod can also serve as your HomeKit hub. Notably, HomePod will not send any information to Apple until you say “Hey Siri”.

Refreshed Macs

iMacs and MacBook Pros were updated with contemporary hardware and new prices.

iMac Pro

Apple announced a brand new iMac Pro that will be available in December 2017.

New iPad Pro

A new 10.5″ iPad Pro was announced, effectively replacing the 9.7″ iPad Pro while also providing newer capabilities.

What Are People Excited About?

Ray ran a quick Twitter poll to see what people were most excited about, and it looks like quite a few people are planning on picking up a HomePod.

Where To Go From Here?

That wraps up my list of highlights from WWDC 2017, day one.

Whether you’re at WWDC, or catching the couch tour and watching videos at home, I’d love to hear from you. What were your impressions? Did I miss something important? Please let me know in the comments!

In the meantime, we’ll be working hard on making some new written tutorials, video tutorials, and books in the coming weeks. Stay tuned! :]

The post WWDC 2017 Initial Impressions appeared first on Ray Wenderlich.