You’re likely already a master of Swift, iOS 11, Kotlin, and Android Oreo. Perhaps you, like me, find yourself visiting raywenderlich.com to read the latest tutorial or continue with an awesome course you’ve been eager to try out. But do you give the same amount of attention and care to your mind, health, and fitness as you do to your technical skills? Most of us don’t.

In this article, you’ll see why taking care of your health matters so much. I’ll also share a personal story to drive home the fact that staying fit, caring for your posture, eating well, and exercising are just as important to your career as learning the latest API or programming language.

Health and Fitness 101: Listen To Your Body And Mind

Do you ever wake up with neck or back pain? Are your hands, fingers, or joints achy at times? Maybe your leg has a pinched nerve or it goes numb when sitting for too long. It could also be that you lack energy and motivation, or you’re feeling a bit blue and have no real reason for it.

These signals are your body and mind gently trying to get your attention, telling you that a tiny adjustment (or a few) to your lifestyle may be due.

Not all aches, pains, or changes in your body mean you must go to a doctor. We all have nights when our pillow gets funky and we wake up with neck pain, and we all have the occasional bloated day.

It’s normal to have off days and occasional bouts where you lack energy and motivation. Sometimes it’s just a tinge of burnout or exhaustion that a week or two off might fix. Or maybe you’re beating back some kind of microbial attack.

What’s not normal is chronic, recurring problems that can’t be explained by a recent seasonal, dietary, emotional, or lifestyle change.

“But Felipe, these aches and pains are obviously not normal for you at just 30 years of age. Just wait until you’re my age, that’s when the real fun begins!”

You are totally correct, dear reader, which brings me to the topic of time and age: both huge factors which we often take for granted.

Our teens and twenties feel limitless and invincible. Being up all night working on a project or out with friends and sleeping in late is the norm, and juggling studies, work, and hobbies barely makes it onto our personal tiredness radar.

As we age, however, our bodies change. We don’t sleep the same way, tolerate the same foods, or have the same amount of energy as we used to. These changes are often slow and hard to notice, but they’re guaranteed to happen. It sounds cliché, but enjoy every day to the fullest. Tomorrow you could have a cold, and only then will you be yearning for better days!

My Story

Once upon a time, my little aches, pains, or anomalies affected me more than they had in the past. I was incredibly tired all the time, and no amount of rest or vacation time had an effect on my body. I figured this was all due to taking on too many projects, while prepping for my wedding, while moving to a new home, while trying to get ready for Christmas and New Year’s!

Christmas came and went, but there was no change. January came and went as well — and again, no change. Once again, I figured things would improve later in February, after my wedding was over.

Things were just getting started.

The Epstein-Barr Virus

Fast forward a month or two, and I was diagnosed with Chronic Fatigue Syndrome caused by the Epstein-Barr virus. The TL;DR is that Epstein-Barr is a virus for which there is no specific medication. I was told it could take anywhere from six months to three years for the virus to go away. All I could do was eat more fruits and vegetables, perhaps get into juicing, exercise (which was ironic, considering I barely had enough energy to get through half a day), and try therapy to cope with the discomfort.

How Did I Cope?

I followed my doctor’s instructions. I kept going to my therapy sessions, started juicing at home, improved my diet, received acupuncture, walked more, and tried to be as patient as possible.

The days were long, and the anxiety was at times unbearable. After six or so months, I started having a few days in a row where I felt noticeably better. I wasn’t 100% recovered, but it was an improvement. The key takeaway here is discipline. Even though taking care of yourself may not be the most enjoyable task, staying disciplined can help your health and prevent issues in the future.

What I Learned and How it Motivated Me

It’s been over a year since my doctor reviewed my bloodwork and told me I was cured. Only now, two years after my diagnosis, can I say I’m fully cured, having been free of symptoms for a few months.

In the next few sections, I want to share with you what I’ve learned — the hard way — about taking care of my health.

Posture and Ergonomics

Maintaining a proper posture and ensuring your chair, desk, or work environment is as ergonomic as possible is the first step to taking care of your body.

Why You Should Care And Why It Matters

There are both short- and long-term complications that can develop from not paying attention to posture and ergonomics.

For instance, conditions that come and go, such as tendinitis or repetitive strain injury, can become chronic problems that affect you for the rest of your life.

Even without going to such extremes, ignoring your posture can set you up for a silly injury that keeps you from doing your job for days, or even weeks.

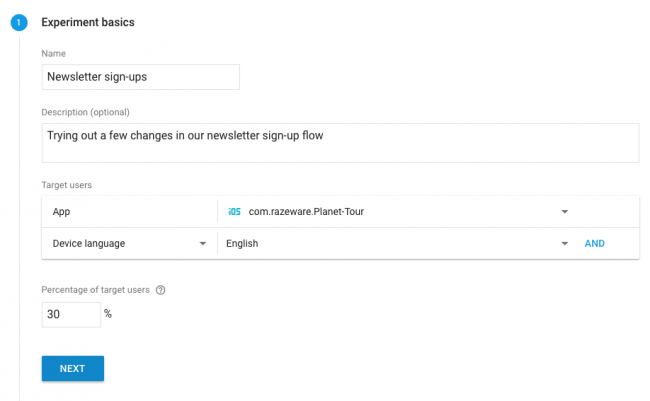

Tips on Good Posture and Ergonomics

Bad posture can lead to aches and pains that will eventually sap your energy and cost you productive hours at work and home.

- Put your monitor/display at eye level so you don’t have to constantly tilt your head up or down to see it properly.

- Maintain a proper distance between your eyes and the display; otherwise, you’ll find yourself leaning forward, leaning backward, or even slouching, which can cause neck and back problems.

![]()

As shown in the image, your arms and legs should be bent at roughly 90-degree angles. You should also have some wrist support to help avoid repetitive strain injuries.

- Ensure you have good lower-back support, and if you like, you could try a headrest as well.

For further reading: here’s a great article on five habits that can create bad posture.

Exercises and Remedies

Exercise is one of the best ways to heal and prevent posture-related problems in sedentary jobs, and programming jobs tend to be sedentary.

As you code all day long, you put a lot of stress on your back, neck, and all the muscles that are involved in maintaining your posture. Be aware of the signs of circulation problems, and learn how to sit to increase your circulation.

You don’t need to hit the gym twice daily or go all out to feel the positive effects. A light walk every day, or even just several times a week, along with some simple crunches and push-ups, should help keep you in relatively decent shape!

Here are some tips that can help you overcome the boredom of exercise:

- Prioritize exercises and activities you actually like. If you prefer swimming to circuit training, or find walking more relaxing than running, then by all means do more of that.

- Buddy up: exercise, run, or go to the gym with a friend. Motivating each other and having someone to chat with make exercise much less tedious.

- Mix technology with exercise. Your phone or smart watch likely supports fitness tracking. The Apple Watch even has achievements that can be earned on specific holidays. With support for a plethora of indoor and outdoor activities, social features, water resistance, and even wireless music, smart watches are a great way to stay motivated when exercising. Alternatively, you could take your cell phone or handheld gaming device on a stationary bike ride, or catch up on Netflix while on the elliptical or treadmill.

- Why not try exercise games like Just Dance? You can dance alone or with friends, burn calories, and have some fun! Pull the curtains tight if you’re concerned about neighbors catching a glimpse of you getting funky.

While I can’t deny that exercise can be tedious, I encourage you to try some of the above ideas — you’ll likely be more motivated and willing to stay active.

Home Remedies

When pain strikes and it’s too late for prevention, there are still some things you can do:

- Try alternating hot and cold therapy, which you can do yourself with a warm cloth or compress and an ice pack.

- Massages are another fantastic way to alleviate tension and stress.

- Stretching exercises can do wonders in the long term. I’ve often had finger, wrist, or arm pain due to typing or playing video games, and stretching exercises have helped me recover faster and kept me feeling better, longer. These same stretching exercises are something you can do every hour or two throughout your work day to help prevent pain from rearing its head.

- Finally, you can always turn to ibuprofen or a similar over-the-counter pain reliever.
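Stretching every hour or two is easier to stick to if something reminds you. This is a minimal sketch, assuming you want a list of break times you can copy into a calendar or reminders app. The function name `stretch_breaks`, the 09:00 to 17:00 workday, and the 90-minute interval are hypothetical choices for illustration, not prescriptions.

```python
from datetime import datetime, timedelta
from typing import List


def stretch_breaks(start: str, end: str, every_minutes: int = 90) -> List[str]:
    """Return "HH:MM" stretch-break times after `start` and strictly before `end`.

    Hypothetical helper: the interval is whatever you choose, e.g. 60-120 minutes
    to match "every hour or two".
    """
    if every_minutes <= 0:
        raise ValueError("every_minutes must be positive")
    fmt = "%H:%M"
    current = datetime.strptime(start, fmt)
    finish = datetime.strptime(end, fmt)
    step = timedelta(minutes=every_minutes)

    times = []
    current += step  # first break comes one interval after you sit down
    while current < finish:
        times.append(current.strftime(fmt))
        current += step
    return times


if __name__ == "__main__":
    # Hypothetical workday: 09:00 to 17:00, stretching every 90 minutes.
    for t in stretch_breaks("09:00", "17:00", every_minutes=90):
        print(f"{t}  stand up and stretch")
```

With those inputs, the breaks land at 10:30, 12:00, 13:30, 15:00, and 16:30. Changing `every_minutes` to 120 gives 11:00, 13:00, and 15:00.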

One of my favorite resources on YouTube is Dr. Levi.

I’ve found the above video super useful for preventing pain, or managing it when it strikes. Dr. Levi has several other videos covering exercises you can do to stay healthy, as well as prevention strategies, tips, and more.

Diet And Exercise

Have you ever felt super thirsty at some point in the day? Perhaps your lips are chapped. Or maybe you’ve been a bit constipated lately. These can be signs that you’re dehydrated. Nutritionists usually recommend drinking half of your body weight (in pounds) in fluid ounces each day.

To give an example, I weigh around 145 lbs. So I take my weight in pounds (145) and divide it by two, which in my case comes out to 72.5 fluid ounces. To convert that to quarts, simply divide by 32. So in my case, the recommended fluid intake is about 2.27 quarts.

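The formula would be:

Daily fluid intake (fl oz) = body weight (lbs) ÷ 2
Daily fluid intake (quarts) = daily fluid intake (fl oz) ÷ 32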
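If you’d rather not do the division by hand, here’s a minimal Python sketch of the same rule of thumb. Divide your weight in pounds by two to get fluid ounces, then divide by 32 (US fluid ounces per US quart) to get quarts. The function names are hypothetical and chosen only for illustration.

```python
FLUID_OUNCES_PER_QUART = 32  # US fluid ounces in one US quart


def daily_fluid_ounces(weight_lb: float) -> float:
    """Rule of thumb: half your body weight (in pounds), expressed in fluid ounces."""
    return weight_lb / 2


def ounces_to_quarts(fl_oz: float) -> float:
    """Convert US fluid ounces to US quarts."""
    return fl_oz / FLUID_OUNCES_PER_QUART


if __name__ == "__main__":
    weight = 145  # example weight in pounds, from the text
    oz = daily_fluid_ounces(weight)
    quarts = ounces_to_quarts(oz)
    print(f"{weight} lb -> {oz:.1f} fl oz -> {quarts:.2f} qt")  # 145 lb -> 72.5 fl oz -> 2.27 qt
```

Swap in your own weight to get a starting point. Treat the result as a rough target rather than a precise requirement.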

This isn’t a strict rule, so do what works best for you and what you feel comfortable drinking. Good signs that you’re drinking enough fluid are not feeling crazy thirsty and having urine that is closer to clear than to dark yellow.

One thing that helps me estimate how much water I’ve had in a day is using a water bottle. Since I know how much one bottle holds, I can tell how much I’ve had after finishing two bottles, and so on.
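The bottle trick boils down to two small calculations: how many bottles cover your daily target, and how much you’ve had after a given number of bottles. Below is a minimal Python sketch, assuming a hypothetical 20 fl oz bottle and the 72.5 fl oz target from the example above. The function names are illustrative only.

```python
import math


def bottles_needed(target_fl_oz: float, bottle_fl_oz: float) -> int:
    """Whole bottles needed to reach the daily target, rounded up."""
    if bottle_fl_oz <= 0:
        raise ValueError("bottle size must be positive")
    return math.ceil(target_fl_oz / bottle_fl_oz)


def fluid_so_far(bottles_finished: float, bottle_fl_oz: float) -> float:
    """Fluid ounces consumed after finishing a number of bottles."""
    return bottles_finished * bottle_fl_oz


if __name__ == "__main__":
    target = 72.5  # daily target in fl oz, from the 145 lb example
    bottle = 20    # hypothetical bottle size in fl oz
    print(bottles_needed(target, bottle))  # 4
    print(fluid_so_far(2, bottle))         # 40
```

Rounding up means you may drink slightly more than the target. Given that the formula is only a rule of thumb, that’s usually fine.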

The Importance Of A Good Diet

Living off Doritos and ramen? How is your body supposed to fuel your magnificent brain through a day of debugging on those empty calories? Weight gain, lack of energy, disturbed sleep, or not feeling like your usual self can be signs that you need to change your diet.

Think about your computer for a moment. You don’t buy the cheapest, jankiest RAM out there, or some cheap, used hard drive, and shove it into your brand-new laptop. So why would you fuel your body with processed foods loaded with additives, fat, and sugar?

There are a few key things to keep in mind when it comes to food:

- Choose natural, unprocessed, fresh foods whenever possible. If this sounds like more work than heating up a microwaveable pizza, then try planning your meals ahead of time. It’s helpful to keep pre-cooked foods in your fridge, or increase the portions you cook so that by prepping lunch, you have enough leftovers for dinner.

- Keep your portions in check. It takes your brain about 20 minutes from your first bite until you get the “full” signal. Chew slowly, stay relaxed while eating, and try to stop before you reach the point of OMG TOO FULL. I like to stay a tiny bit hungry as this is an indicator that I’ve eaten just enough for my body to digest.

- Snack between meals, ideally 2 to 2½ hours after each meal. You won’t be as hungry when it’s time for a big meal, which reduces your chances of eating oversized portions, and the snack will help give you energy throughout the day. Snacks may also help prevent gastritis by keeping stomach acid from irritating an empty stomach, and they can help with weight loss by taming hunger.

- Remember that snacks are just that — snacks — not a meal! Eat just enough to give your body a little fuel until the next big meal. But DO NOT snack mindlessly!

- Fruits such as apples and bananas, along with veggies and nuts, make great in-between snacks. They’re easy to eat, need little or no preparation, and provide nutrients, and most fruits and veggies are also low in calories.

- Color and variety are key. Brightly colored fruits and vegetables are packed with vitamins. Eat many different foods to keep your diet varied. Unless your doctor has specifically prohibited certain foods, feel free to occasionally indulge in heavy foods such as sweets, baked goods, bread, or fried food. Everything in moderation.

- Start paying more attention to the nutritional details of the food you buy the next time you’re out shopping. You’ll be surprised at how much fat and sugar they can pack into something as simple as a sausage, ham, jam, or slice of bread!

Don’t Fear Food

Eat, and enjoy what you eat. One of the biggest mistakes I made when I fell ill was cutting out every food that was outside the bounds of my diet. I stopped eating a plethora of foods I’d always enjoyed without worry because I now considered them too risky for my energy levels, digestion, and mood. In reality, NOT eating them was affecting me more. There is truth to the notion that we always want what we can’t have!

Eating is one of the most amazing pleasures in life, and you don’t need to give up all the foods you love to be healthy. Moderation is key. For example, instead of starting each day with a creamy latte, opt for drip coffee with a bit of cream most days, then splurge on a small latte once a week or so. Little changes like this add up quickly!

There are tons of resources and recipes online to help get you started. Services such as Blue Apron or Plated can help you eat healthier and enjoy more varied food without requiring hours and hours of food preparation and dreadful trips to the grocery store.

Here are a few great resources to get you started:

Where to Go From Here?

If I’d eaten better and been more conscious of my health, I might have avoided my woes, or at least reduced their impact. Skipping breakfast, barely drinking fluids throughout the day, not eating fruits or vegetables, and preferring processed foods to whole foods may have compromised my immune system.

Don’t wait to learn things the hard way, like I did. Take small steps today to improve your current health and maintain it in the future.

A flu or headache will go away eventually, but chronic conditions such as osteoporosis and diabetes can stay with you for life. Don’t take your health for granted, and be proactive about taking care of yourself.

Simple lifestyle tweaks can go a long way toward improving your health and fitness for the long term! If you make the changes fit your lifestyle and schedule rather than the other way around, you’ll be better positioned for long-term success.

Start small and go from there, focus on what matters most to you, and remember that a happier you is a better you. And a better you means a better developer who produces better-quality code!

We hope you enjoyed this article. Let us know in the comments what resources you’d like to share with others, cool tips you have, or simply leave us your thoughts!

The post Health and Fitness for Developers appeared first on Ray Wenderlich.