In this video, you'll learn how to use multiple section controllers and how to update your list when your data has changed.

The post Screencast: IGListKit: Multiple Sections and Updates appeared first on Ray Wenderlich.

In the first part of this HTC Vive in Unity tutorial, you learned how to create an interaction system and use it to grab, snap and throw objects.

In this second part of this advanced HTC Vive tutorial, you’ll learn how to:

This tutorial is intended for an advanced audience, and it will skip a lot of the details on how to add components and make new GameObjects, scripts and so on. It’s assumed you already know how to handle these things. If not, check out our series on beginning Unity here.

Download the starter project, unzip it somewhere and open the folder inside Unity. Here’s an overview of the folders in the Project window:

Here’s what each will be used for:

Open up the Game scene inside the Scenes folder to get started.

At the moment there’s not even a bow present in the scene.

Create a new empty GameObject and name it Bow.

Set the Bow’s position to (X:-0.1, Y:4.5, Z:-1) and its rotation to (X:0, Y:270, Z:80).

Now drag the Bow model from the Models folder onto Bow in the Hierarchy to parent it.

Rename it BowMesh and set its position, rotation and scale to (X:0, Y:0, Z:0), (X:-90, Y:0, Z:-180) and (X:0.7, Y:0.7, Z:0.7) respectively.

It should now look like this:

Before moving on, I’d like to show you how the string of the bow works.

Select BowMesh and take a look at its Skinned Mesh Renderer. Unfold the BlendShapes field to reveal the Bend blendshape value. This is where the magic happens.

Keep looking at the bow. Change the Bend value from 0 to 100 and back by dragging and holding down your cursor on the word Bend in the Inspector. You should see the bow bending and the string being pulled back:

Set Bend back to 0 for now.

Remove the Animator component from BowMesh; all animations are done using blend shapes.

Now add an arrow by dragging an instance of RealArrow from the Prefabs folder onto Bow.

Name it BowArrow and reset its Transform component to move it into position relative to the Bow.

This arrow won’t be used as a regular arrow, so break the connection to its prefab by selecting GameObject\Break Prefab Instance from the top menu.

Unfold BowArrow and delete its child, Trail. This particle system is used by normal arrows only.

Remove the Rigidbody, second Box Collider and RWVR_Snap To Controller components from BowArrow.

All that should be left is a Transform and a Box Collider component.

Set the Box Collider’s Center to (X:0, Y:0, Z:-0.28) and set its size to (X:0.1, Y:0.1, Z:0.2). This will be the part the player can grab and pull back.

Select Bow again and add a Rigidbody and a Box Collider to it. This will make sure it has a physical presence in the world when not in use.

Change the Box Collider’s Center and Size to (X:0, Y:0, Z:-0.15) and (X:0.1, Y:1.45, Z:0.45) respectively.

Now add a RWVR_Snap To Controller component to it. Enable Hide Controller Model, set Snap Position Offset to (X:0, Y:0.08, Z:0) and Snap Rotation Offset to (X:90, Y:0, Z:0).

Play the scene and test if you can pick up the bow.

Before moving on, set up the tags on the controllers so future scripts will function correctly.

Unfold [CameraRig], select both controllers and set their tag to Controller.

In the next part you’ll make the bow work by doing some scripting.

The bow system you’ll create consists of three key parts:

Each of these needs its own script, and the three scripts work together to make the bow shoot.

For starters, the normal arrows need some code to allow them to get stuck in objects and be picked up again later.

Create a new C# script inside the Scripts folder and name it RealArrow. Note this script doesn’t belong in the RWVR folder as it’s not a part of the interaction system.

Open it up and remove the Start() and Update() methods.

Add the following variable declarations below the class declaration:

public BoxCollider pickupCollider; // 1
private Rigidbody rb; // 2
private bool launched; // 3
private bool stuckInWall; // 4

Quite simply:

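1. pickupCollider is the collider the player grabs to pick the arrow up.
2. rb caches the arrow’s Rigidbody.
3. launched is set to true when an arrow is launched from the bow.
4. stuckInWall is set to true when this arrow hits a solid object.

Now add the Awake() method: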

private void Awake()
{
    rb = GetComponent<Rigidbody>();
}

This simply caches the Rigidbody component that’s attached to this arrow.

Add the following method below Awake() :

private void FixedUpdate()
{
    if (launched && !stuckInWall && rb.velocity != Vector3.zero) // 1
    {
        rb.rotation = Quaternion.LookRotation(rb.velocity); // 2
    }
}

This snippet will make sure the arrow will keep facing the direction it’s headed. This allows for some cool skill shots, like shooting arrows in the sky and then watching them come down upon the ground again with their heads stuck in the soil. It also makes things more stable and prevents arrows from getting stuck in awkward positions.
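Here is a small engine-free sketch of why pointing the arrow along its velocity makes it land nose-first. It is written in Swift and is not Unity code. It assumes a Y-up world like Unity’s and uses a hypothetical Vec3 struct in place of Vector3. It computes only the elevation angle the arrow’s nose ends up with once Quaternion.LookRotation(rb.velocity) aligns it with the velocity.

```swift
import Foundation

// Hypothetical minimal stand-in for Unity's Vector3 (Y is up).
struct Vec3 {
    var x: Double
    var y: Double
    var z: Double
}

/// Angle of the arrow's nose above (+) or below (-) the horizontal plane, in degrees,
/// when the arrow faces along `velocity`, as LookRotation(rb.velocity) makes it do.
/// Returns nil for a zero velocity, mirroring the `rb.velocity != Vector3.zero` check.
func noseElevationDegrees(velocity v: Vec3) -> Double? {
    if v.x == 0 && v.y == 0 && v.z == 0 {
        return nil
    }
    let horizontalSpeed = (v.x * v.x + v.z * v.z).squareRoot()
    return atan2(v.y, horizontalSpeed) * 180 / Double.pi
}

// Early, middle and late in a high arc:
let rising = noseElevationDegrees(velocity: Vec3(x: 10, y: 10, z: 0))   // about 45 (nose up)
let level = noseElevationDegrees(velocity: Vec3(x: 0, y: 0, z: 20))     // 0 (top of the arc)
let falling = noseElevationDegrees(velocity: Vec3(x: 0, y: -5, z: 0))   // -90 (nose straight down)
```

As the vertical velocity changes sign during the flight, the angle sweeps from positive to negative. That is why an arrow shot into the sky comes down head-first.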

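FixedUpdate() does the following:

1. Checks that the arrow has been launched, isn’t stuck in a wall and is actually moving. A zero velocity has no direction to face.
2. Rotates the Rigidbody so the arrow faces the direction of its velocity.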

Add these methods below FixedUpdate():

public void SetAllowPickup(bool allow) // 1
{
    pickupCollider.enabled = allow;
}

public void Launch() // 2
{
    launched = true;
    SetAllowPickup(false);
}

Looking at the two commented sections:

1. SetAllowPickup() enables or disables the pickupCollider.
2. Launch() sets the launched flag to true and doesn’t allow the arrow to be picked up.

Add the next method to make sure the arrow doesn’t move once it hits a solid object:

private void GetStuck(Collider other) // 1
{
    launched = false; // 2
    rb.isKinematic = true; // 3
    stuckInWall = true; // 4
    SetAllowPickup(true); // 5
    transform.SetParent(other.transform); // 6
}

Taking each commented section in turn:

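1. GetStuck() takes a Collider as a parameter. This is what the arrow will attach itself to.
2. Resets the launched flag.
3. Makes the Rigidbody kinematic so physics no longer moves the arrow.
4. Sets the stuckInWall flag to true.
5. Allows the arrow to be picked up again.
6. Parents the arrow to the object it hit, so the arrow moves along with that object.

The final piece of this script to add is OnTriggerEnter(), which is called when the arrow’s trigger hits something: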

private void OnTriggerEnter(Collider other)
{
    if (other.CompareTag("Controller") || other.GetComponent<Bow>()) // 1
    {
        return;
    }

    if (launched && !stuckInWall) // 2
    {
        GetStuck(other);
    }
}

You’ll get an error saying Bow doesn’t exist yet. Ignore this for now: you’ll create the Bow script next.

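Here’s what the code above does:

1. Ignores collisions with the controllers and with the bow itself.
2. If the arrow is in flight and not already stuck, it gets stuck in whatever it hit.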

Save this script, then create a new C# script in the Scripts folder named Bow. Open it in your code editor.

Remove the Start() method and add this line right above the class declaration:

[ExecuteInEditMode]

This will let this script execute its methods even while you’re working in the editor. You’ll see why this can be quite handy in just a bit.

Add these variables above Update():

public Transform attachedArrow; // 1
public SkinnedMeshRenderer BowSkinnedMesh; // 2
public float blendMultiplier = 255f; // 3
public GameObject realArrowPrefab; // 4
public float maxShootSpeed = 50; // 5
public AudioClip fireSound; // 6

This is what they’ll be used for:

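1. attachedArrow is the arrow that sits in the bow (BowArrow).
2. BowSkinnedMesh is the bow’s Skinned Mesh Renderer, whose Bend blend shape makes the bow bend.
3. blendMultiplier: the distance between the bow and the attached arrow is multiplied by blendMultiplier to get the final Bend value for the blend shape.
4. realArrowPrefab is the RealArrow prefab that’s spawned each time the bow shoots.
5. maxShootSpeed is multiplied by the pull distance to get the arrow’s launch speed.
6. fireSound is the clip played when an arrow is shot.

Add the following helper method below the variables: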

bool IsArmed()
{
    return attachedArrow.gameObject.activeSelf;
}

This simply returns true if the arrow is enabled. It’s much easier to call this method than to write out attachedArrow.gameObject.activeSelf each time.

Add the following to the Update() method:

float distance = Vector3.Distance(transform.position, attachedArrow.position); // 1
BowSkinnedMesh.SetBlendShapeWeight(0, Mathf.Max(0, distance * blendMultiplier)); // 2

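Here’s what each of these lines does:

1. Calculates the distance between the bow and the arrow.
2. Sets the bow’s Bend blend shape (index 0) to that distance multiplied by blendMultiplier, never going below 0.

Next, add these methods below Update():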

private void Arm() // 1
{
    attachedArrow.gameObject.SetActive(true);
}

private void Disarm()
{
    BowSkinnedMesh.SetBlendShapeWeight(0, 0); // 2
    attachedArrow.position = transform.position; // 3
    attachedArrow.gameObject.SetActive(false); // 4
}

These methods handle the loading and unloading of arrows in the bow.

Add OnTriggerEnter() below Disarm():

private void OnTriggerEnter(Collider other) // 1
{
    if (
        !IsArmed()
        && other.CompareTag("InteractionObject")
        && other.GetComponent<RealArrow>()
        && !other.GetComponent<RWVR_InteractionObject>().IsFree() // 2
    ) {
        Destroy(other.gameObject); // 3
        Arm(); // 4
    }
}

This handles what should happen when a trigger collides with the bow.

1. OnTriggerEnter() receives a Collider as a parameter. This is the trigger that hit the bow.
2. This if statement evaluates to true if the bow is unarmed and is hit by a RealArrow. There are a few checks to make sure it only reacts to arrows that are held by the player.
3. Destroys the held RealArrow.
4. Arms the bow.

This code is essential to make it possible for a player to rearm the bow once the initially loaded arrow has been shot.
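The rearm condition can be written as a pure function, which makes the four checks easy to test one at a time. This Swift sketch is not Unity code. TouchingObject is a hypothetical summary of the collider and its components. Its isFree field mirrors RWVR_InteractionObject.IsFree() and is read here as "not held by a controller".

```swift
// Hypothetical summary of the object whose trigger touched the bow.
struct TouchingObject {
    var tag: String
    var isRealArrow: Bool   // has a RealArrow component
    var isFree: Bool        // RWVR_InteractionObject.IsFree(): not held by a controller
}

/// Same four checks as the if statement in Bow.OnTriggerEnter().
func shouldRearm(bowIsArmed: Bool, other: TouchingObject) -> Bool {
    return !bowIsArmed
        && other.tag == "InteractionObject"
        && other.isRealArrow
        && !other.isFree
}

// An arrow lying on the table is free, so touching the bow with it does nothing.
let arrowOnTable = TouchingObject(tag: "InteractionObject", isRealArrow: true, isFree: true)
print(shouldRearm(bowIsArmed: false, other: arrowOnTable))
```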

The final method shoots the arrow. Add this below OnTriggerEnter():

public void ShootArrow()
{
    GameObject arrow = Instantiate(realArrowPrefab, transform.position, transform.rotation); // 1
    float distance = Vector3.Distance(transform.position, attachedArrow.position); // 2
    arrow.GetComponent<Rigidbody>().velocity = arrow.transform.forward * distance * maxShootSpeed; // 3
    AudioSource.PlayClipAtPoint(fireSound, transform.position); // 4
    GetComponent<RWVR_InteractionObject>().currentController.Vibrate(3500); // 5
    arrow.GetComponent<RealArrow>().Launch(); // 6
    Disarm(); // 7
}

This might seem like a lot of code, but it’s quite simple:

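1. Spawns a new RealArrow at the bow’s position and rotation.
2. Calculates the distance between the bow and the attached arrow and stores it in distance.
3. Gives the new arrow a forward velocity based on distance. The further the string gets pulled back, the more velocity the arrow will have.
4. Plays the firing sound at the bow’s position.
5. Vibrates the controller that’s holding the bow.
6. Calls the new arrow’s Launch() method.
7. Disarms the bow.

Time to set up the bow in the inspector!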

Save this script and return to the editor.

Select Bow in the Hierarchy and add a Bow component.

Expand Bow to reveal its children and drag BowArrow to the Attached Arrow field.

Now drag BowMesh to the Bow Skinned Mesh field and set Blend Multiplier to 353.

Drag a RealArrow prefab from the Prefabs folder onto the Real Arrow Prefab field and drag the FireBow sound from the Sounds folder to the Fire Sound field.

This is what the Bow component should look like when you’re finished:

Remember how the skinned mesh renderer affected the bow model? Move the BowArrow in the Scene view on its local Z-axis to test what the full bow bend effect looks like:

That’s pretty sweet looking!
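With the Inspector values above (Blend Multiplier 353, Max Shoot Speed 50), both the bend and the launch speed grow linearly with how far BowArrow is pulled away from the bow. Here is a small Swift sketch of that arithmetic, outside Unity. Distances are in Unity units, and the function names are hypothetical.

```swift
// Values entered in the Bow component's Inspector in this tutorial.
let blendMultiplier = 353.0
let maxShootSpeed = 50.0

/// Bow.Update(): Bend weight = distance * blendMultiplier, never below 0.
func bendWeight(pullDistance: Double) -> Double {
    return max(0, pullDistance * blendMultiplier)
}

/// Bow.ShootArrow(): velocity = forward * distance * maxShootSpeed. Because
/// transform.forward is a unit vector, the speed is just distance * maxShootSpeed.
func launchSpeed(pullDistance: Double) -> Double {
    return pullDistance * maxShootSpeed
}

for d in [0.0, 0.1, 0.2, 0.4] {
    print("pull \(d) -> Bend \(bendWeight(pullDistance: d)), speed \(launchSpeed(pullDistance: d))")
}
```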

You’ll now need to set up the RealArrow to work as intended.

Select RealArrow in the Hierarchy and add a Real Arrow component to it.

Now drag the Box Collider with Is Trigger disabled to the Pickup Collider slot.

Click the Apply button at the top of the Inspector to apply this change to all RealArrow prefabs as well.
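RealArrow is essentially a small state machine driven by a few flags. Here is an engine-free Swift sketch of the same transitions. ArrowModel is hypothetical, and its kinematic and pickupEnabled properties stand in for rb.isKinematic and pickupCollider.enabled. The sketch assumes a fresh arrow starts with its pickup collider enabled.

```swift
/// Hypothetical, engine-free model of RealArrow's state.
struct ArrowModel {
    var launched = false
    var stuckInWall = false
    var pickupEnabled = true   // assumption: a fresh arrow can be picked up
    var kinematic = false      // stands in for rb.isKinematic

    /// RealArrow.Launch()
    mutating func launch() {
        launched = true
        pickupEnabled = false
    }

    /// RealArrow.OnTriggerEnter(): ignore controllers and the bow,
    /// otherwise get stuck if the arrow is still in flight.
    mutating func triggerEnter(hitControllerOrBow: Bool) {
        if hitControllerOrBow { return }
        if launched && !stuckInWall { getStuck() }
    }

    /// RealArrow.GetStuck()
    private mutating func getStuck() {
        launched = false
        kinematic = true
        stuckInWall = true
        pickupEnabled = true
    }
}

var arrow = ArrowModel()
arrow.launch()                                  // in flight, can't be grabbed
arrow.triggerEnter(hitControllerOrBow: false)   // hits a wall and sticks
print(arrow.stuckInWall, arrow.pickupEnabled)
```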

The final piece of the puzzle is the special arrow that sits in the bow.

The arrow in the bow needs to be pulled back by the player in order to bend the bow, and then released to fire an arrow.

Create a new C# script inside the Scripts \ RWVR folder and name it RWVR_ArrowInBow. Open it up in a code editor and remove the Start() and Update() methods.

Make this class derive from RWVR_InteractionObject by replacing the following line:

public class RWVR_ArrowInBow : MonoBehaviour

With this:

public class RWVR_ArrowInBow : RWVR_InteractionObject

Add these variables below the class declaration:

public float minimumPosition; // 1
public float maximumPosition; // 2
private Transform attachedBow; // 3
private const float arrowCorrection = 0.3f; // 4

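Here’s what they’re for:

1. minimumPosition is the lowest local Z position the arrow can be pulled back to.
2. maximumPosition is the highest local Z position the arrow can reach.
3. attachedBow holds a reference to the bow this arrow belongs to.
4. arrowCorrection is a fixed offset added to the arrow’s position so it lines up correctly with the controller.

Add the following method below the variable declarations: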

public override void Awake()
{
    base.Awake();
    attachedBow = transform.parent;
}

This calls the base class’ Awake() method to cache the transform and stores a reference to the bow in the attachedBow variable.

Add the following method to react while the player holds the trigger button:

public override void OnTriggerIsBeingPressed(RWVR_InteractionController controller) // 1
{
    base.OnTriggerIsBeingPressed(controller); // 2
    Vector3 arrowInBowSpace = attachedBow.InverseTransformPoint(controller.transform.position); // 3
    cachedTransform.localPosition = new Vector3(0, 0, arrowInBowSpace.z + arrowCorrection); // 4
}

Taking it step-by-step:

1. Override OnTriggerIsBeingPressed() and get the controller that’s interacting with this arrow as a parameter.
2. Call the base method.
3. Get the controller’s position in the bow’s local space with InverseTransformPoint(). This allows for the arrow to be pulled back correctly, even though the controller isn’t perfectly aligned with the bow on its local Z-axis.
4. Move the arrow to that position, adding arrowCorrection to it on its Z-axis to get the correct value.

Now add the following method:

public override void OnTriggerWasReleased(RWVR_InteractionController controller) // 1
{
    attachedBow.GetComponent<Bow>().ShootArrow(); // 2
    currentController.Vibrate(3500); // 3
    base.OnTriggerWasReleased(controller); // 4
}

This method is called when the arrow is released.

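1. Override the OnTriggerWasReleased() method and get the controller that’s interacting with this arrow as a parameter.
2. Shoot the arrow.
3. Vibrate the currentController.
4. Call the base OnTriggerWasReleased() method.

Add this method below OnTriggerWasReleased():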

void LateUpdate()
{
    // Limit position
    float zPos = cachedTransform.localPosition.z; // 1
    zPos = Mathf.Clamp(zPos, minimumPosition, maximumPosition); // 2
    cachedTransform.localPosition = new Vector3(0, 0, zPos); // 3

    // Limit rotation
    cachedTransform.localRotation = Quaternion.Euler(Vector3.zero); // 4

    if (currentController)
    {
        currentController.Vibrate(System.Convert.ToUInt16(500 * -zPos)); // 5
    }
}

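LateUpdate() is called every frame, after all Update() methods have run. Here it limits the position and rotation of the arrow and vibrates the controller to simulate the effort needed to pull the arrow back.

1. Store the arrow’s local Z position in zPos.
2. Clamp zPos between the minimum and maximum allowed position.
3. Apply the clamped position, keeping the arrow centered on its local X and Y axes.
4. Reset the arrow’s local rotation to Quaternion.Euler(Vector3.zero), which means no rotation relative to the bow.
5. If a controller is holding the arrow, vibrate it. The further back the arrow is pulled, the stronger the vibration.

Save this script and return to the editor.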

Unfold Bow in the Hierarchy and select its child BowArrow. Add a RWVR_Arrow In Bow component to it and set Minimum Position to -0.4.
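Together, OnTriggerIsBeingPressed() and LateUpdate() turn the controller’s position into a clamped arrow position and a vibration strength. The Swift sketch below follows that pipeline along the bow’s Z-axis only. It makes one simplifying assumption: the bow has no rotation or scale, so InverseTransformPoint() reduces to a subtraction. The 0.3 correction and the -0.4 minimum come from this tutorial. The maximum of 0 is the default value of an unassigned float field. The function names are hypothetical.

```swift
// Values from this tutorial: arrowCorrection in RWVR_ArrowInBow, Minimum Position
// set in the Inspector, Maximum Position left at its default of 0.
let arrowCorrection = 0.3
let minimumPosition = -0.4
let maximumPosition = 0.0

/// OnTriggerIsBeingPressed(), assuming the bow has no rotation or scale, so
/// InverseTransformPoint() reduces to subtracting the bow's position.
func rawArrowZ(controllerZ: Double, bowZ: Double) -> Double {
    return (controllerZ - bowZ) + arrowCorrection
}

/// LateUpdate() steps 1-3: keep the arrow between the two limits.
func clampedArrowZ(_ z: Double) -> Double {
    return min(max(z, minimumPosition), maximumPosition)
}

/// LateUpdate() step 5: 500 * -zPos as an unsigned 16-bit value.
/// (C#'s Convert.ToUInt16 rounds halves to even; .rounded() rounds them away from zero.)
func vibrationStrength(clampedZ: Double) -> UInt16 {
    return UInt16((500 * -clampedZ).rounded())
}

let pulledZ = clampedArrowZ(rawArrowZ(controllerZ: -0.5, bowZ: 0))
print(pulledZ, vibrationStrength(clampedZ: pulledZ))
```

Under these assumptions, a controller 0.5 units behind the bow gives an arrow Z of about -0.2 and a vibration strength of 100. Pulling back further than roughly 0.7 units is clamped to -0.4, which gives a strength of 200.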

Save the scene, and get your HMD and controllers ready to test out the game!

Pick up the bow with one controller and pull the arrow back with the other one.

Release the trigger to shoot an arrow, and try rearming the bow by dragging an arrow from the table onto it.

The last thing you’ll create in this tutorial is a backpack (or a quiver, in this case) from which you can grab new arrows to load in the bow.

For that to work, you’ll need some new scripts.

In order to know if the player is holding certain objects with the controllers, you’ll need a controller manager which references both controllers.

Create a new C# script in the Scripts/RWVR folder and name it RWVR_ControllerManager. Open it in a code editor.

Remove the Start() and Update() methods and add these variables:

public static RWVR_ControllerManager Instance; // 1
public RWVR_InteractionController leftController; // 2
public RWVR_InteractionController rightController; // 3

Here’s what the above variables are for:

1. A public static reference to this script so it can be called from all other scripts.
2. A reference to the left controller.
3. A reference to the right controller.

Add the following method below the variables:

private void Awake()
{
    Instance = this;
}

This saves a reference to this script in the Instance variable.

Now add this method below Awake():

public bool AnyControllerIsInteractingWith<T>() // 1
{
    if (leftController.InteractionObject && leftController.InteractionObject.GetComponent<T>() != null) // 2
    {
        return true;
    }

    if (rightController.InteractionObject && rightController.InteractionObject.GetComponent<T>() != null) // 3
    {
        return true;
    }

    return false; // 4
}

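This helper method checks whether either controller is interacting with an object that has a certain component attached to it:

1. A generic method that takes the component type T to look for.
2. Returns true if the left controller is interacting with an object that has a T component.
3. Does the same check for the right controller.
4. Returns false if neither controller is holding such an object.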

Save this script and return to the editor.

The final script is for the backpack itself.

Create a new C# script in the Scripts \ RWVR folder and name it RWVR_SpecialObjectSpawner.

Open it in your favorite code editor and replace this line:

public class RWVR_SpecialObjectSpawner : MonoBehaviour

With this:

public class RWVR_SpecialObjectSpawner : RWVR_InteractionObject

This makes the backpack inherit from RWVR_InteractionObject.

Now remove both the Start() and Update() methods and add the following variables in their place:

public GameObject arrowPrefab; // 1
public List<GameObject> randomPrefabs = new List<GameObject>(); // 2

These are the GameObjects which will be spawned out of the backpack: arrowPrefab when the player is holding the bow, and otherwise a random prefab from randomPrefabs.

Add the following method:

private void SpawnObjectInHand(GameObject prefab, RWVR_InteractionController controller) // 1
{
    GameObject spawnedObject = Instantiate(prefab, controller.snapColliderOrigin.position, controller.transform.rotation); // 2
    controller.SwitchInteractionObjectTo(spawnedObject.GetComponent<RWVR_InteractionObject>()); // 3
    OnTriggerWasReleased(controller); // 4
}

This method attaches an object to the player’s controller, as if they grabbed it from behind their back.

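1. This method takes two parameters: prefab is the GameObject that will be spawned, while controller is the controller it will attach to.
2. Spawns the prefab at the controller’s snap collider origin with the controller’s rotation, and stores it in spawnedObject.
3. Switches the controller’s InteractionObject to the object that was just spawned.
4. Releases the backpack by calling its OnTriggerWasReleased() method, since the controller now holds the spawned object instead.

The next method decides what kind of object should be spawned when the player presses the trigger button over the backpack.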

Add the following method below SpawnObjectInHand():

public override void OnTriggerWasPressed(RWVR_InteractionController controller) // 1
{
    base.OnTriggerWasPressed(controller); // 2

    if (RWVR_ControllerManager.Instance.AnyControllerIsInteractingWith<Bow>()) // 3
    {
        SpawnObjectInHand(arrowPrefab, controller);
    }
    else // 4
    {
        SpawnObjectInHand(randomPrefabs[UnityEngine.Random.Range(0, randomPrefabs.Count)], controller);
    }
}

Here’s what each part does:

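1. Override the OnTriggerWasPressed() method.
2. Call the base OnTriggerWasPressed() method.
3. If either controller is holding a bow, spawn an arrow in the hand that pressed the trigger.
4. Otherwise, spawn a random object from the randomPrefabs list.

Save this script and return to the editor.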

Create a new Cube in the Hierarchy, name it BackPack and drag it onto [CameraRig]\ Camera (head) to parent it to the player’s head.

Set its position and scale to (X:0, Y:-0.25, Z:-0.45) and (X:0.6, Y:0.5, Z:0.5) respectively.

The backpack is now positioned right behind and under the player’s head.

Set the Box Collider’s Is Trigger to true; this object doesn’t need to collide with anything.

Set Cast Shadows to Off and disable Receive Shadows on the Mesh Renderer component.

Now add a RWVR_Special Object Spawner component and drag a RealArrow from the Prefabs folder onto the Arrow Prefab field.

Finally, drag a Book and a Die prefab from the same folder to the Random Prefabs list.
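The spawner’s choice comes down to one generic check and one random pick. This Swift sketch mirrors that logic with hypothetical types (HeldObject, Bow, Book) and string prefab names. The random index is passed in as a closure so the choice can be tested. UnityEngine.Random.Range(int, int) excludes its upper bound, so Range(0, randomPrefabs.Count) always gives a valid index. The C# code still fails on an empty list, whereas the sketch returns nil instead.

```swift
// Hypothetical stand-ins for objects a controller can hold.
protocol HeldObject {}
struct Bow: HeldObject {}
struct Book: HeldObject {}

/// Swift analogue of AnyControllerIsInteractingWith<T>().
func anyControllerIsHolding<T>(_ type: T.Type, left: HeldObject?, right: HeldObject?) -> Bool {
    for held in [left, right] {
        if let object = held, object is T {
            return true
        }
    }
    return false
}

/// Mirrors RWVR_SpecialObjectSpawner.OnTriggerWasPressed(): an arrow while a bow
/// is held, otherwise a random prefab. `randomIndex(count)` must return 0..<count.
func prefabToSpawn(arrowPrefab: String,
                   randomPrefabs: [String],
                   left: HeldObject?,
                   right: HeldObject?,
                   randomIndex: (Int) -> Int) -> String? {
    if anyControllerIsHolding(Bow.self, left: left, right: right) {
        return arrowPrefab
    }
    guard !randomPrefabs.isEmpty else {
        return nil   // the C# version would throw on an empty list
    }
    return randomPrefabs[randomIndex(randomPrefabs.count)]
}

let spawned = prefabToSpawn(arrowPrefab: "RealArrow",
                            randomPrefabs: ["Book", "Die"],
                            left: Bow(),
                            right: nil,
                            randomIndex: { _ in 0 })
print(spawned ?? "nothing")
```

Outside of tests, you could pass something like { Int.random(in: 0..<$0) } (Swift 4.2 and later) as randomIndex.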

Now add a new empty GameObject, name it ControllerManager and add a RWVR_Controller Manager component to it.

Expand [CameraRig] and drag Controller (left) to the Left Controller slot and Controller (right) to the Right Controller slot.

Now save the scene and test out the backpack. Try grabbing behind your back and see what stuff you’ll pull out!

That concludes this tutorial! You now have a fully functional bow and arrow and an interaction system you can expand with ease!

You can download the finished project here.

In this tutorial you’ve learned how to create the following features and updates for your HTC Vive game:

If you’re interested in learning more about creating killer games with Unity, check out our book, Unity Games By Tutorials.

In this book, you create four complete games from scratch:

By the end of this book, you’ll be ready to make your own games for Windows, macOS, iOS, and more!

This book is for complete beginners to Unity, as well as for those who’d like to bring their Unity skills to a professional level. The book assumes you have some prior programming experience (in a language of your choice).

If you have any comments or suggestions, please join the discussion below!

The post Advanced VR Mechanics With Unity and the HTC Vive – Part 2 appeared first on Ray Wenderlich.

Note: This tutorial requires Xcode 9 Beta 1 or later, Swift 4 and iOS 11.

Machine learning is all the rage. Many have heard about it, but few know what it is.

This iOS machine learning tutorial will introduce you to Core ML and Vision, two brand-new frameworks introduced in iOS 11.

Specifically, you’ll learn how to use these new APIs with the Places205-GoogLeNet model to classify the scene of an image.

Download the starter project. It already contains a user interface to display an image and let the user pick another image from their photo library, so you can focus on implementing the machine learning and vision aspects of the app.

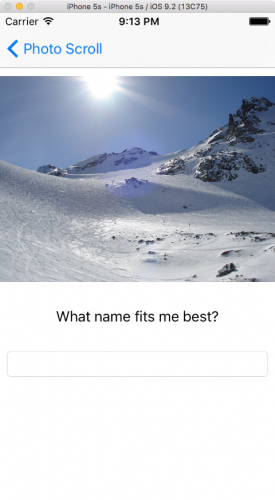

Build and run your project; you’ll see an image of a city at night, and a button:

Choose another image from the photo library in the Photos app. This starter project’s Info.plist already has a Privacy – Photo Library Usage Description, so you might be prompted to allow usage.

The gap between the image and the button contains a label, where you’ll display the model’s classification of the image’s scene.

Machine learning is a type of artificial intelligence where computers “learn” without being explicitly programmed. Instead of coding an algorithm, machine learning tools enable computers to develop and refine algorithms, by finding patterns in huge amounts of data.

Since the 1950s, AI researchers have developed many approaches to machine learning. Apple’s Core ML framework supports neural networks, tree ensembles, support vector machines, generalized linear models, feature engineering and pipeline models. However, neural networks have produced many of the most spectacular recent successes, starting with Google’s 2012 use of YouTube videos to train its AI to recognize cats and people. Only five years later, Google is sponsoring a contest to identify 5000 species of plants and animals. Apps like Siri and Alexa also owe their existence to neural networks.

A neural network tries to model human brain processes with layers of nodes, linked together in different ways. Each additional layer requires a large increase in computing power: Inception v3, an object-recognition model, has 48 layers and approximately 20 million parameters. But the calculations are basically matrix multiplication, which GPUs handle extremely efficiently. The falling cost of GPUs enables people to create multilayer deep neural networks, hence the term deep learning.
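Here is a toy fully connected layer in Swift that shows what "basically matrix multiplication" means. Each output node takes the dot product of its weights with the inputs, adds a bias and applies a ReLU activation. This only illustrates the idea. It is not how Core ML, Metal or Inception v3 are implemented.

```swift
/// A toy fully connected layer with ReLU activation:
/// output[i] = max(0, bias[i] + sum over j of weights[i][j] * input[j]).
func denseLayer(weights: [[Double]], bias: [Double], input: [Double]) -> [Double] {
    precondition(weights.count == bias.count, "one bias per output node")
    var activations: [Double] = []
    for (row, b) in zip(weights, bias) {
        precondition(row.count == input.count, "one weight per input")
        var sum = b
        for (w, x) in zip(row, input) {
            sum += w * x   // multiply-accumulate: the core of matrix multiplication
        }
        activations.append(max(0, sum))
    }
    return activations
}

// Two stacked layers: each layer's output is the next layer's input.
let hidden = denseLayer(weights: [[1, 2], [3, 4]], bias: [0, -10], input: [1, 1])
let output = denseLayer(weights: [[1, 1]], bias: [0.5], input: hidden)
print(hidden, output)
```

Every extra layer adds another round of these multiply-accumulate loops. That is why deeper networks need so much more computing power, and why GPUs, which run many of these operations in parallel, handle them well.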

Neural networks need a large amount of training data, ideally representing the full range of possibilities. The explosion in user-generated data has also contributed to the renaissance of machine learning.

Training the model means supplying the neural network with training data, and letting it calculate a formula for combining the input parameters to produce the output(s). Training happens offline, usually on machines with many GPUs.

To use the model, you give it new inputs, and it calculates outputs: this is called inferencing. Inference still requires a lot of computing, to calculate outputs from new inputs. Doing these calculations on handheld devices is now possible because of frameworks like Metal.

As you’ll see at the end of this tutorial, deep learning is far from perfect. It’s really hard to construct a truly representative set of training data, and it’s all too easy to over-train the model so it gives too much weight to quirky characteristics.

Apple introduced NSLinguisticTagger in iOS 5 to analyze natural language. Metal came in iOS 8, providing low-level access to the device’s GPU.

Last year, Apple added Basic Neural Network Subroutines (BNNS) to its Accelerate framework, enabling developers to construct neural networks for inferencing (not training).

And this year, Apple has given you Core ML and Vision!

You can also wrap any image-analysis Core ML model in a Vision model, which is what you’ll do in this tutorial. Because these two frameworks are built on Metal, they run efficiently on the device, so you don’t need to send your users’ data to a server.

This tutorial uses the Places205-GoogLeNet model, which you can download from Apple’s Machine Learning page. Scroll down to Working with Models, and download the first one. While you’re there, take note of the other three models, which all detect objects — trees, animals, people, etc. — in an image.

After you download GoogLeNetPlaces.mlmodel, drag it from Finder into the Resources group in your project’s Project Navigator:

Select this file, and wait for a moment. An arrow will appear when Xcode has generated the model class:

Click the arrow to see the generated class:

Xcode has generated input and output classes, and the main class GoogLeNetPlaces, which has a model property and two prediction methods.

GoogLeNetPlacesInput has a sceneImage property of type CVPixelBuffer. “Whazzat!?” we all cry together. But fear not: the Vision framework will take care of converting our familiar image formats into the correct input type. :]

The Vision framework also converts GoogLeNetPlacesOutput properties into its own results type, and manages calls to prediction methods, so out of all this generated code, your code will use only the model property.

Finally, you get to write some code! Open ViewController.swift, and import the two frameworks, just below import UIKit:

import CoreML
import Vision

Next, add the following extension below the IBActions extension:

// MARK: - Methods
extension ViewController {

    func detectScene(image: CIImage) {
        answerLabel.text = "detecting scene..."

        // Load the ML model through its generated class
        guard let model = try? VNCoreMLModel(for: GoogLeNetPlaces().model) else {
            fatalError("can't load Places ML model")
        }
    }
}

Here’s what you’re doing:

First, you display a message so the user knows something is happening.

The VNCoreMLModel(for:) initializer can throw an error, so you must use try when creating it. Here, try? turns a failure into nil, which the guard statement handles.

VNCoreMLModel is simply a container for a Core ML model used with Vision requests.

The standard Vision workflow is to create a model, create one or more requests, and then create and run a request handler. You’ve just created the model, so your next step is to create a request.

Add the following lines to the end of detectScene(image:):

// Create a Vision request with completion handler
let request = VNCoreMLRequest(model: model) { [weak self] request, error in
    guard let results = request.results as? [VNClassificationObservation],
        let topResult = results.first else {
            fatalError("unexpected result type from VNCoreMLRequest")
    }

    // Update UI on main queue
    let article = (self?.vowels.contains(topResult.identifier.first!))! ? "an" : "a"
    DispatchQueue.main.async { [weak self] in
        self?.answerLabel.text = "\(Int(topResult.confidence * 100))% it's \(article) \(topResult.identifier)"
    }
}

VNCoreMLRequest is an image analysis request that uses a Core ML model to do the work. Its completion handler receives request and error objects.

You check that request.results is an array of VNClassificationObservation objects, which is what the Vision framework returns when the Core ML model is a classifier, rather than a predictor or image processor. And GoogLeNetPlaces is a classifier, because it predicts only one feature: the image’s scene classification.

A VNClassificationObservation has two properties: identifier, a String, and confidence, a number between 0 and 1 giving the probability that the classification is correct. When using an object-detection model, you would probably look at only those objects with confidence greater than some threshold, such as 30%.

You then take the first result, which will have the highest confidence value, and set the indefinite article to “a” or “an”, depending on the identifier’s first letter. Finally, you dispatch back to the main queue to update the label. You’ll soon see the classification work happens off the main queue, because it can be slow.
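You can try the threshold idea and the label formatting without Vision. In this Swift sketch, Classification is a hypothetical stand-in for VNClassificationObservation’s identifier and confidence properties. The 30% threshold is the example value mentioned above, applied here to classification results purely for illustration. The vowel set is an assumption about the starter project’s vowels property. The sketch picks the best result with max(by:) instead of relying on array order, and it avoids the force unwraps.

```swift
// Hypothetical stand-in for VNClassificationObservation's two properties.
struct Classification {
    let identifier: String
    let confidence: Float   // 0...1
}

// Assumed contents of the starter project's `vowels` property.
let vowels: Set<Character> = ["a", "e", "i", "o", "u"]

/// "a" or "an", chosen from the identifier's first letter (case-insensitively).
func article(for identifier: String) -> String {
    guard let first = identifier.lowercased().first else { return "a" }
    return vowels.contains(first) ? "an" : "a"
}

/// Drops results at or below `threshold`, then formats the most confident one
/// the same way as the tutorial's label. Returns nil if nothing passes.
func labelText(for results: [Classification], threshold: Float = 0.3) -> String? {
    let confident = results.filter { $0.confidence > threshold }
    guard let top = confident.max(by: { $0.confidence < $1.confidence }) else {
        return nil
    }
    return "\(Int(top.confidence * 100))% it's \(article(for: top.identifier)) \(top.identifier)"
}

let example = [Classification(identifier: "bridge", confidence: 0.25),
               Classification(identifier: "ocean", confidence: 0.75)]
print(labelText(for: example) ?? "no confident match")
```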

Now, on to the third step: creating and running the request handler.

Add the following lines to the end of detectScene(image:):

// Run the Core ML GoogLeNetPlaces classifier on global dispatch queue
let handler = VNImageRequestHandler(ciImage: image)
DispatchQueue.global(qos: .userInteractive).async {
    do {
        try handler.perform([request])
    } catch {
        print(error)
    }
}

VNImageRequestHandler is the standard Vision framework request handler; it isn’t specific to Core ML models. You give it the image that came into detectScene(image:) as an argument. And then you run the handler by calling its perform method, passing an array of requests. In this case, you have only one request.

The perform(_:) method can throw an error, so you call it with try inside a do-catch statement.

Whew, that was a lot of code! But now you simply have to call detectScene(image:) in two places.

Add the following lines at the end of viewDidLoad() and at the end of imagePickerController(_:didFinishPickingMediaWithInfo:):

guard let ciImage = CIImage(image: image) else {
    fatalError("couldn't convert UIImage to CIImage")
}
detectScene(image: ciImage)

Now build and run. It shouldn’t take long to see a classification:

Well, yes, there are skyscrapers in the image. There’s also a train.

Tap the button, and select the first image in the photo library: a close-up of some sun-dappled leaves:

Hmmm, maybe if you squint, you can imagine Nemo or Dory swimming around? But at least you know the “a” vs. “an” thing works. ;]

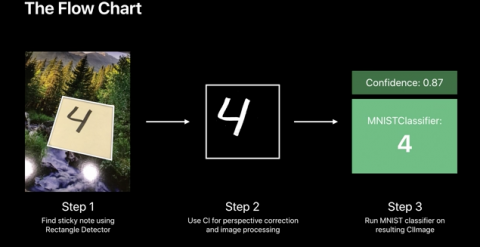

This tutorial’s project is similar to the sample project for WWDC 2017 Session 506 Vision Framework: Building on Core ML. The Vision + ML Example app uses the MNIST classifier, which recognizes hand-written numerals — useful for automating postal sorting. It also uses the native Vision framework method VNDetectRectanglesRequest, and includes Core Image code to correct the perspective of detected rectangles.

You can also download a different sample project from the Core ML documentation page. Inputs to the MarsHabitatPricePredictor model are just numbers, so the code uses the generated MarsHabitatPricer methods and properties directly, instead of wrapping the model in a Vision model. By changing the parameters one at a time, it’s easy to see the model is simply a linear regression:

137 * solarPanels + 653.50 * greenHouses + 5854 * acres
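That formula can be written as an ordinary Swift function, which makes the "change one input at a time" experiment easy to reproduce. The coefficients are the ones quoted above. The function name is hypothetical, and this is not the generated MarsHabitatPricer API.

```swift
/// The linear relationship quoted above, as a plain function.
func marsHabitatPrice(solarPanels: Double, greenhouses: Double, acres: Double) -> Double {
    return 137 * solarPanels + 653.50 * greenhouses + 5854 * acres
}

// Changing one input at a time moves the price by exactly that input's coefficient.
let base = marsHabitatPrice(solarPanels: 1, greenhouses: 1, acres: 1000)
let oneMorePanel = marsHabitatPrice(solarPanels: 2, greenhouses: 1, acres: 1000)
print(oneMorePanel - base)
```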

You can download the complete project for this tutorial here. If the model shows up as missing, replace it with the one you downloaded.

You’re now well-equipped to integrate an existing model into your app. Here are some resources that cover this in more detail:

From 2016:

Thinking about building your own model? I’m afraid that’s way beyond the scope of this tutorial (and my expertise). These resources might help you get started:

Last but not least, I really learned a lot from this concise history of AI from Andreessen Horowitz’s Frank Chen: AI and Deep Learning a16z podcast.

I hope you found this tutorial useful. Feel free to join the discussion below!

The post Core ML and Vision: Machine Learning in iOS 11 Tutorial appeared first on Ray Wenderlich.

Update note: This tutorial has been updated to Swift 4 and Xcode 9 by Lyndsey Scott. The original tutorial was written by Marin Todorov.

Core Text is a low-level text engine that, when used alongside the Core Graphics/Quartz framework, gives you fine-grained control over layout and formatting.

With iOS 7, Apple released a high-level library called Text Kit, which stores, lays out and displays text with various typesetting characteristics. Although Text Kit is powerful and usually sufficient when laying out text, Core Text can provide more control. For example, if you need to work directly with Quartz, use Core Text. If you need to build your own layout engines, Core Text will help you generate “glyphs and position them relative to each other with all the features of fine typesetting.”

This tutorial takes you through the process of creating a very simple magazine application using Core Text… for Zombies!

Oh, and Zombie Monthly’s readership has kindly agreed not to eat your brains as long as you’re busy using them for this tutorial… So you may want to get started soon! *gulp*

Note: To get the most out of this tutorial, you need to know the basics of iOS development first. If you’re new to iOS development, you should check out some of the other tutorials on this site first.

Open Xcode, create a new Swift universal project with the Single View Application Template and name it CoreTextMagazine.

Next, add the Core Text framework to your project:

Now that the project is set up, it’s time to start coding.

For starters, you’ll create a custom UIView, which will use Core Text in its draw(_:) method.

Create a new Cocoa Touch Class file named CTView subclassing UIView.

Open CTView.swift, and add the following under import UIKit:

import CoreText

Next, set this new custom view as the main view in the application. Open Main.storyboard, open the Utilities menu on the right-hand side, then select the Identity Inspector icon in its top toolbar. In the left-hand menu of the Interface Builder, select View. The Class field of the Utilities menu should now say UIView. To subclass the main view controller’s view, type CTView into the Class field and hit Enter.

Next, open CTView.swift and replace the commented-out draw(_:) with the following:

//1
override func draw(_ rect: CGRect) {
    // 2
    guard let context = UIGraphicsGetCurrentContext() else { return }
    // 3
    let path = CGMutablePath()
    path.addRect(bounds)
    // 4
    let attrString = NSAttributedString(string: "Hello World")
    // 5
    let framesetter = CTFramesetterCreateWithAttributedString(attrString as CFAttributedString)
    // 6
    let frame = CTFramesetterCreateFrame(framesetter, CFRangeMake(0, attrString.length), path, nil)
    // 7
    CTFrameDraw(frame, context)
}

Let’s go over this step-by-step.

1. draw(_:) runs automatically to render the view’s backing layer.
2. Unwrap the current graphics context, which you’ll use for drawing.
3. Create a path that bounds the drawing area, in this case the view’s entire bounds.
4. In Core Text, you use NSAttributedString, as opposed to String or NSString, to hold the text and its attributes. Initialize “Hello World” as an attributed string.
5. CTFramesetterCreateWithAttributedString creates a CTFramesetter with the supplied attributed string. CTFramesetter will manage your font references and your drawing frames.
6. Create a CTFrame by having CTFramesetterCreateFrame render the entire string within path.
7. CTFrameDraw draws the CTFrame in the given context.

That’s all you need to draw some simple text! Build, run and see the result.

Uh-oh… That doesn’t seem right, does it? Like many of the low-level APIs, Core Text uses a Y-flipped coordinate system. To make matters worse, the content is also flipped vertically!

Add the following code directly below the guard let context statement to fix the content orientation:

// Flip the coordinate system
context.textMatrix = .identity
context.translateBy(x: 0, y: bounds.size.height)
context.scaleBy(x: 1.0, y: -1.0)

This code flips the content by applying a transformation to the view’s context.
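It can help to follow a single point through the flip. As a worked sketch, with h standing for bounds.size.height: Core Graphics applies the most recently added transform to user-space coordinates first, so the scale S from scaleBy(x: 1.0, y: -1.0) acts before the translation T from translateBy(x: 0, y: bounds.size.height).

```latex
S(x, y) = (x,\,-y), \qquad T(x, y) = (x,\,y + h)
(T \circ S)(x, y) = T(x,\,-y) = (x,\; h - y)
y = 0 \;\mapsto\; h, \qquad y = h \;\mapsto\; 0
```

So Core Text’s bottom-left origin with y pointing up lines up with UIKit’s top-left origin with y pointing down. Setting textMatrix to .identity separately makes sure no previous text transform is applied to the glyphs on top of this one.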

Build and run the app. Don’t worry about the status bar overlap; you’ll learn how to fix this with margins later.

Congrats on your first Core Text app! The zombies are pleased with your progress.

If you’re a bit confused about the CTFramesetter and the CTFrame – that’s OK because it’s time for some clarification. :]

Here’s what the Core Text object model looks like:

When you create a CTFramesetter reference and provide it with an NSAttributedString, an instance of CTTypesetter is automatically created for you to manage your fonts. Next, you use the CTFramesetter to create one or more frames in which you’ll render text.

When you create a frame, you provide it with the subrange of text to render inside its rectangle. Core Text automatically creates a CTLine for each line of text and a CTRun for each piece of text with the same formatting. For example, Core Text would create a CTRun if you had several words in a row colored red, then another CTRun for the following plain text, then another CTRun for a bold sentence, etc. Core Text creates CTRuns for you based on the attributes of the supplied NSAttributedString. Furthermore, each of these CTRun objects can adopt different attributes, so you have fine control over kerning, ligatures, width, height and more.
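Core Text builds CTRun objects for you, but the grouping rule described above is easy to mimic. The sketch below is only an analogy, not Core Text’s API: it assumes each character’s attributes are reduced to a single style label (the Run type and groupIntoRuns function are hypothetical) and merges consecutive characters that share a label, much as Core Text starts a new CTRun whenever the attributes change.

```swift
// Analogy only: Core Text forms CTRuns itself. Here a character's attributes
// are reduced to a hypothetical style label such as "red" or "bold".
struct Run: Equatable {
    let style: String
    let text: String
}

// Merge consecutive characters that share a style into one Run,
// starting a new Run whenever the style changes.
func groupIntoRuns(_ styledCharacters: [(Character, String)]) -> [Run] {
    var runs: [Run] = []
    for (character, style) in styledCharacters {
        if let last = runs.last, last.style == style {
            runs[runs.count - 1] = Run(style: style, text: last.text + String(character))
        } else {
            runs.append(Run(style: style, text: String(character)))
        }
    }
    return runs
}

// Red words, then plain text, then a bold sentence: three runs.
let red = "Red words".map { ($0, "red") }
let plain = " and plain text. ".map { ($0, "plain") }
let bold = "Bold!".map { ($0, "bold") }
let runs = groupIntoRuns(red + plain + bold)
for run in runs {
    print(run.style, "->", run.text)
}
```

In the real framework the attributes come from the NSAttributedString, and each resulting CTRun can then be measured and drawn independently.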

Download and unarchive the zombie magazine materials.

Drag the folder into your Xcode project. When prompted, make sure Copy items if needed and Create groups are selected.

To create the app, you’ll need to apply various attributes to the text. You’ll create a simple text markup parser which will use tags to set the magazine’s formatting.

Create a new Cocoa Touch Class file named MarkupParser subclassing NSObject.

First things first, take a quick look at zombies.txt. See how it contains bracketed formatting tags throughout the text? The “img src” tags reference magazine images and the “font color/face” tags determine text color and font.

Open MarkupParser.swift and replace its contents with the following:

import UIKit
import CoreText

class MarkupParser: NSObject {

    // MARK: - Properties
    var color: UIColor = .black
    var fontName: String = "Arial"
    var attrString: NSMutableAttributedString!
    var images: [[String: Any]] = []

    // MARK: - Initializers
    override init() {
        super.init()
    }

    // MARK: - Internal
    func parseMarkup(_ markup: String) {
    }
}

Here you’ve added properties to hold the font and text color and set their defaults; created a variable to hold the attributed string produced by parseMarkup(_:); and created an array that will eventually hold dictionaries describing the size, location and filename of each image found in the text.

Writing a parser is usually hard work, but this tutorial’s parser will be very simple and support only opening tags, meaning a tag will set the style of the text following it until a new tag is found. The text markup will look like this:

These are <font color="red">red<font color="black"> and <font color="blue">blue <font color="black">words.

and produce output like this:

These are red and blue words.

Let’s get parsin’!

Add the following to parseMarkup(_:):

//1
attrString = NSMutableAttributedString(string: "")
//2
do {
    let regex = try NSRegularExpression(pattern: "(.*?)(<[^>]+>|\\Z)",
                                        options: [.caseInsensitive,
                                                  .dotMatchesLineSeparators])
    //3
    // NSRange lengths count UTF-16 code units, so use utf16.count rather than characters.count
    let chunks = regex.matches(in: markup,
                               options: NSRegularExpression.MatchingOptions(rawValue: 0),
                               range: NSRange(location: 0,
                                              length: markup.utf16.count))
} catch _ {
}

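1. attrString starts out empty, but will eventually contain the parsed markup.
2. This regex matches a block of text together with the tag immediately following it: (.*?) lazily captures the text up to the next tag, and (<[^>]+>|\Z) captures that tag or, for the last block, the end of the string.
3. Search the entire range of the markup for regex matches, then produce an array of the resulting NSTextCheckingResults.

Note: To learn more about regular expressions, check out NSRegularExpression Tutorial.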
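If you’d like to check what chunks will contain before building the attributed string, the following Foundation-only sketch applies the same pattern and options and returns each match’s two capture groups. The chunks(of:) helper is hypothetical, and it converts ranges with Foundation’s Range(_:in:) initializer rather than the String extension you’re about to write.

```swift
import Foundation

// Hypothetical helper: runs the tutorial's chunking regex and returns, for each
// match, capture group 1 (the text) and capture group 2 (the tag, or "" at \Z).
func chunks(of markup: String) throws -> [(text: String, tag: String)] {
    let regex = try NSRegularExpression(pattern: "(.*?)(<[^>]+>|\\Z)",
                                        options: [.caseInsensitive, .dotMatchesLineSeparators])
    // NSRange lengths are measured in UTF-16 code units.
    let whole = NSRange(location: 0, length: markup.utf16.count)
    var result: [(text: String, tag: String)] = []
    for match in regex.matches(in: markup, options: [], range: whole) {
        guard let textRange = Range(match.range(at: 1), in: markup),
              let tagRange = Range(match.range(at: 2), in: markup) else { continue }
        result.append((text: String(markup[textRange]), tag: String(markup[tagRange])))
    }
    return result
}

let sampleChunks = try! chunks(of: "These are <font color=\"red\">red<font color=\"black\"> words.")
for chunk in sampleChunks {
    print("text: \"\(chunk.text)\"  tag: \"\(chunk.tag)\"")
}
```

Each element pairs a piece of text with the tag that ends it; the last block’s tag is empty because it matched \Z. The regex engine may also report a final empty match at the very end of the string, which is why the parser below tolerates empty chunks.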

Now you’ve parsed all the text and formatting tags into chunks, you’ll loop through chunks to build the attributed string.

But before that, did you notice how matches(in:options:range:) accepts an NSRange as an argument? There are going to be lots of NSRange to Range conversions as you apply NSRegularExpression functions to your markup String. Swift’s been a pretty good friend to us all, so it deserves a helping hand.

Still in MarkupParser.swift, add the following extension to the end of the file:

// MARK: - String
extension String {
    func range(from range: NSRange) -> Range<String.Index>? {
        guard let from16 = utf16.index(utf16.startIndex,
                                       offsetBy: range.location,
                                       limitedBy: utf16.endIndex),
            let to16 = utf16.index(from16, offsetBy: range.length, limitedBy: utf16.endIndex),
            let from = String.Index(from16, within: self),
            let to = String.Index(to16, within: self) else {
                return nil
        }
        return from ..< to
    }
}

This function converts the String’s starting and ending indices, as represented by an NSRange, to String.UTF16View.Index values (positions in the string’s collection of UTF-16 code units), then converts each String.UTF16View.Index to a String.Index; combined, the two produce Swift’s range type, Range<String.Index>. As long as the indices are valid, the method returns the Range representation of the original NSRange; otherwise, it returns nil.
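For comparison, here’s a minimal sketch, assuming Swift 4 or later, that leans on Foundation’s built-in Range(_:in:) initializer, which performs the same NSRange-to-Range<String.Index> conversion and also returns nil for out-of-bounds ranges. The swiftRange(from:in:) wrapper is a hypothetical name; the emoji in the sample shows why offsets have to be counted in UTF-16 code units rather than Characters.

```swift
import Foundation

// Hypothetical wrapper around Foundation's Range(_:in:) (Swift 4+), which maps
// UTF-16-based NSRange offsets to String.Index values, or returns nil when the
// range doesn't fit in the string.
func swiftRange(from nsRange: NSRange, in string: String) -> Range<String.Index>? {
    return Range(nsRange, in: string)
}

// The zombie emoji is one Character but two UTF-16 code units.
let sample = "🧟 <font color=\"red\">brains"

// A whole-string NSRange must be measured in UTF-16 code units...
let wholeRange = NSRange(location: 0, length: sample.utf16.count)

// ...and so must offsets: the tag starts at UTF-16 offset 3
// (two units for the emoji, one for the space), not at Character offset 2.
let tagNSRange = NSRange(location: 3, length: 18)
if let tagRange = swiftRange(from: tagNSRange, in: sample) {
    print(sample[tagRange]) // prints <font color="red">
}
```

Either approach works; the hand-written extension simply makes each step of the conversion visible.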

Your Swift is now chill. Time to head back to processing the text and tag chunks.

Inside parseMarkup(_:) add the following below let chunks (within the do block):

let defaultFont: UIFont = .systemFont(ofSize: UIScreen.main.bounds.size.height / 40)
//1
for chunk in chunks {
    //2
    guard let markupRange = markup.range(from: chunk.range) else { continue }
    //3
    let parts = String(markup[markupRange]).components(separatedBy: "<")
    //4
    let font = UIFont(name: fontName, size: UIScreen.main.bounds.size.height / 40) ?? defaultFont
    //5
    let attrs: [NSAttributedStringKey: Any] = [.foregroundColor: color, .font: font]
    let text = NSMutableAttributedString(string: parts[0], attributes: attrs)
    attrString.append(text)
}

1. Loop through chunks.
2. Get the current NSTextCheckingResult's range, unwrap the Range<String.Index> and proceed with the block as long as it exists.
3. Break chunk into parts separated by "<". The first part contains the magazine text and the second part contains the tag (if it exists).
4. Create a font using fontName, currently "Arial" by default, and a size relative to the device screen. If fontName doesn't produce a valid UIFont, set font to the default font.
5. Create a dictionary of color and font attributes, use parts[0] to create the attributed string, then append that string to the result string.

To process the "font" tag, insert the following after attrString.append(text):

// 1
if parts.count <= 1 {
    continue
}
let tag = parts[1]
//2
if tag.hasPrefix("font") {
    let colorRegex = try NSRegularExpression(pattern: "(?<=color=\")\\w+",
                                             options: NSRegularExpression.Options(rawValue: 0))
    colorRegex.enumerateMatches(in: tag,
                                options: NSRegularExpression.MatchingOptions(rawValue: 0),
                                range: NSMakeRange(0, tag.utf16.count)) { (match, _, _) in
        //3
        if let match = match,
            let range = tag.range(from: match.range) {
            let colorSel = NSSelectorFromString(String(tag[range]) + "Color")
            // Class color methods such as redColor return an unretained (+0) object
            color = UIColor.perform(colorSel).takeUnretainedValue() as? UIColor ?? .black
        }
    }
    //4
    let faceRegex = try NSRegularExpression(pattern: "(?<=face=\")[^\"]+",
                                            options: NSRegularExpression.Options(rawValue: 0))
    faceRegex.enumerateMatches(in: tag,
                               options: NSRegularExpression.MatchingOptions(rawValue: 0),
                               range: NSMakeRange(0, tag.utf16.count)) { (match, _, _) in
        if let match = match,
            let range = tag.range(from: match.range) {
            fontName = String(tag[range])
        }
    }
} //end of font parsing

1. If the chunk has fewer than two parts, there's no tag, so skip to the next chunk. Otherwise, store the second part as tag.
2. If tag starts with "font", create a regex to find the font's "color" value, then use that regex to enumerate through tag's matching "color" values. In this case, there should be only one matching color value.
3. If enumerateMatches(in:options:range:using:) returns a valid match with a valid range in tag, find the indicated value (e.g. <font color="red"> returns "red") and append "Color" to form a UIColor selector. Perform that selector, then set your class's color to the returned color if it exists, or to black if not.
4. Similarly, create a regex to find the font's "face" value. If it finds a match, set fontName to that string.

Great job! Now parseMarkup(_:) can take markup and produce an NSAttributedString for Core Text.
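To see the "opening tags only" rule in isolation, here's a UIKit-free sketch of the same parsing loop. It's an illustration under stated assumptions, not the tutorial's MarkupParser: colors and fonts are tracked as plain strings (the hypothetical StyledRun type records the selector name, such as "redColor", that UIColor.perform(_:) would receive), and empty text chunks are skipped rather than appended. StyledRun, firstMatch(of:in:) and parseRuns(_:) are all hypothetical names.

```swift
import Foundation

// Illustration only: styles are plain strings here, not UIColor/UIFont values.
struct StyledRun: Equatable {
    let text: String
    let colorSelector: String   // e.g. "redColor", the name UIColor.perform(_:) would receive
    let fontName: String
}

// Returns the text matched by `pattern` in `tag`, or nil if there's no match.
func firstMatch(of pattern: String, in tag: String) -> String? {
    guard let regex = try? NSRegularExpression(pattern: pattern, options: []),
          let match = regex.firstMatch(in: tag, options: [],
                                       range: NSRange(location: 0, length: tag.utf16.count)),
          let range = Range(match.range, in: tag) else { return nil }
    return String(tag[range])
}

// Opening tags only: each tag changes the style of all text that follows it.
func parseRuns(_ markup: String) -> [StyledRun] {
    var color = "black"   // same defaults as MarkupParser
    var face = "Arial"
    var runs: [StyledRun] = []
    guard let chunker = try? NSRegularExpression(pattern: "(.*?)(<[^>]+>|\\Z)",
                                                 options: [.caseInsensitive, .dotMatchesLineSeparators]) else {
        return runs
    }
    let whole = NSRange(location: 0, length: markup.utf16.count)
    for chunk in chunker.matches(in: markup, options: [], range: whole) {
        guard let range = Range(chunk.range, in: markup) else { continue }
        let parts = String(markup[range]).components(separatedBy: "<")
        // Text before the tag keeps whatever style the previous tag set.
        if !parts[0].isEmpty {
            runs.append(StyledRun(text: parts[0], colorSelector: color + "Color", fontName: face))
        }
        guard parts.count > 1 else { continue }
        let tag = parts[1]
        if tag.hasPrefix("font") {
            if let newColor = firstMatch(of: "(?<=color=\")\\w+", in: tag) { color = newColor }
            if let newFace = firstMatch(of: "(?<=face=\")[^\"]+", in: tag) { face = newFace }
        }
    }
    return runs
}

let runs = parseRuns("These are <font color=\"red\">red<font color=\"black\"> and " +
                     "<font color=\"blue\" face=\"Zapfino\">blue <font color=\"black\">words.")
for run in runs {
    print(run.colorSelector, run.fontName, "\"\(run.text)\"")
}
```

Notice that the face set by one tag persists until another tag changes it, just as fontName does in MarkupParser, because only opening tags exist.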

It's time to feed your app to some zombies! I mean, feed some zombies to your app... zombies.txt, that is. ;]

It's actually the job of a UIView to display content given to it, not load content. Open CTView.swift and add the following above draw(_:):

// MARK: - Properties
var attrString: NSAttributedString!

// MARK: - Internal
func importAttrString(_ attrString: NSAttributedString) {
    self.attrString = attrString
}

Next, delete let attrString = NSAttributedString(string: "Hello World") from draw(_:).

Here you've created an instance variable to hold an attributed string and a method to set it from elsewhere in your app.

Next, open ViewController.swift and add the following to viewDidLoad():

// 1
guard let file = Bundle.main.path(forResource: "zombies", ofType: "txt") else { return }
do {
    let text = try String(contentsOfFile: file, encoding: .utf8)
    // 2
    let parser = MarkupParser()
    parser.parseMarkup(text)
    (view as? CTView)?.importAttrString(parser.attrString)
} catch _ {
}

Let’s go over this step-by-step.

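1. Load the text from the zombies.txt file into a String.
2. Create a new parser, feed in the text, then pass the returned attributed string to ViewController's CTView.

Build and run the app!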

That's awesome! Thanks to about 50 lines of parsing, you can simply use a text file to hold the contents of your magazine app.

If you thought a monthly magazine of Zombie news could possibly fit onto one measly page, you'd be very wrong! Luckily, Core Text becomes particularly useful when laying out columns, since CTFrameGetVisibleStringRange can tell you how much text will fit into a given frame. This means you can create a column, then once it's full, create another column, and so on.

For this app, you'll have to print columns, then pages, then a whole magazine lest you offend the undead, so... time to turn your CTView subclass into a UIScrollView.

Open CTView.swift and change the class CTView line to:

class CTView: UIScrollView {

See that, zombies? The app can now support an eternity of undead adventures! Yep: with one line, scrolling and paging are now available.

Up until now, you've created your framesetter and frame inside draw(_:), but since you'll have many columns with different formatting, it's better to create individual column instances instead.

Create a new Cocoa Touch Class file named CTColumnView subclassing UIView.

Open CTColumnView.swift and add the following starter code:

import UIKit
import CoreText

class CTColumnView: UIView {

    // MARK: - Properties
    var ctFrame: CTFrame!

    // MARK: - Initializers
    required init(coder aDecoder: NSCoder) {
        super.init(coder: aDecoder)!
    }

    required init(frame: CGRect, ctframe: CTFrame) {
        super.init(frame: frame)
        self.ctFrame = ctframe
        backgroundColor = .white
    }

    // MARK: - Life Cycle
    override func draw(_ rect: CGRect) {
        guard let context = UIGraphicsGetCurrentContext() else { return }
        context.textMatrix = .identity
        context.translateBy(x: 0, y: bounds.size.height)
        context.scaleBy(x: 1.0, y: -1.0)
        CTFrameDraw(ctFrame, context)
    }
}

This code renders a CTFrame just as you'd originally done in CTView. The custom initializer, init(frame:ctframe:), sets:

1. The view's frame.
2. The CTFrame to draw into the context.
3. The view's background color to white.

Next, create a new Swift file named CTSettings.swift, which will hold your column settings.

Replace the contents of CTSettings.swift with the following:

import UIKit
import Foundation

class CTSettings {
    //1
    // MARK: - Properties
    let margin: CGFloat = 20
    var columnsPerPage: CGFloat!
    var pageRect: CGRect!
    var columnRect: CGRect!

    // MARK: - Initializers
    init() {
        //2
        columnsPerPage = UIDevice.current.userInterfaceIdiom == .phone ? 1 : 2
        //3
        pageRect = UIScreen.main.bounds.insetBy(dx: margin, dy: margin)
        //4
        columnRect = CGRect(x: 0,
                            y: 0,
                            width: pageRect.width / columnsPerPage,
                            height: pageRect.height).insetBy(dx: margin, dy: margin)
    }
}

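1. These properties determine the page margin (20 points in this tutorial), the number of columns per page, the frame of each page containing the columns, and the frame of each column.
2. Show one column per page on iPhone and two on iPad, so the number of columns suits each screen size.
3. Inset the screen's entire bounds by the margin to calculate pageRect.
4. Divide pageRect's width by the number of columns per page and inset that new frame with the margin for columnRect.

Open CTView.swift and replace its entire contents with the following: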

import UIKit
import CoreText

class CTView: UIScrollView {

    //1
    func buildFrames(withAttrString attrString: NSAttributedString,
                     andImages images: [[String: Any]]) {
        //2
        isPagingEnabled = true
        //3
        let framesetter = CTFramesetterCreateWithAttributedString(attrString as CFAttributedString)
        //4
        var pageView = UIView()
        var textPos = 0
        var columnIndex: CGFloat = 0
        var pageIndex: CGFloat = 0
        let settings = CTSettings()
        //5
        while textPos < attrString.length {
        }
    }
}

1. buildFrames(withAttrString:andImages:) will create CTColumnViews, then add them to the scroll view.
2. Enable paging so that, whenever the user stops scrolling, the scroll view snaps into place with exactly one page showing.
3. The CTFramesetter framesetter will create each column's CTFrame of attributed text.
4. The UIView pageView will serve as a container for each page's column subviews; textPos will keep track of the next character; columnIndex will keep track of the current column; pageIndex will keep track of the current page; and settings gives you access to the app's margin size, columns per page, page frame and column frame settings.
5. Loop through attrString and lay out the text column by column, until the current text position reaches the end.

Time to start looping through attrString. Add the following inside the while textPos < attrString.length loop:

//1
if columnIndex.truncatingRemainder(dividingBy: settings.columnsPerPage) == 0 {
    columnIndex = 0
    pageView = UIView(frame: settings.pageRect.offsetBy(dx: pageIndex * bounds.width, dy: 0))
    addSubview(pageView)
    //2
    pageIndex += 1
}
//3
let columnXOrigin = pageView.frame.size.width / settings.columnsPerPage
let columnOffset = columnIndex * columnXOrigin
let columnFrame = settings.columnRect.offsetBy(dx: columnOffset, dy: 0)

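1. If the column index divided by the number of columns per page leaves no remainder, the column is the first on its page, so reset columnIndex to 0 and create a new page view to hold the columns. To set its frame, take the margined settings.pageRect and offset its x origin by the current page index multiplied by the width of the screen; so within the paging scroll view, each magazine page will be to the right of the previous one.
2. Increment the pageIndex.
3. Divide pageView's width by settings.columnsPerPage to get columnXOrigin, the horizontal distance between neighboring columns; multiply it by the column index to get the column offset; then create the frame of the current column by taking the standard columnRect and offsetting its x origin by columnOffset.

Next, add the following below the columnFrame initialization: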

//1
let path = CGMutablePath()
path.addRect(CGRect(origin: .zero, size: columnFrame.size))
let ctframe = CTFramesetterCreateFrame(framesetter, CFRangeMake(textPos, 0), path, nil)
//2
let column = CTColumnView(frame: columnFrame, ctframe: ctframe)
pageView.addSubview(column)
//3
let frameRange = CTFrameGetVisibleStringRange(ctframe)
textPos += frameRange.length
//4
columnIndex += 1

1. Create a CGMutablePath the size of the column, then, starting from textPos, render a new CTFrame with as much text as can fit.
2. Create a CTColumnView with a CGRect columnFrame and CTFrame ctframe, then add the column to pageView.
3. Use CTFrameGetVisibleStringRange(_:) to calculate the range of text contained within the column, then increment textPos by that range length to reflect the current text position.
4. Increment columnIndex before looping to the next column.

Lastly, set the scroll view's content size after the loop:

contentSize = CGSize(width: pageIndex * bounds.size.width,
                     height: bounds.size.height)

By setting the content size to the screen width times the number of pages, the zombies can now scroll through to the end.
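The column and page arithmetic in buildFrames(withAttrString:andImages:) can be checked without a device. The following sketch reproduces CTSettings and the while loop with plain Double values; it assumes every column holds a fixed number of characters (charsPerColumn), whereas the real code asks CTFrameGetVisibleStringRange how much text actually fit. Rect, ColumnLayout and layoutColumns(...) are hypothetical names.

```swift
// Plain-Double stand-ins for the CGRect-based layout (hypothetical types).
struct Rect: Equatable {
    var x, y, width, height: Double
}

struct ColumnLayout: Equatable {
    let page: Int            // zero-based page number
    let frame: Rect          // column frame relative to its page view
    let textRange: Range<Int>
}

func layoutColumns(textLength: Int, charsPerColumn: Int,
                   screenWidth: Double, screenHeight: Double,
                   columnsPerPage: Int, margin: Double = 20)
    -> (columns: [ColumnLayout], contentWidth: Double) {
    // A column that fits no text would never advance textPos.
    precondition(charsPerColumn > 0, "each column must fit at least one character")

    // Like CTSettings: inset the screen by the margin for the page, divide the
    // page width by the column count, then inset that frame for the column.
    let pageWidth = screenWidth - 2 * margin
    let pageHeight = screenHeight - 2 * margin
    let columnWidth = pageWidth / Double(columnsPerPage)
    let columnRect = Rect(x: margin, y: margin,
                          width: columnWidth - 2 * margin,
                          height: pageHeight - 2 * margin)

    var columns: [ColumnLayout] = []
    var textPos = 0, columnIndex = 0, pageIndex = 0
    while textPos < textLength {
        // The first column of each page starts a new page.
        if columnIndex % columnsPerPage == 0 {
            columnIndex = 0
            pageIndex += 1
        }
        // Shift the standard column frame right by one column width per index.
        var frame = columnRect
        frame.x += Double(columnIndex) * columnWidth
        // Stand-in for CTFrameGetVisibleStringRange: a fixed amount of text fits.
        let end = min(textPos + charsPerColumn, textLength)
        columns.append(ColumnLayout(page: pageIndex - 1, frame: frame, textRange: textPos..<end))
        textPos = end
        columnIndex += 1
    }
    // One screen width per page, as in the contentSize assignment.
    return (columns, Double(pageIndex) * screenWidth)
}

let iPad = layoutColumns(textLength: 1000, charsPerColumn: 300,
                         screenWidth: 768, screenHeight: 1024, columnsPerPage: 2)
for column in iPad.columns {
    print("page \(column.page): x = \(column.frame.x), text \(column.textRange)")
}
print("content width:", iPad.contentWidth)
```

For example, a 768 x 1024-point iPad screen with two columns gives 324-point-wide columns whose x origins sit at 20 and 384 points within each page, and four 300-character columns of a 1,000-character text fill two pages, for a content width of 1,536 points. The precondition guards against a column that fits no text, which would otherwise leave textPos stuck and loop forever.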

Open ViewController.swift, and replace

(view as? CTView)?.importAttrString(parser.attrString)

with the following:

(view as? CTView)?.buildFrames(withAttrString: parser.attrString, andImages: parser.images)

Build and run the app on an iPad. Check that double column layout! Drag right and left to go between pages. Lookin' good. :]

You have columns and formatted text, but you're missing images. Drawing images with Core Text isn't so straightforward (it's a text framework, after all), but with the help of the markup parser you've already created, adding images shouldn't be too bad.

Although Core Text can't draw images, as a layout engine, it can leave empty spaces to make room for images. By setting a CTRun's delegate, you can determine that CTRun's ascent space, descent space and width. Like so:

When Core Text reaches a CTRun with a CTRunDelegate it asks the delegate, "How much space should I leave for this chunk of data?" By setting these properties in the CTRunDelegate, you can leave holes in the text for your images.

First add support for the "img" tag. Open MarkupParser.swift and find "} //end of font parsing". Add the following immediately after:

//1
else if tag.hasPrefix("img") {
    var filename: String = ""
    let imageRegex = try NSRegularExpression(pattern: "(?<=src=\")[^\"]+",
                                             options: NSRegularExpression.Options(rawValue: 0))
    imageRegex.enumerateMatches(in: tag,
                                options: NSRegularExpression.MatchingOptions(rawValue: 0),
                                range: NSMakeRange(0, tag.utf16.count)) { (match, _, _) in
        if let match = match,
            let range = tag.range(from: match.range) {
            filename = String(tag[range])
        }
    }
    //2
    let settings = CTSettings()
    var width: CGFloat = settings.columnRect.width
    var height: CGFloat = 0
    if let image = UIImage(named: filename) {
        height = width * (image.size.height / image.size.width)
        // 3
        if height > settings.columnRect.height - font.lineHeight {
            height = settings.columnRect.height - font.lineHeight
            width = height * (image.size.width / image.size.height)
        }
    }
}

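1. If tag starts with "img", use a regex to search for the image's "src" value, i.e. the filename.
2. Set the image width to the width of the column and set its height so the image keeps its aspect ratio.
3. If the image is too tall for the column, set its height to fit the column and reduce the width to maintain the aspect ratio. The height is capped at settings.columnRect.height - font.lineHeight, leaving room for a line of text in the same column.

Next, add the following immediately after the if let image block: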

//1
images += [["width": NSNumber(value: Float(width)),
            "height": NSNumber(value: Float(height)),
            "filename": filename,
            "location": NSNumber(value: attrString.length)]]
//2
struct RunStruct {
    let ascent: CGFloat
    let descent: CGFloat
    let width: CGFloat
}
let extentBuffer = UnsafeMutablePointer<RunStruct>.allocate(capacity: 1)
extentBuffer.initialize(to: RunStruct(ascent: height, descent: 0, width: width))
//3
var callbacks = CTRunDelegateCallbacks(version: kCTRunDelegateVersion1, dealloc: { (pointer) in
}, getAscent: { (pointer) -> CGFloat in
    let d = pointer.assumingMemoryBound(to: RunStruct.self)
    return d.pointee.ascent
}, getDescent: { (pointer) -> CGFloat in
    let d = pointer.assumingMemoryBound(to: RunStruct.self)
    return d.pointee.descent
}, getWidth: { (pointer) -> CGFloat in
    let d = pointer.assumingMemoryBound(to: RunStruct.self)
    return d.pointee.width
})
//4
let delegate = CTRunDelegateCreate(&callbacks, extentBuffer)
//5
let attrDictionaryDelegate = [(kCTRunDelegateAttributeName as NSAttributedStringKey): (delegate as Any)]
attrString.append(NSAttributedString(string: " ", attributes: attrDictionaryDelegate))

1. Append a Dictionary containing the image's size, filename and text location to images.
2. Define RunStruct to hold the properties that will delineate the empty spaces. Then initialize a pointer to contain a RunStruct with an ascent equal to the image height and a width property equal to the image width.
3. Create a CTRunDelegateCallbacks that returns the ascent, descent and width properties belonging to pointers of type RunStruct.
4. Use CTRunDelegateCreate to create a delegate instance binding the callbacks and the data parameter together.
5. Create an attributes dictionary containing the delegate instance, then append a single space to attrString, which holds the position and sizing information for the hole in the text.

Now that MarkupParser is handling "img" tags, you'll need to adjust CTColumnView and CTView to render them.
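The sizing rule from the "img" branch is plain arithmetic, so it can be sketched on its own before you wire up the views. This version assumes Double values in place of CGFloat and UIImage sizes, and imageSize(...) is a hypothetical helper: it fills the column width, keeps the image's aspect ratio, and caps the height at the column height minus one line height. The resulting height is what RunStruct reports as the run's ascent, with a descent of 0 and the resulting width as the run's width.

```swift
// Hypothetical helper mirroring the "img" branch, using Double instead of CGFloat.
func imageSize(imageWidth: Double, imageHeight: Double,
               columnWidth: Double, columnHeight: Double,
               lineHeight: Double) -> (width: Double, height: Double) {
    // Start at full column width and keep the aspect ratio.
    var width = columnWidth
    var height = width * (imageHeight / imageWidth)
    // Too tall? Cap the height so a line of text still fits, and shrink the width to match.
    let maxHeight = columnHeight - lineHeight
    if height > maxHeight {
        height = maxHeight
        width = height * (imageWidth / imageHeight)
    }
    return (width, height)
}

// Assumed values: a 324 x 944-point column (two columns on a 768 x 1024 iPad)
// and a 24-point line height.
let landscape = imageSize(imageWidth: 800, imageHeight: 400,
                          columnWidth: 324, columnHeight: 944, lineHeight: 24)
let portrait = imageSize(imageWidth: 400, imageHeight: 1600,
                         columnWidth: 324, columnHeight: 944, lineHeight: 24)
print(landscape, portrait)
```

The wide image keeps the full 324-point column width at half that height, while the tall one is capped at 920 points high and narrowed to 230 points to preserve its 1:4 ratio.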

Open CTColumnView.swift. Add the following below var ctFrame:CTFrame! to hold the column's images and frames:

var images: [(image: UIImage, frame: CGRect)] = []

Next, add the following to the bottom of draw(_:):

for imageData in images {
    if let image = imageData.image.cgImage {
        let imgBounds = imageData.frame
        context.draw(image, in: imgBounds)
    }
}

Here you loop through each image and draw it into the context within its proper frame.

Next, open CTView.swift and add the following property to the top of the class:

// MARK: - Properties
var imageIndex: Int!

imageIndex will keep track of the current image index as you draw the CTColumnViews.

Next, add the following to the top of buildFrames(withAttrString:andImages:):

imageIndex = 0

This marks the first element of the images array.

Next, add the following method, attachImagesWithFrame(_:ctframe:margin:columnView:), below buildFrames(withAttrString:andImages:):

func attachImagesWithFrame(_ images: [[String: Any]],
                           ctframe: CTFrame,
                           margin: CGFloat,
                           columnView: CTColumnView) {
    //1
    let lines = CTFrameGetLines(ctframe) as NSArray
    //2
    var origins = [CGPoint](repeating: .zero, count: lines.count)
    CTFrameGetLineOrigins(ctframe, CFRangeMake(0, 0), &origins)
    //3
    var nextImage = images[imageIndex]
    guard var imgLocation = nextImage["location"] as? Int else {
        return
    }
    //4
    for lineIndex in 0..<lines.count {
        let line = lines[lineIndex] as! CTLine
        //5
        if let glyphRuns = CTLineGetGlyphRuns(line) as? [CTRun],
            let imageFilename = nextImage["filename"] as? String,
            let img = UIImage(named: imageFilename) {
            for run in glyphRuns {
            }
        }
    }
}

1. Get an array of ctframe's CTLine objects.
2. Use CTFrameGetLineOrigins to copy ctframe's line origins into the origins array. By setting a range with a length of 0, CTFrameGetLineOrigins will know to traverse the entire CTFrame.
3. Set nextImage to contain the attributed data of the current image. If nextImage contains the image's location, unwrap it and continue; otherwise, return early.
4. Loop through the text's lines.
5. If the line's glyph runs, the image filename and the image itself all exist, loop through the glyph runs of that line.

Next, add the following inside the glyph run for-loop:

// 1
let runRange = CTRunGetStringRange(run)
if runRange.location > imgLocation || runRange.location + runRange.length <= imgLocation {
    continue
}
//2
var imgBounds: CGRect = .zero
var ascent: CGFloat = 0
imgBounds.size.width = CGFloat(CTRunGetTypographicBounds(run, CFRangeMake(0, 0), &ascent, nil, nil))
imgBounds.size.height = ascent
//3
let xOffset = CTLineGetOffsetForStringIndex(line, runRange.location, nil)
imgBounds.origin.x = origins[lineIndex].x + xOffset
imgBounds.origin.y = origins[lineIndex].y
//4
columnView.images += [(image: img, frame: imgBounds)]
//5
imageIndex! += 1
if imageIndex < images.count {
    nextImage = images[imageIndex]
    // Read the location the same way as the guard above, without AnyObject lookup
    if let location = nextImage["location"] as? Int {
        imgLocation = location
    }
}

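1. If the range of the present run does not contain the next image, skip the rest of the loop. Otherwise, render the image here.
2. Calculate the image width using CTRunGetTypographicBounds and set the height to the found ascent.
3. Get the line's x offset with CTLineGetOffsetForStringIndex, then add it to the line origin to get the imgBounds' origin.
4. Add the image and its frame to the current CTColumnView.
5. Increment the image index. If there's an image at images[imageIndex], update nextImage and imgLocation so they refer to that next image.

OK! Great! Almost there, just one final step.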

Add the following right above pageView.addSubview(column) inside buildFrames(withAttrString:andImages:) to attach images if they exist:

if images.count > imageIndex {
    attachImagesWithFrame(images, ctframe: ctframe, margin: settings.margin, columnView: column)
}

Build and run on both iPhone and iPad!

Congrats! As thanks for all that hard work, the zombies have spared your brains! :]

Check out the finished project here.

As mentioned in the intro, Text Kit can usually replace Core Text, so try writing this same tutorial with Text Kit to see how it compares. That said, this Core Text lesson won't be in vain! Text Kit offers toll-free bridging to Core Text, so you can easily cast between the frameworks as needed.

Have any questions, comments or suggestions? Join in the forum discussion below!

The post Core Text Tutorial for iOS: Making a Magazine App appeared first on Ray Wenderlich.

In this screencast, you'll learn how to use the Charts framework to control the appearance of a line chart and bar chart.

The post Screencast: Charts: Format & Style appeared first on Ray Wenderlich.

Recently, I updated my Beginning Realm on iOS course, which introduces Realm, a popular cross-platform mobile database.

If you’re ready to use Realm in your real-world projects, I’m excited to announce that an update to my course, Intermediate Realm on iOS, is available today! This course is fully up-to-date with Swift 3, Xcode 8, and iOS 10.

This 7-part course covers a number of essential Realm features for production apps such as bundled, multiple, and encrypted Realm files, as well as schema migrations across several versions of published apps.

Let’s see what’s inside!

Video 1: Introduction

In this video, you will learn what topics will be covered in the Intermediate Realm on iOS video course.

Video 2: Bundled Data

Learn how to bundle with your app a Realm file with initial data for your users to use immediately upon the first launch.

Video 3: Multiple Realm Files

Split your app’s data persistence needs across several files to isolate data or just use different Realm features per file.

Video 4: Encrypted Realms

Sensitive data like medical records or financial information should be protected well – Realm makes that a breeze with built-in encryption.

Video 5: Migrations Part 1

Your app changes from version to version and so does your database – learn how to migrate Realm data to newer schema versions.

Video 6: Migrations Part 2

Learn how to handle even more complex migrations across several app versions.

Video 7: Conclusion

In this course’s gripping conclusion you will look back at what you’ve learned and see where to go next.

Want to check out the course? You can watch the introduction for free!

The rest of the course is for raywenderlich.com subscribers only. Here’s how you can get access:

There’s much more in store for raywenderlich.com subscribers – if you’re curious, you can check out our full schedule of upcoming courses.

I hope you enjoy our new course, and stay tuned for many more new courses and updates to come!

The post Updated Course: Intermediate Realm on iOS appeared first on Ray Wenderlich.

Which books will we update for Swift 4?

The answer is: yes!

And on top of that, we’ll be releasing these books this year as free updates for existing PDF customers!

Here are the books we’ll be updating:

That’s 9 free updates in one year! You won’t find that kind of value anywhere else.

If you purchase any of these PDF books from our online store, you’ll get the existing iOS 10/Swift 3/Xcode 8 edition, but you’ll automatically receive a free update to the iOS 11/Swift 4/Xcode 9 edition once it’s available.

We’re targeting Fall 2017 for the release of the new editions of the books, so stay tuned for updates.

While you’re waiting, I suggest you check out some of the great Swift 4 and iOS 11 material we’ve already released:

Happy reading!

The post Will raywenderlich.com Books be Updated for Swift 4 and iOS 11? appeared first on Ray Wenderlich.

iOS 10’s new Speech Recognition API lets your app transcribe live or pre-recorded audio. It leverages the same speech recognition engine used by Siri and Keyboard Dictation, but provides much more control and improved access.

The engine is fast and accurate and can currently interpret over 50 languages and dialects. It even adapts results to the user using information about their contacts, installed apps, media and various other pieces of data.

Audio fed to a recognizer is transcribed in near real time, and results are provided incrementally. This lets you react to voice input very quickly, regardless of context, unlike Keyboard Dictation, which is tied to a specific input object.

Speech Recognizer creates some truly amazing possibilities in your apps. For example, you could create an app that takes a photo when you say “cheese”. You could also create an app that could automatically transcribe audio from Simpsons episodes so you could search for your favorite lines.

In this speech recognition tutorial for iOS, you’ll build an app called Gangstribe that will transcribe some pretty hardcore (hilarious) gangster rap recordings using speech recognition. It will also get users in the mood to record their own rap hits with a live audio transcriber that draws emojis on their faces based on what they say. :]

The section on live recordings will use AVAudioEngine. If you haven’t used AVAudioEngine before, you may want to familiarize yourself with that framework first. The 2014 WWDC session AVAudioEngine in Practice is a great intro to this, and can be found at apple.co/28tATc1. This session video explains many of the systems and terminology we’ll use in this speech recognition tutorial for iOS.

The Speech Recognition framework doesn’t work in the simulator, so be sure to use a real device with iOS 10 (or later) for this speech recognition tutorial for iOS.

Download the sample project here. Open Gangstribe.xcodeproj in the starter project folder for this speech recognition tutorial for iOS. Select the project file, the Gangstribe target and then the General tab. Choose your development team from the drop-down.

Connect an iOS 10 (or later) device and select it as your run destination in Xcode. Build and run and you’ll see the bones of the app.

From the master controller, you can select a song. The detail controller will then let you play the audio file, recited by none other than our very own DJ Sammy D!

The transcribe button is not currently operational, but you’ll use this later to kick off a transcription of the selected recording.

Tap Face Replace on the right of the navigation bar to preview the live transcription feature. You’ll be prompted for permission to access the camera; accept this, as you’ll need it for this feature.

Currently if you select an emoji with your face in frame, it will place the emoji on your face. Later, you’ll trigger this action with speech.

Take a moment to familiarize yourself with the starter project. Here are some highlights of the classes and groups you’ll work with in this tutorial:

RecordingViewController.swift: You’ll update handleTranscribeButtonTapped(_:) to have it kick off file transcription.

You’ll start this tutorial by making the Transcribe button work for pre-recorded audio. The button will feed the audio file to Speech Recognizer and present the results under the player.

The latter half of this tutorial will focus on the Face Replace feature. You’ll set up an audio engine for recording, tap into that input, and transcribe the audio as it arrives. You’ll display the live transcription and ultimately use it to trigger placing emojis over the user’s face.

You can’t just dive right in and start voice commanding unicorns onto your face though; you’ll need to understand a few basics first.

There are four primary actors involved in a speech transcription:

SFSpeechRecognizer is the primary controller in the framework. Its most important job is to generate recognition tasks and return results. It also handles authorization and configures locales.

SFSpeechRecognitionRequest is the base class for recognition requests. Its job is to point the SFSpeechRecognizer to an audio source from which transcription should occur. There are two concrete types: SFSpeechURLRecognitionRequest, for reading from a file, and SFSpeechAudioBufferRecognitionRequest, for reading from a buffer.

SFSpeechRecognitionTask objects are created when a request is kicked off by the recognizer. They are used to track progress of a transcription or cancel it.

SFSpeechRecognitionResult objects contain the transcription of the audio recognized so far. When partial results are reported, a new result typically arrives as each additional word is recognized.

Here’s how these objects interact during a basic Speech Recognizer transcription:

The code required to complete a transcription is quite simple. Given an audio file at url, the following code transcribes the file and prints the results:

import Speech

let request = SFSpeechURLRecognitionRequest(url: url)
SFSpeechRecognizer()?.recognitionTask(with: request) { (result, _) in
  // Called repeatedly as partial results arrive
  if let transcription = result?.bestTranscription {
    print(transcription.formattedString)
  }
}

SFSpeechRecognizer kicks off an SFSpeechRecognitionTask for the SFSpeechURLRecognitionRequest using recognitionTask(with:resultHandler:). It returns partial results as they arrive via the resultHandler. This code prints the formatted string value of the bestTranscription, which is a cumulative transcription result adjusted at each iteration.
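By default, a recognition request reports partial results, which is why the result handler above can run many times for a single file. If you only care about the finished text, SFSpeechRecognitionRequest exposes a shouldReportPartialResults property you can turn off. The helper below is a minimal sketch; makeFinalOnlyRequest(url:) is a hypothetical name, not part of the starter project.

```swift
import Foundation
import Speech

// Hypothetical helper: builds a file request that skips intermediate results.
func makeFinalOnlyRequest(url: URL) -> SFSpeechURLRecognitionRequest {
  let request = SFSpeechURLRecognitionRequest(url: url)
  // Partial results are reported by default; turning this off means the
  // result handler isn't called with intermediate transcriptions.
  request.shouldReportPartialResults = false
  return request
}

// Usage, assuming `url` points at an audio file:
// SFSpeechRecognizer()?.recognitionTask(with: makeFinalOnlyRequest(url: url)) { result, _ in
//   if let result = result, result.isFinal {
//     print(result.bestTranscription.formattedString)
//   }
// }
```

The tutorial’s own code keeps partial results on and simply waits for isFinal, which works just as well; turning them off may save the framework some work when nothing displays the intermediate text.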

You’ll start by implementing a file transcription very similar to this.

Before you start reading and sending chunks of the user’s audio off to a remote server, it would be polite to ask permission. In fact, considering their commitment to user privacy, it should come as no surprise that Apple requires this! :]

You’ll kick off the authorization process when the user taps the Transcribe button in the detail controller.

Open RecordingViewController.swift and add the following to the import statements at the top:

import Speech

This imports the Speech Recognition API.

Add the following to handleTranscribeButtonTapped(_:):

SFSpeechRecognizer.requestAuthorization {
  [unowned self] (authStatus) in
  switch authStatus {
  case .authorized:
    if let recording = self.recording {
      //TODO: Kick off the transcription
    }
  case .denied:
    print("Speech recognition authorization denied")
  case .restricted:
    print("Not available on this device")
  case .notDetermined:
    print("Not determined")
  }
}

You call the SFSpeechRecognizer type method requestAuthorization(_:) to prompt the user for authorization and handle their response in a completion closure.

In the closure, you look at the authStatus and print error messages for all of the exception cases. For authorized, you unwrap the selected recording for later transcription.
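The authorization handler isn’t guaranteed to run on the main queue, and once it starts a transcription it will touch the UI, so it’s worth hopping back to the main queue first. Here’s a minimal sketch that factors the status handling out of the view controller; authorizationMessage(for:) and requestSpeechAuthorization(completion:) are hypothetical names, and the code assumes Swift 5 or later for @unknown default.

```swift
import Foundation
import Speech

// Maps an authorization status to the message printed above;
// returns nil when speech recognition is authorized.
func authorizationMessage(for status: SFSpeechRecognizerAuthorizationStatus) -> String? {
  switch status {
  case .authorized:
    return nil
  case .denied:
    return "Speech recognition authorization denied"
  case .restricted:
    return "Not available on this device"
  case .notDetermined:
    return "Not determined"
  @unknown default:
    return "Unknown authorization status"
  }
}

// Hypothetical wrapper: requests authorization, then calls back on the
// main queue so the completion can safely update the UI.
func requestSpeechAuthorization(completion: @escaping (Bool) -> Void) {
  SFSpeechRecognizer.requestAuthorization { status in
    OperationQueue.main.addOperation {
      if let message = authorizationMessage(for: status) {
        print(message)
      }
      completion(status == .authorized)
    }
  }
}
```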

Next, you have to provide a usage description that’s displayed when permission is requested. Open Info.plist and add the key Privacy - Speech Recognition Usage Description with the String value “I want to write down everything you say”:

Build and run, select a song from the master controller, and tap Transcribe. You’ll see a permission request appear with the text you provided. Select OK to provide Gangstribe the proper permission:

Of course nothing happens after you provide authorization — you haven’t yet set up speech recognition! It’s now time to test the limits of the framework with DJ Sammy D’s renditions of popular rap music.

Back in RecordingViewController.swift, find the RecordingViewController extension at the bottom of the file. Add the following method to transcribe a file found at the passed url:

fileprivate func transcribeFile(url: URL) {

  // 1
  guard let recognizer = SFSpeechRecognizer() else {
    print("Speech recognition not available for specified locale")
    return
  }
  if !recognizer.isAvailable {
    print("Speech recognition not currently available")
    return
  }

  // 2
  updateUIForTranscriptionInProgress()
  let request = SFSpeechURLRecognitionRequest(url: url)

  // 3
  recognizer.recognitionTask(with: request) {
    [unowned self] (result, error) in
    guard let result = result else {
      print("There was an error transcribing that file")
      return
    }

    // 4
    if result.isFinal {
      self.updateUIWithCompletedTranscription(
        result.bestTranscription.formattedString)
    }
  }
}

Here are the details on how this transcribes the passed file:

1. The SFSpeechRecognizer initializer provides a recognizer for the device’s locale, returning nil if there is no such recognizer. isAvailable checks whether the recognizer is ready, failing in such cases as missing network connectivity.

2. updateUIForTranscriptionInProgress() is provided with the starter project to disable the Transcribe button and start an activity indicator animation while the transcription is in progress. An SFSpeechURLRecognitionRequest is created for the file found at url, creating an interface to the transcription engine for that recording.

3. recognitionTask(with:resultHandler:) processes the transcription request, repeatedly triggering a completion closure. The passed result is unwrapped in a guard, which prints an error on failure.

4. The isFinal property is true when the entire transcription is complete. updateUIWithCompletedTranscription(_:) stops the activity indicator, re-enables the button and displays the passed string in a text view. bestTranscription contains the transcription Speech Recognizer is most confident is accurate, and formattedString provides it in String format for display in the text view.

Where there’s a bestTranscription, there can of course be lesser ones. SFSpeechRecognitionResult has a transcriptions property that contains an array of transcriptions sorted in order of confidence. As you see with Siri and Keyboard Dictation, a transcription can change as more context arrives, and this array illustrates that type of progression.

Now you need to call this new code when the user taps the Transcribe button. In handleTranscribeButtonTapped(_:) replace //TODO: Kick off the transcription with the following:

self.transcribeFile(url: recording.audio)

After successful authorization, the button handler now calls transcribeFile(url:) with the URL of the currently selected recording.

Build and run, select Gangsta’s Paradise, and then tap the Transcribe button. You’ll see the activity indicator for a while, and then the text view will eventually populate with the transcription:

The results aren’t bad, considering Coolio doesn’t seem to own a copy of Webster’s Dictionary. Depending on the locale of your device, there could be another reason things are a bit off. The above screenshot was a transcription completed on a device configured for US English, while DJ Sammy D has a slightly different dialect.

But you don’t need to book a flight overseas to fix this. When creating a recognizer, you have the option of specifying a locale — that’s what you’ll do next.

Still in RecordingViewController.swift, find transcribeFile(url:) and replace the following two lines:

fileprivate func transcribeFile(url: URL) {
  guard let recognizer = SFSpeechRecognizer() else {

with the code below:

fileprivate func transcribeFile(url: URL, locale: Locale?) {
  let locale = locale ?? Locale.current
  guard let recognizer = SFSpeechRecognizer(locale: locale) else {

You’ve added an optional Locale parameter that specifies the locale of the file being transcribed. If locale is nil, you fall back to the device’s current locale. You then initialize the SFSpeechRecognizer with this locale.

Now to modify where this is called. Find handleTranscribeButtonTapped(_:) and replace the transcribeFile(url:) call with the following:

self.transcribeFile(url: recording.audio, locale: recording.locale)

You use the new method signature, passing the locale stored with the recording object.

The locale for each recording is defined in the recordingNames array up top. Each element contains the song name, artist, audio file name and locale. You can find information on how locale identifiers are derived in Apple’s Internationalization and Localization Guide at apple.co/1HVWDQa.
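To make the shape of that data concrete, here is a hypothetical model built from tuples in the (song name, artist, audio file name, locale identifier) form described above. RecordingSketch and makeRecordings(from:in:) are illustrative names only; the starter project’s own recording type exposes at least audio and locale, but its exact definition may differ.

```swift
import Foundation

// Hypothetical stand-in for the starter project's recording model.
struct RecordingSketch {
  let songName: String
  let artist: String
  let audio: URL      // full URL of the audio file
  let locale: Locale  // locale used to pick a recognizer
}

// Builds models from (song name, artist, audio file name, locale identifier) tuples.
func makeRecordings(from names: [(String, String, String, String)],
                    in directory: URL) -> [RecordingSketch] {
  return names.map { entry -> RecordingSketch in
    let (song, artist, fileName, identifier) = entry
    return RecordingSketch(songName: song,
                           artist: artist,
                           audio: directory.appendingPathComponent(fileName),
                           locale: Locale(identifier: identifier))
  }
}
```

With this shape, passing recording.locale to transcribeFile(url:locale:) hands the recognizer exactly the identifier stored alongside each song.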

Build and run, and complete another transcription on Gangsta’s Paradise. Assuming your first run was with a locale other than en_GB, you should see some differences.

You can probably understand different dialects of the languages you speak pretty well, but languages you don’t speak are another matter. The Speech Recognition engine understands over 50 different languages and dialects, so it likely has you beat here.
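Not every locale makes the cut, though: SFSpeechRecognizer(locale:) returns nil for a locale it can’t handle, which is why transcribeFile(url:locale:) keeps its guard. If you’d rather check up front, SFSpeechRecognizer.supportedLocales() returns the set of supported locales. The helper below is a hypothetical, Foundation-only sketch of the same locale ?? Locale.current fallback plus that check; normalizing hyphens to underscores is an assumption meant to treat identifiers such as en-GB and en_GB as equal.

```swift
import Foundation

// Treats "en-GB" and "en_GB" as the same identifier.
func normalizedIdentifier(of locale: Locale) -> String {
  return locale.identifier.replacingOccurrences(of: "-", with: "_")
}

// Falls back to the device's locale, then returns nil if that locale
// isn't in the supported set.
func resolveLocale(_ requested: Locale?, supported: Set<Locale>) -> Locale? {
  let locale = requested ?? Locale.current
  let supportedIDs = Set(supported.map { normalizedIdentifier(of: $0) })
  return supportedIDs.contains(normalizedIdentifier(of: locale)) ? locale : nil
}

// Usage in the app (assumes `import Speech`):
// let locale = resolveLocale(recording.locale,
//                            supported: SFSpeechRecognizer.supportedLocales())
```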

Now that you are passing the locale of files you’re transcribing, you’ll be able to successfully transcribe a recording in any supported language. Build and run, and select the song Raise Your Hands, which is in Thai. Play it, and then tap Transcribe to see the transcribed content.

Flawless transcription! Presumably.

Live transcription is very similar to file transcription. The primary difference is the request type: live transcription uses SFSpeechAudioBufferRecognitionRequest.

As the name implies, this type of request reads from an audio buffer. Your task will be to append live audio buffers to this request as they arrive from the source. Once connected, the actual transcription process will be identical to the one for recorded audio.

Another consideration for live audio is that you’ll need a way to stop a transcription when the user is done speaking. This requires maintaining a reference to the SFSpeechRecognitionTask so that it can later be canceled.
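Putting those pieces together, a live transcriber needs an audio engine tap that appends buffers to an SFSpeechAudioBufferRecognitionRequest, plus a stored SFSpeechRecognitionTask so the transcription can be canceled. The class below is a minimal sketch, not the starter project’s implementation: LiveTranscriber is a hypothetical name, it assumes speech and microphone permission were already granted, and on iOS it assumes you’ve configured AVAudioSession for recording elsewhere.

```swift
import AVFoundation
import Speech

// Hypothetical live transcriber; permissions and audio session setup are assumed.
final class LiveTranscriber {
  private let audioEngine = AVAudioEngine()
  private let recognizer = SFSpeechRecognizer()
  private var request: SFSpeechAudioBufferRecognitionRequest?
  // Kept so the transcription can be canceled when the user stops speaking.
  private var task: SFSpeechRecognitionTask?

  // True between a successful start() and the matching stop().
  var isRunning: Bool { return task != nil }

  func start(onTranscription: @escaping (String) -> Void) throws {
    guard !isRunning, let recognizer = recognizer, recognizer.isAvailable else { return }

    let request = SFSpeechAudioBufferRecognitionRequest()
    self.request = request

    // Append each microphone buffer to the request as it arrives.
    let inputNode = audioEngine.inputNode
    let format = inputNode.outputFormat(forBus: 0)
    inputNode.installTap(onBus: 0, bufferSize: 1024, format: format) { buffer, _ in
      request.append(buffer)
    }

    audioEngine.prepare()
    do {
      try audioEngine.start()
    } catch {
      // Undo the tap so a later start() can install it again.
      inputNode.removeTap(onBus: 0)
      self.request = nil
      throw error
    }

    // Same call as for files; the handler runs on the recognizer's queue.
    task = recognizer.recognitionTask(with: request) { result, _ in
      if let result = result {
        onTranscription(result.bestTranscription.formattedString)
      }
    }
  }

  func stop() {
    guard isRunning else { return }
    audioEngine.stop()
    audioEngine.inputNode.removeTap(onBus: 0)
    request?.endAudio()
    task?.cancel()
    task = nil
    request = nil
  }
}
```

A view controller would call start(onTranscription:) when the live feature appears and stop() when the user is done, which is where keeping the task reference pays off.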

Gangstribe has some pretty cool tricks up its sleeve. For this feature, you’ll not only transcribe live audio, but you’ll use the transcriptions to trigger some visual effects. With the use of the FaceReplace library, speaking the name of a supported emoji will plaster it right over your face!
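One way to connect transcriptions to emojis: since bestTranscription is cumulative, you can look only at its most recent word and check it against the emoji names you support. The sketch below is plain Swift and independent of the FaceReplace library; emojiKeywords and emojiForLatestWord(in:keywords:) are hypothetical names, and the three-entry keyword table is an assumption, not FaceReplace’s actual list.

```swift
import Foundation

// Hypothetical keyword table; the emoji names FaceReplace supports may differ.
let emojiKeywords: [String: String] = [
  "unicorn": "🦄",
  "cat": "🐱",
  "fire": "🔥"
]

// Returns the emoji for the last spoken word, or nil if it isn't a keyword.
// Looking only at the last word avoids re-triggering on earlier words,
// because each new result repeats the whole transcription so far.
func emojiForLatestWord(in transcription: String,
                        keywords: [String: String]) -> String? {
  let words = transcription
    .lowercased()
    .components(separatedBy: CharacterSet.alphanumerics.inverted)
    .filter { !$0.isEmpty }
  guard let lastWord = words.last else { return nil }
  return keywords[lastWord]
}
```

Fed from a live result handler, this could be called with each new formattedString, placing an emoji only when a non-nil value comes back.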