iOS devices provide rich multimedia experiences to users with vivid visual, audio and haptic interfaces. Despite the broad range of capabilities on offer, as developers we tend to focus almost exclusively on the visual design of our apps and neglect the audio side of the user experience.

AudioKit is a comprehensive audio framework built by audio designers, programmers and musicians for iOS. Under the hood, AudioKit is a mixture of Swift, Objective-C, C++ and C, interfacing with Apple’s Audio Unit API. All of this fantastic (and quite complex) technology is wrapped up in a super-friendly Swift API that you can use directly within Xcode Playgrounds!

This AudioKit tutorial doesn’t attempt to teach you all there is to know about AudioKit. Instead, you’ll be taken on a fun and gentle journey through the framework via the history of sound synthesis and computer audio. Along the way, you’ll learn the basic physics of sound, and how early synthesizers such as the Hammond Organ work. You’ll eventually arrive at the modern day where sampling and mixing dominate.

So pour yourself a coffee, pull up a chair and get ready for the journey!

Getting Started

Admittedly, the first step on your journey isn’t all that exciting. There’s some basic plumbing required in order to use AudioKit within a playground.

Open Xcode and create a new workspace via File\New\Workspace, name it Journey Through Sound, and save it in a convenient location.

At this point you’ll see an empty workspace. Click the + button in the bottom left-hand corner of the Navigator view. Select the New Playground… option, name it Journey, and save it in the same location as your workspace.

Your newly added playground will compile and execute, and look like the following:

Download the AudioKit source code and unzip the file into the same folder as your playground.

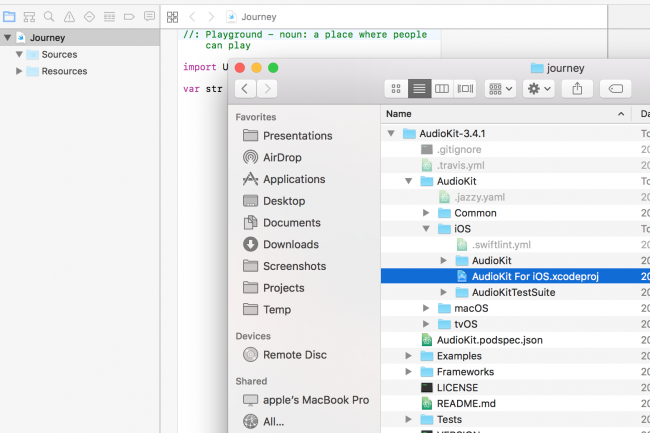

Open Finder, and drag the Xcode project located at AudioKit-3.4.1/AudioKit/iOS/AudioKit For iOS.xcodeproj into the root of your workspace.

Your Navigator view will look like the following:

Select iPhone 7 Plus as the build target:

Then select the menu option Product\Build to build the AudioKit framework. There are ~700 files in the framework, so expect this to take a little time!

Note: When using AudioKit within an application, as opposed to a playground, you can download and use the precompiled framework instead or use CocoaPods or Carthage. The only reason you need to follow this slightly more complex process here is that playgrounds don’t support frameworks just yet!

Once complete, click on Journey to open your playground. Replace the Xcode-generated code with the following:

    import AudioKit

    let oscillator = AKOscillator()
    AudioKit.output = oscillator
    AudioKit.start()

    oscillator.start()

    sleep(10)

Once built, you’ll hear your playground emit 10 seconds of a beeping sound. You can use the Play/Stop button at the bottom left of the playground window within the Debug Area to stop or repeat the playground.

Note: If the playground fails to execute, and you see errors in the Debug Area, try restarting Xcode. Unfortunately, using playgrounds in combination with frameworks can be a little error-prone and unpredictable. :[

Oscillators and the Physics of Sound

Humans have been making music from physical objects, by hitting, plucking and strumming them in various ways, for thousands of years. Many of our traditional instruments, such as drums and guitars, have been around for centuries. The first recorded use of an electronic instrument, or at least the first time electronic circuitry was used to make sound, was in 1874 by Elisha Gray, who worked in the field of telecommunication. Elisha discovered the oscillator, the most basic of sound synthesizers, which is where your exploration will begin.

Right click your playground, select New Playground Page, and create a new page named Oscillators.

Replace the generated code with the following:

    import AudioKit
    import PlaygroundSupport

    // 1. Create an oscillator
    let oscillator = AKOscillator()

    // 2. Start the AudioKit 'engine'
    AudioKit.output = oscillator
    AudioKit.start()

    // 3. Start the oscillator
    oscillator.start()

    PlaygroundPage.current.needsIndefiniteExecution = true

The playground will emit a never-ending beep — how, er, lovely. You can press Stop if you like.

This is much the same as your test playground that you created in the previous step, but this time you are going to dig into the details.

Considering each point in turn:

- This creates an AudioKit oscillator, which subclasses AKNode. Nodes form the main building blocks of your audio pipeline.

- This associates your final output node, which in your case is your only node, with the AudioKit engine. This engine is similar to a physics or game engine: You must start it and keep it running in order to execute your audio pipeline.

- Finally, this starts the oscillator, which causes it to emit a sound wave.

An oscillator creates a repeating, or periodic signal that continues indefinitely. In this playground, the AKOscillator produces a sine wave. This digital sine wave is processed by AudioKit, which directs the output to your computer’s speakers or headphones, which physically oscillate with the exact same sine wave. This sound is transmitted to your ears by compression waves in the air around you, which is how you can hear that annoyingly sharp sound!

There are two parameters that determine what the oscillator sounds like: its amplitude, which is the height of the sine wave and determines how loud it is, and its frequency, which determines the pitch.

Within your playground, add the following after the line where you created the AKOscillator:

    oscillator.frequency = 300
    oscillator.amplitude = 0.5

Listen closely, and you’ll hear the sound is now half as loud and much lower in pitch. The frequency, measured in hertz (or cycles per second), determines the pitch of the note. The amplitude, with a scale from 0 to 1, gives the volume.
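To make the roles of frequency and amplitude concrete, here is a minimal plain-Swift sketch of the sine wave itself. It doesn't use AudioKit at all; `sineSample` is a hypothetical helper written for this illustration, not a function the framework provides.

```swift
import Foundation

/// Value of a sine wave at time `t` (in seconds).
/// `frequency` is in hertz; `amplitude` is on the tutorial's 0...1 scale.
func sineSample(frequency: Double, amplitude: Double, at t: Double) -> Double {
    return amplitude * sin(2.0 * Double.pi * frequency * t)
}

// A 300 Hz wave completes one cycle every 1/300 s,
// so it reaches its peak a quarter of the way through that cycle.
let period = 1.0 / 300.0
let peak = sineSample(frequency: 300, amplitude: 0.5, at: period / 4)
print(peak) // very close to 0.5, the amplitude
```

Raising `frequency` packs more cycles into each second (a higher pitch), while `amplitude` only scales the height of the wave (the loudness).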

Elisha Gray was unfortunately beaten to the patent office by Alexander Graham Bell, narrowly missing out on going down in history as the inventor of the telephone. However, his accidental discovery of the oscillator did result in the very first patent for an electronic musical instrument.

Many years later, Léon Theremin invented a slightly strange musical instrument that is still used today. With the eponymous theremin, you can change the frequency of an electronic oscillator by waving your hand above the instrument. If you have no idea what this instrument sounds like, I’d recommend listening to Good Vibrations by the Beach Boys; you can’t miss the distinctive theremin sound in that track!

You can simulate this effect by adding the following code to the end of your playground:

    oscillator.rampTime = 0.2
    oscillator.frequency = 500
    AKPlaygroundLoop(every: 0.5) {
        oscillator.frequency =
            oscillator.frequency == 500 ? 100 : 500
    }

The rampTime property allows the oscillator to transition smoothly between property values (e.g. frequency or amplitude) rather than jumping instantly. AKPlaygroundLoop is a useful little utility provided by AudioKit for periodically executing code in playgrounds. In this case, you are simply toggling the oscillator frequency between 500 Hz and 100 Hz every 0.5 seconds.

You just built your very own theremin!
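The smooth glide that a ramp time provides can be pictured as interpolation between the old and new values. The sketch below assumes a straight-line glide; the tutorial doesn't say which curve AudioKit actually uses, so treat `rampedFrequency` as a hypothetical illustration rather than the framework's implementation.

```swift
import Foundation

/// Frequency `elapsed` seconds after a change from `start` to `target`,
/// assuming a straight-line glide that lasts `rampTime` seconds.
func rampedFrequency(from start: Double, to target: Double,
                     rampTime: Double, elapsed: Double) -> Double {
    guard rampTime > 0 else { return target }          // no ramp: jump immediately
    let progress = min(max(elapsed / rampTime, 0), 1)   // clamp to 0...1
    return start + (target - start) * progress
}

// Halfway through a 0.2 s ramp from 500 Hz down to 100 Hz.
let midGlide = rampedFrequency(from: 500, to: 100, rampTime: 0.2, elapsed: 0.1)
print(midGlide)
```

With a 0.2-second ramp and a toggle every 0.5 seconds, the pitch spends part of each half-second sliding and the rest sitting on the target note.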

Simple oscillators can create musical notes, but are not terribly pleasing to the ear. There are a number of other factors that give physical instruments, such as the piano, their distinctive sound. In the next few sections you’ll explore how these are constructed.

Sound Envelopes

When a musical instrument plays a note, the amplitude (or loudness) varies over time, and is different from instrument to instrument. A model that can be used to simulate this effect is an Attack-Decay-Sustain-Release (ADSR) Envelope:

The component parts of this envelope are:

- Attack: The time taken to ramp up to full volume

- Decay: The time taken to ramp down to the sustain level

- Sustain: The level maintained after the decay has finished and before release has begun

- Release: The time taken to ramp the volume down to zero

A piano, where strings are hit with a hammer, has a very brief attack and a rapid decay. A violin can have a longer attack, decay and sustain as the musician continues to bow the string.

One of the first electronic instruments that used an ADSR envelope was the Novachord. This instrument, built in 1939, contained 163 vacuum tubes and over 1,000 custom capacitors, and weighed in at 500 pounds (230 kg). Unfortunately, only one thousand Novachords were made and it was not a commercial success.

Image courtesy of Hollow Sun – CC attribution license.

Control-click the top element in your playground, Journey, select New Playground Page and create a new page named ADSR. Replace the generated content with the following:

    import AudioKit
    import PlaygroundSupport

    let oscillator = AKOscillator()

This creates the oscillator that you are already familiar with. Next, add the following code to the end of your playground:

    let envelope = AKAmplitudeEnvelope(oscillator)
    envelope.attackDuration = 0.01
    envelope.decayDuration = 0.1
    envelope.sustainLevel = 0.1
    envelope.releaseDuration = 0.3

This creates an AKAmplitudeEnvelope which defines an ADSR envelope. The duration parameters are specified in seconds and the level is an amplitude with a range of 0 – 1.

AKAmplitudeEnvelope subclasses AKNode, just like AKOscillator. In the above code, you can see that the oscillator is passed to the envelope’s initializer, connecting the two nodes together.

Next add the following:

    AudioKit.output = envelope
    AudioKit.start()

    oscillator.start()

This starts the AudioKit engine, this time taking the output from the ADSR envelope, and starts the oscillator.

In order to hear the effect of the envelope you need to repeatedly start then stop the node. That’s the final piece to add to your playground:

    AKPlaygroundLoop(every: 0.5) {
        if (envelope.isStarted) {
            envelope.stop()
        } else {
            envelope.start()
        }
    }

    PlaygroundPage.current.needsIndefiniteExecution = true

You will now hear the same note played repeatedly, but this time with a sound envelope that sounds a little bit like a piano.

The loop executes two times per second, with each iteration either starting or stopping the envelope. When the envelope starts, the rapid attack to full volume takes just 0.01 seconds, followed by a 0.1-second decay to the sustain level. The sustain level is then held until the next iteration stops the envelope, 0.5 seconds after it started, at which point the note fades out over a 0.3-second release.
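The timeline described above can be sketched as a small gain function. This is a plain-Swift illustration that assumes straight-line segments for each phase; the `ADSR` struct and its methods are hypothetical names, and AudioKit's own envelope may well shape its curves differently.

```swift
import Foundation

/// Parameters of an ADSR envelope; durations in seconds, sustain level 0...1.
struct ADSR {
    var attack: Double
    var decay: Double
    var sustain: Double
    var release: Double

    /// Gain while the note is held, `t` seconds after it started.
    func heldGain(at t: Double) -> Double {
        if t <= 0 { return 0 }
        if t < attack { return t / attack }              // ramp up to full volume
        if t < attack + decay {                           // ramp down to the sustain level
            let progress = (t - attack) / decay
            return 1 - (1 - sustain) * progress
        }
        return sustain                                    // hold
    }

    /// Gain at time `t` for a note that is stopped at `noteOff` seconds.
    func gain(at t: Double, noteOff: Double) -> Double {
        if t < noteOff { return heldGain(at: t) }
        guard release > 0 else { return 0 }
        let startLevel = heldGain(at: noteOff)            // level at the moment of release
        let progress = min((t - noteOff) / release, 1)
        return startLevel * (1 - progress)                // fade to silence
    }
}

// The piano-like settings from this playground, with the note stopped after 0.5 s.
let piano = ADSR(attack: 0.01, decay: 0.1, sustain: 0.1, release: 0.3)
for t in [0.005, 0.06, 0.3, 0.65, 0.9] {
    print(t, piano.gain(at: t, noteOff: 0.5))
}
```

Multiplying each oscillator sample by this gain gives the shaped note. Lengthening `attack` and raising `sustain` is a reasonable starting point for a bowed-string feel.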

Play around with the ADSR values to try and create some other sounds. How about a violin?

The sounds you have explored so far have been based on sine waves produced by AKOscillator. While you can play musical notes with this oscillator, and use an ADSR to soften its sharp tones, you wouldn’t exactly call it musical!

In the next section you’ll learn how to create a richer sound.

Additive Sound Synthesis

Each musical instrument has a distinctive sound quality, known as its timbre. This is what makes a piano sound quite different from a violin, even though they’re playing exactly the same note. An important property of timbre is the sound spectrum that an instrument produces; this describes the range of frequencies that combine to produce a single note. Your current playgrounds used oscillators that emit a single frequency, which sounds quite artificial.

You can create a realistic synthesis of an instrument by adding together the output of a bank of oscillators to play a single note. This is known as additive synthesis, and is the subject of your next playground.

Right click your playground, select New Playground Page and create a new page named Additive Synthesis. Replace the generated content with the following:

    import AudioKit
    import PlaygroundSupport

    func createAndStartOscillator(frequency: Double) -> AKOscillator {
        let oscillator = AKOscillator()
        oscillator.frequency = frequency
        oscillator.start()
        return oscillator
    }

For additive synthesis, you need multiple oscillators. createAndStartOscillator is a convenient way to create them.

Next add the following:

    // Convert each integer to Double explicitly before multiplying
    let frequencies = (1...5).map { Double($0) * 261.63 }

This uses the closed range operator to create a range with the numbers from 1 to 5. You then map this range by multiplying each entry by 261.63. There is a reason for this magic number: It’s the frequency of middle C on a standard keyboard. The other frequencies are multiples of this value, which are known as harmonics.

Next add the following:

    let oscillators = frequencies.map {
        createAndStartOscillator(frequency: $0)
    }

This performs a further map operation to create your oscillators.

The next step is to combine them together. Add the following:

    let mixer = AKMixer()
    oscillators.forEach { mixer.connect($0) }

The AKMixer class is another AudioKit node; it takes the output of one or more nodes and combines them together.
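Conceptually, mixing a bank of oscillators amounts to adding their waveforms together sample by sample. The following plain-Swift sketch shows that idea without AudioKit; `Partial` and `mixedSample` are hypothetical names, and the 1/n amplitudes are only an illustrative choice, not values taken from the tutorial.

```swift
import Foundation

/// One component of the sound: a sine wave at `frequency` Hz with the given amplitude.
struct Partial {
    var frequency: Double
    var amplitude: Double
}

/// Sum of all partials at time `t` seconds: this is the "mixing" step.
func mixedSample(_ partials: [Partial], at t: Double) -> Double {
    return partials.reduce(0.0) { sum, partial in
        sum + partial.amplitude * sin(2.0 * Double.pi * partial.frequency * t)
    }
}

// Middle C plus its next four harmonics, each quieter than the last.
let fundamental = 261.63
let partials = (1...5).map { n in
    Partial(frequency: Double(n) * fundamental, amplitude: 1.0 / Double(n))
}
print(mixedSample(partials, at: 0.001))
```

Changing the individual amplitudes alters the shape of the summed wave, which is what you hear as a change in timbre.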

Next add the following:

    let envelope = AKAmplitudeEnvelope(mixer)
    envelope.attackDuration = 0.01
    envelope.decayDuration = 0.1
    envelope.sustainLevel = 0.1
    envelope.releaseDuration = 0.3

    AudioKit.output = envelope
    AudioKit.start()

    AKPlaygroundLoop(every: 0.5) {
        if (envelope.isStarted) {
            envelope.stop()
        } else {
            envelope.start()
        }
    }

The above code should be quite familiar to you; it adds an ADSR to the output of the mixer, provides it to the AudioKit engine, then periodically starts and stops it.

To really learn how additive synthesis works, it would be nice if you could play around with the various combinations of these frequencies. The playground live-view is an ideal tool for this!

Add the following code:

    class PlaygroundView: AKPlaygroundView {

        override func setup() {
            addTitle("Harmonics")

            oscillators.forEach { oscillator in
                let harmonicSlider = AKPropertySlider(
                    property: "\(oscillator.frequency) Hz",
                    value: oscillator.amplitude
                ) { amplitude in
                    oscillator.amplitude = amplitude
                }
                addSubview(harmonicSlider)
            }
        }
    }

    PlaygroundPage.current.needsIndefiniteExecution = true
    PlaygroundPage.current.liveView = PlaygroundView()

AudioKit has a number of classes that make it easy for you to create interactive playgrounds; you’re using several of them here.

The PlaygroundView class subclasses AKPlaygroundView, which constructs a vertical stack of subviews. Within the setup method, you iterate over the oscillators, and create an AKPropertySlider for each. The sliders are initialized with the frequency and amplitude of each oscillator and invoke a callback when you interact with the slider. The trailing closure that provides this callback updates the amplitude of the respective oscillator. This is a very simple way to make your playground interactive.

In order to see the results of the above code, you need to ensure the live view is visible. Click the button with the linked circles icon in the top right corner to show the assistant view. Also ensure that the live view is set to the correct playground output.

You can alter the amplitude of each slider to change the timbre of your instrument. For a more natural sound quality, I’d suggest a configuration similar to the one pictured above.

One of the earliest synthesizers to employ additive synthesis was the 200 ton (!) Teleharmonium. The immense size and weight of this instrument were almost certainly responsible for its demise. The more successful Hammond organ used a similar tonewheel technique, albeit in a smaller package, to achieve the same additive synthesis which was part of its distinctive sound. Invented in 1935, the Hammond is still well-known and was a popular instrument in the progressive rock era.

The C3 Hammond – public domain image.

Tonewheels are physical spinning disks with a number of smooth bumps on their rim, which rotate next to a pickup assembly. The Hammond organ has a whole bank of these tonewheels spinning at various different speeds. The musician uses drawbars to determine the exact mix of tones used to generate a musical note. This rather crude sounding way of creating sound is, strictly speaking, electromechanical rather than electronic!

There are a number of other techniques that can be used to create a more realistic sound spectrum, including Frequency Modulation (FM) and Pulse Width Modulation (PWM), both of which are available in AudioKit via the AKFMOscillator and AKPWMOscillator classes. I’d certainly encourage you to play around with both of these. Why not swap out the AKOscillator you are using in your current playgrounds for one of these?

Polyphony

The 1970s saw a shift away from modular synthesis, which uses separate oscillators, envelopes and filters, to the use of microprocessors. Rather than using analogue circuitry, sounds were instead synthesized digitally. This resulted in far cheaper and more portable sound synthesizers, with brands such as Yamaha becoming widely used by professionals and amateurs alike.

The 1983 Yamaha DX7 – public domain image.

All of your playgrounds so far have been limited to a single note at a time. With many instruments, musicians are able to play more than one note simultaneously. These instruments are called polyphonic, whereas those that can only play a single note, just like your examples, are called monophonic.

In order to create polyphonic sound, you could create multiple oscillators, each playing a different note, and feed them through a mixer node. However, there is a much easier way to create the same effect: using AudioKit’s oscillator banks.

Ctrl-click your playground, select New Playground Page and create a new page named Polyphony. Replace the generated content with the following:

    import PlaygroundSupport
    import AudioKit

    let bank = AKOscillatorBank()
    AudioKit.output = bank
    AudioKit.start()

This simply creates the oscillator bank and sets it as the AudioKit output. If you Command-click the AKOscillatorBank class to navigate to its definition, you will find that it subclasses AKPolyphonicNode. If you follow this to its definition, you’ll find that it subclasses AKNode and adopts the AKPolyphonic protocol.

As a result, this oscillator bank is just like any other AudioKit node in that its output can be processed by mixers, envelopes and any other filters and effects. The AKPolyphonic protocol describes how you play notes on this polyphonic node, as you’ll see shortly.

In order to test this oscillator bank, you need a way to play multiple notes in unison. That sounds a bit complicated, doesn’t it?

Add the following to the end of your playground, and ensure the live view is visible:

    class PlaygroundView: AKPlaygroundView {

        override func setup() {
            let keyboard = AKKeyboardView(width: 440, height: 100)
            addSubview(keyboard)
        }
    }

    PlaygroundPage.current.liveView = PlaygroundView()
    PlaygroundPage.current.needsIndefiniteExecution = true

Once the playground has compiled you’ll see the following:

How cool is that? A playground that renders a musical keyboard!

The AKKeyboardView is another AudioKit utility that makes it really easy to ‘play’ with the framework and explore its capabilities. Click on the keys of the keyboard, and you’ll find it doesn’t make a sound.

Time for a bit more wiring-up.

Update the setup method of your PlaygroundView to the following:

    let keyboard = AKKeyboardView(width: 440, height: 100)
    keyboard.delegate = self
    addSubview(keyboard)

This sets the keyboard view’s delegate to the PlaygroundView class. The delegate allows you to respond to these keypresses.

Update the class definition accordingly:

    class PlaygroundView: AKPlaygroundView, AKKeyboardDelegate

This adopts the AKKeyboardDelegate protocol. Finally add the following methods to the class, just after setup:

    func noteOn(note: MIDINoteNumber) {
        bank.play(noteNumber: note, velocity: 80)
    }

    func noteOff(note: MIDINoteNumber) {
        bank.stop(noteNumber: note)
    }

Each time you press a key, the keyboard invokes noteOn on the delegate. The implementation of this method is quite straightforward; it simply invokes play on the oscillator bank. Likewise, noteOff invokes the corresponding stop method.
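If the delegate pattern is new to you, this AudioKit-free sketch shows the same wiring in miniature. Every type here (`NoteListener`, `VoiceTracker`, `ToyKeyboard`) is a hypothetical stand-in invented for the illustration; none of them exist in AudioKit. The tracker simply remembers which notes are currently held, which is the bookkeeping any polyphonic instrument has to do.

```swift
/// Hypothetical stand-in for a keyboard delegate protocol; not part of AudioKit.
protocol NoteListener: AnyObject {
    func noteOn(note: Int)
    func noteOff(note: Int)
}

/// Records which notes are currently held down.
final class VoiceTracker: NoteListener {
    private(set) var activeNotes = Set<Int>()

    func noteOn(note: Int) {
        activeNotes.insert(note)
    }

    func noteOff(note: Int) {
        activeNotes.remove(note)
    }
}

/// Hypothetical keyboard that forwards key events to its delegate.
final class ToyKeyboard {
    weak var delegate: NoteListener?

    func press(_ note: Int) { delegate?.noteOn(note: note) }
    func release(_ note: Int) { delegate?.noteOff(note: note) }
}

let tracker = VoiceTracker()
let keyboard = ToyKeyboard()
keyboard.delegate = tracker

keyboard.press(60)   // C
keyboard.press(64)   // E
keyboard.press(67)   // G
keyboard.release(64)
print(tracker.activeNotes.sorted()) // [60, 67]
```

The keyboard knows nothing about sound; it only reports events, and the delegate decides what to do with them.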

Click and slide across the keyboard, and you’ll find it plays a beautiful crescendo. This oscillator bank already has ADSR capabilities built in. As a result, the decay from one note mixes with the attack, release and sustain of the next, creating quite a pleasing sound.

You’ll notice that the note supplied by the keyboard is not defined as a frequency. Instead, it uses the MIDINoteNumber type. If you Command-click to view its definition, you’ll see that it is simply an integer:

    public typealias MIDINoteNumber = Int

MIDI stands for Musical Instrument Digital Interface, which is a widely adopted communication format between musical instruments. The note numbers correspond to notes on a standard keyboard. The second parameter in the play method is velocity, another standard MIDI property, which details how hard a note is struck. Lower values indicate a softer strike, which results in a quieter sound.
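To connect note numbers back to the frequencies used earlier, here is a short plain-Swift sketch of the usual conversion. It assumes twelve-tone equal temperament with note 69 (the A above middle C) tuned to 440 Hz; `frequency(forMIDINote:)` is a hypothetical helper, not an AudioKit call.

```swift
import Foundation

/// Frequency in hertz of a MIDI note number, assuming equal temperament
/// with note 69 (A above middle C) tuned to 440 Hz.
func frequency(forMIDINote note: Int) -> Double {
    // Each semitone multiplies the frequency by the twelfth root of two.
    return 440.0 * pow(2.0, Double(note - 69) / 12.0)
}

print(frequency(forMIDINote: 60)) // middle C, roughly 261.63 Hz
print(frequency(forMIDINote: 69)) // A, 440 Hz
```

Note 60 lands on the same middle C frequency you used as the fundamental in the additive synthesis playground.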

The final step is to set the keyboard to polyphonic mode. Add the following to the end of the setup method:

    keyboard.polyphonicMode = true

You will find you can now play multiple notes simultaneously, just like the following:

…which is, incidentally, C-major.

AudioKit has a long history with its foundations in the early microprocessor era. The project uses Soundpipe, and code from Csound, an MIT project that started in 1985. It’s fascinating to think that audio code you can run in a playground and add to your iPhone apps started life over 30 years ago!

Sampling

The sound synthesis techniques you have explored so far all try to construct realistic sounds from quite basic building blocks: oscillators, filters and mixers. In the early 1970s, the increase in computer processing power and storage gave rise to a completely different approach — sound sampling — where the aim is to create a digital copy of the sound.

Sampling is a relatively simple concept and shares the same principles as digital photography. Natural sounds are smooth waveforms; the process of sampling simply records the amplitude of the soundwave at regularly spaced intervals:

There are two important factors that affect how faithfully a sound is captured:

- Bit depth: Describes the number of discrete amplitude levels a sampler can reproduce.

- Sample rate: Describes how often an amplitude measurement is taken, measured in hertz.
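These two factors can be sketched in a few lines of plain Swift: take a measurement at regular intervals (the sample rate) and snap each one to the nearest available level (the bit depth). The helper names are hypothetical, and the rounding scheme is just one simple way to quantize, chosen for illustration.

```swift
import Foundation

/// Snap a sample in -1...1 to the nearest of the 2^bitDepth evenly spaced levels.
func quantize(_ sample: Double, bitDepth: Int) -> Double {
    let steps = pow(2.0, Double(bitDepth)) - 1           // number of gaps between levels
    let clamped = min(max(sample, -1), 1)
    let index = ((clamped + 1) / 2 * steps).rounded()     // which level, from 0 to steps
    return index / steps * 2 - 1
}

/// Measure a sine wave `sampleRate` times per second for `duration` seconds.
func sampleSine(frequency: Double, sampleRate: Double,
                duration: Double, bitDepth: Int) -> [Double] {
    let count = Int(sampleRate * duration)
    return (0..<count).map { n -> Double in
        let t = Double(n) / sampleRate                    // time of the n-th measurement
        return quantize(sin(2.0 * Double.pi * frequency * t), bitDepth: bitDepth)
    }
}

// A 3-bit capture can only ever contain 8 distinct amplitude values.
let coarse = sampleSine(frequency: 440, sampleRate: 8000, duration: 0.01, bitDepth: 3)
print(coarse.count, Set(coarse).count)
```

A higher bit depth means finer amplitude steps, and a higher sample rate means more measurements per second; both bring the digital copy closer to the original smooth waveform.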

You’ll explore these properties with your next playground.

Right-click your playground, select New Playground Page and create a new page named Samples. Replace the generated content with the following:

    import PlaygroundSupport
    import AudioKit

    let file = try AKAudioFile(readFileName: "climax-disco-part2.wav",
                               baseDir: .resources)
    let player = try AKAudioPlayer(file: file)
    player.looping = true

The above loads a sound sample, creates an audio player and sets it to repeatedly loop the sample.

The WAV file for this AudioKit tutorial is available within this zip file. Unzip the contents, then drag the WAV files into the resources folder of your playground:

Finally, add the following to the end of your playground:

    AudioKit.output = player
    AudioKit.start()

    player.play()

    PlaygroundPage.current.needsIndefiniteExecution = true

This wires up your audio player to the AudioKit engine and starts it playing. Turn up the volume and enjoy!

This brief sampled loop comprises a wide variety of sounds that would be a real challenge with the basic building blocks of oscillators.

The WAV sample you’re using has a high bit depth and sample rate, giving it a crisp and clear sound. In order to experiment with these parameters, add the following code to your playground, just after you create your audio player:

    let bitcrusher = AKBitCrusher(player)
    bitcrusher.bitDepth = 16
    bitcrusher.sampleRate = 40000

And update the AudioKit output:

    AudioKit.output = bitcrusher

The output of the playground is now very different; it’s clearly the same sample, but it now sounds very tinny.

AKBitCrusher is an AudioKit effect that simulates a reduction of bit depth and sample rate. As a result, you can produce an audio output that is similar to the early samples produced by computers such as the ZX Spectrum or BBC Micro, which only had a few kilobytes of memory and processors that are millions of times slower than today’s!
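One simple way to picture a reduced sample rate is "sample and hold": take a fresh reading only every few samples and repeat it in between. The plain-Swift sketch below illustrates that idea; it is an assumption made for teaching purposes and is not claimed to be how AKBitCrusher works internally. `sampleAndHold` is a hypothetical helper.

```swift
/// Crude sample-rate reduction: keep every `factor`-th sample and repeat it
/// until the next one is due (a "sample and hold").
func sampleAndHold(_ input: [Double], factor: Int) -> [Double] {
    guard factor > 1 else { return input }
    var output: [Double] = []
    output.reserveCapacity(input.count)
    var held = 0.0
    for (index, sample) in input.enumerated() {
        if index % factor == 0 { held = sample }   // take a fresh reading
        output.append(held)                        // otherwise repeat the old one
    }
    return output
}

let smooth: [Double] = [0.0, 0.1, 0.2, 0.3, 0.4, 0.5, 0.6]
print(sampleAndHold(smooth, factor: 3)) // [0.0, 0.0, 0.0, 0.3, 0.3, 0.3, 0.6]
```

The smooth ramp turns into a staircase, and that loss of fine detail is where the harsh, lo-fi character comes from.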

For your final experiment, you’re going to assemble a number of nodes together to create a stereo delay effect. To start, remove the three lines of code that create and configure the bit crusher.

Next, add the following:

    let delay = AKDelay(player)
    delay.time = 0.1
    delay.dryWetMix = 1

This creates a delay effect of 0.1 seconds using your sample loop as an input. The dry/wet mix value lets you blend the non-delayed (dry) and delayed (wet) audio. In this case, a value of 1 ensures only the delayed audio is output by this node.
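A delay with a dry/wet control can be sketched on a plain array of samples. The version below assumes a simple linear blend and no feedback, and expresses the delay in samples rather than seconds; `delayed` is a hypothetical helper, not AudioKit's implementation.

```swift
/// Delay `input` by `delaySamples` samples and blend it with the original.
/// `mix` = 0 gives only the original (dry) signal, 1 only the delayed (wet) one.
/// Assumes a simple linear blend and no feedback.
func delayed(_ input: [Double], delaySamples: Int, mix: Double) -> [Double] {
    let offset = max(delaySamples, 0)
    return input.indices.map { n -> Double in
        let wet = n >= offset ? input[n - offset] : 0.0   // silence until the echo arrives
        return (1 - mix) * input[n] + mix * wet
    }
}

// At 44,100 samples per second a 0.1 s delay would be 4,410 samples;
// a 2-sample delay keeps this demonstration readable.
let click: [Double] = [1, 0, 0, 0, 0]
print(delayed(click, delaySamples: 2, mix: 1))    // [0.0, 0.0, 1.0, 0.0, 0.0]
print(delayed(click, delaySamples: 2, mix: 0.5))  // [0.5, 0.0, 0.5, 0.0, 0.0]
```

With the mix at 1 the click simply moves later in time, which matches the behaviour described for the delay node in this playground.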

Next, add the following:

    let leftPan = AKPanner(player, pan: -1)
    let rightPan = AKPanner(delay, pan: 1)

The AKPanner node lets you pan audio to the left, to the right, or somewhere in between. The above pans the non-delayed audio to the left, and the delayed audio to the right.
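Panning boils down to choosing how much of a signal goes to each speaker. This plain-Swift sketch assumes a linear pan law, which is the simplest option; the tutorial doesn't state which law AudioKit applies, so `panned` is only a hypothetical illustration.

```swift
/// Split a mono sample into (left, right) using a linear pan law.
/// `pan` runs from -1 (hard left) through 0 (centre) to 1 (hard right).
func panned(_ sample: Double, pan: Double) -> (left: Double, right: Double) {
    let position = min(max(pan, -1), 1)       // clamp to the valid range
    let rightGain = (position + 1) / 2         // 0 at hard left, 1 at hard right
    return (left: sample * (1 - rightGain), right: sample * rightGain)
}

let hardLeft = panned(0.8, pan: -1)
let hardRight = panned(0.8, pan: 1)
let centre = panned(0.8, pan: 0)
print(hardLeft, hardRight, centre)
```

With pan values of -1 and 1, as in this playground, each signal reaches only one speaker, so the two never overlap in the same channel.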

The final step is to mix the two together and configure the AudioKit output. Add the following two lines in place of the old line that set the AudioKit output to the bit crusher:

    let mix = AKMixer(leftPan, rightPan)
    AudioKit.output = mix

This will play the same sound sample, but with a very short delay between the left and right speaker.

Where to Go From Here?

In this AudioKit tutorial, you’ve only scratched the surface of what’s possible with AudioKit. Start exploring: try a Moog filter, a pitch shifter, a reverb, or a graphic equalizer to see what effects they have.

With a little creativity, you’ll be creating your own custom sounds, electronic instruments or game effects.

You can download the finished playground, although you’ll still have to add the AudioKit library to the workspace as described in the Getting Started section.

Finally, thanks to Aurelius Prochazka, the AudioKit project lead, for reviewing this article.

If you have any questions or comments on this AudioKit tutorial, feel free to join the discussion below!

The post AudioKit Tutorial: Getting Started appeared first on Ray Wenderlich.