![Droidcon Boston 2018 Conference Report]()

There are many awesome Android conferences throughout the year, and the Droidcon series is one of my favorites. The presentations are consistently high quality, and for those who can’t be there in person, the conference videos are posted online soon after the events.

Droidcons take place throughout the year in Berlin, San Francisco, New York, and many other cities. In April 2017, Droidcon Boston held its first annual conference. I’ve had the opportunity to attend both Droidcon Boston 2017 and this year’s event, which was held these past two days, March 26-27, 2018.

This year’s Droidcon Boston was packed with attendees and excitement. Most of the sessions I attended used Kotlin in their code examples. Some talks focused on architecture, some took deep dives into tools like ProGuard and Gradle, and others discussed newer technologies such as ARCore, TensorFlow, and coroutines.

In this article, I’ll give you highlights and summaries of the sessions I was able to attend. There were many other conflicting sessions and workshops I wanted to attend that aren’t included in this report, but luckily we should be able to check them all out online soon.

We’ll update this post with a link to the conference videos once they’re available.

![Conference Welcome Screen]()

Day 1

Community Addiction: The 2nd Edition

The conference was kicked off with an introduction by organizers Giorgio Natili, Software Development Manager at Amazon; Eliza Camberogiannis, Android Developer at Pixplicity; and Garima Jain, Senior Android Engineer at Fueled. They call themselves the EGG team: Eliza, Garima, Giorgio! :]

The introduction detailed the conference info: 2 tracks with 25 talks and 6 workshops, lightning talks at lunchtime, and a Facebook happy hour followed by the official party.

The organizers discussed the following principles of the conference: good people; diversity; cool stuff including Kotlin, Gradle, and ML; respect; trust; love; integrity; and, being together.

Giorgio ended the introduction with a quote:

Keep calm, learn all the things, and have fun at Droidcon Boston 2018.

Keynote: Tips for Library Development from a Startup Developer

Lisa Wray, Android Developer, GDE, Present

Lisa’s talk was all about the why, when, and how of writing libraries. It was the “Boston edition” of her talk, presented in the style of XKCD. :]

Lisa started off with a great question: why write open-source libraries for free on the Internet? She made the case that writing libraries not only benefits the community, but is really in your own self-interest, and illustrated the point with a cool self-interest Venn diagram. She explained that writing a library is like joining a startup, just without all the money, but that you can benefit in unexpected ways. You often get community input: other people writing code for you for free. Sometimes you write a library out of bare necessity, and sometimes just to learn something new in a sandbox.

The next question then becomes: if you want to write a library, how do you go about it? Lisa explained that you want to start small, in light of Parkinson’s Law: work expands to fill all available time. Focus first on a single use case, and try not to use the word “and” when explaining what the library does. Using the library should take just a couple of lines of code: add a Gradle dependency and follow a dead simple example.

Lisa then gave a great list of principles to follow as you develop and release the library. Be your own user and dogfood your library, because if it’s not good enough for you, it’s not good enough for others. Be honest about the library’s scope and size. Use semantic versioning, and use alpha and beta releases to get feedback. Don’t advertise your library all over the place, e.g. in Stack Overflow answers; let users find it on their own. Be sure to include sufficient testing and continuous integration, which will greatly help you decide whether to accept pull requests when you receive them.

Lisa wrapped up her session with some things to watch out for. Determine who owns the library work you do while employed, whether at a big, medium, or small company. Be ready to face some competition. And realize that your library may eventually be deprecated when the Android SDK takes over its features.

I really loved Lisa’s last few points. She pointed out that no library is too small. Writing them is a great way to practice your craft. Look inside your app for boilerplate code, utilities or extensions. The community will thank you for a well-focused library.

Pragmatic Gradle for Your Multi-Module Projects

Clive Lee, Android Developer, Ovia Health

Gradle is the powerful build tool we use to make Android apps, but not everyone has mastered all its intricacies, especially for multi-module projects. Clive’s talk aimed to give you pragmatic tips to help you toward Gradle mastery.

Clive started by defining some terminology, like the root project, its build.gradle file, and dependency coordinates. He then explained that you need to keep Gradle code clean because, well, it’s code. He also discussed why you may want to use multiple modules: you can then use the tools to enforce dependencies and clean architecture layers. You also end up with faster builds thanks to compilation avoidance and caching. And loading multi-module projects into Android Studio is getting faster.

Next, Clive walked through his set of tips. The first ones were about enabling parallel execution and caching by adding org.gradle.parallel=true and org.gradle.caching=true to your gradle.properties file. He recommends the android-cache-fix-gradle-plugin. He pointed out that the project must be modularized for parallel Gradle execution to occur.

When using multiple modules, you don’t want dependency version numbers in multiple places. You need a better way. Clive discussed techniques progressing from putting the version numbers in the project-level build.gradle, to putting the entire dependency coordinate in the project-level build.gradle, to using a separate versions.gradle file, and finally, using a Java file to hold the coordinates, which gives you both code navigation and completion for the dependencies. Clive also mentioned using the Kotlin-Gradle DSL and that you can also store other constants like your minSdkVersion using these various techniques.
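Here’s a minimal sketch of that last step, assuming a Kotlin object (for example, placed in a buildSrc module) instead of the Java file Clive described. The object names and version numbers are illustrative, not from the talk. Module build scripts could then reference a constant like Deps.retrofit and get code navigation and completion in the IDE.

```kotlin
// Hypothetical single source of truth for versions and dependency coordinates.
// In a real project this might live in buildSrc so every module can see it.
object Versions {
    const val minSdk = 21          // illustrative values, not from the talk
    const val retrofit = "2.3.0"
    const val junit = "4.12"
}

object Deps {
    // Full coordinates are built once from the shared versions.
    const val retrofit = "com.squareup.retrofit2:retrofit:${Versions.retrofit}"
    const val junit = "junit:junit:${Versions.junit}"
}

fun main() {
    println(Deps.retrofit)   // com.squareup.retrofit2:retrofit:2.3.0
    println(Versions.minSdk) // 21
}
```

Changing a version in one place then updates every module that uses the constant.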

Clive then walked through how to get organized with your Gradle files. Put build.gradle files in a directory and add the name of the directory in settings.gradle; you can then access it easily in the Android Studio Project panel. Another great help is to rename each module’s build.gradle file to match the module name, which makes navigating and searching easier. To do this, set buildFileName for each of the root project’s subprojects in settings.gradle. It’s well worth the 5 minutes it takes. You can use a bash script for the renaming, and be sure to rename the build file of any new module you add to the project.

The end of the talk discussed pitfalls you may run into. Avoid using a “core” module for common dependencies, since it enlarges the surface between modules. The result is slower builds. Use a shared Gradle file instead. Also, proceed with caution when using nested modules, which, while they may make navigating easier, lead to a slower reload when switching branches. Also, they do not show up in the Project panel in Android Studio.

Lunch & a Lightning Talk: Background Processing

Karl Becker, Co-Founder / CTO, Third Iron

One really fun part of Droidcon Boston is hearing talks during lunch. On the first day, Karl Becker shared lessons learned from adding notifications and background processing to an app.

Some key considerations are platform differences, battery optimizations, notification-specific issues, and targeting the latest APIs. You also need to avoid skewing your metrics, since running the app in the background may increase your analytics session count. Determine what your analytics library does specifically, and consider sending foreground and background sessions to different buckets.

Once they rolled out the updated app with notifications, Karl and his team saw that Android notifications were tapped three times as often as those in their iOS app. He pointed out that even higher multiples are reported in articles online. Some possible reasons: notifications are a lot easier to get to on Android, and they go away quickly on iOS.

Key takeaways from Karl were: background processing is easy to implement, but hard to test. Schedule 2x time for testing. Support SDK 21 or newer if possible. Don’t expect background events to happen at specific times. And, notifications will be more impactful on Android than on iOS.

Practical Kotlin with a Disco Ball

Nicholas DiPatri, Principal Engineer, Comcast Corporation

In one of the first sessions after lunch on Day 1, Nicholas gave a great walkthrough of Kotlin from a Java developer’s perspective, using a disco ball and some Bee Gees music to do so! He’s built an app named RoboButton in Kotlin that uses Bluetooth beacons to control nearby things with your phone, such as the aforementioned disco ball. :]

![Disco Ball]()

The first question answered was: what is Kotlin? It’s a new language from JetBrains that compiles to JVM bytecode, so at the bytecode level it runs just like Java.

Next question: why use it? It’s way better than Java, fully supported by Google on Android, and Android leaders in the community use it. The only real risks with moving to Kotlin are the learning curve of a new language, and that the auto-converter in Android Studio from Java to Kotlin is imperfect.

Nicholas then gave a summary of Kotlin syntax. You declare mutable properties using var, which you can re-assign. Properties get synthesized accessors when used from Java. You create a read-only property with val. It’s wrong to call a val immutable; it just can’t be re-assigned. Kotlin has type inference and compile-time null checking that help you avoid runtime null pointer exceptions. There are safe-call (?.) and “Elvis” (?:) operators, as well as smart casts, for working with nullable values. For object construction and initialization, you can combine the class declaration and constructor in one line. You can use named arguments, and an init block contains initialization code. There is a subtle distinction between property and field in Kotlin: fields hold state, whereas properties expose state. Kotlin provides backing fields for properties.
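A small sketch pulling several of these features together. The Person class and its members are hypothetical, not taken from RoboButton.

```kotlin
// Class declaration and primary constructor combined in one line.
class Person(val name: String, var nickname: String? = null) {
    // val: the reference can't be re-assigned, but the list's contents can change.
    val tags = mutableListOf<String>()

    var age: Int = 0
        set(value) {
            field = if (value < 0) 0 else value  // 'field' is the backing field
        }

    init {
        require(name.isNotBlank()) { "name must not be blank" }
    }

    // Safe call (?.) plus Elvis (?:) fall back to name when nickname is null.
    fun displayName(): String = nickname?.trim() ?: name
}

fun describe(value: Any): String =
    if (value is String) "String of length ${value.length}"  // smart cast to String
    else "Not a string"

fun main() {
    val p = Person(name = "Nicholas")  // named argument; type of p is inferred
    p.tags.add("disco")
    p.age = -5
    println(p.displayName())           // Nicholas
    println(p.age)                     // 0
    p.nickname = " Nick "
    println(p.displayName())           // Nick
    println(describe("Bee Gees"))      // String of length 8
}
```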

Great places to begin learning Kotlin? The great documentation at kotlinlang.org, including a searchable language reference. The Kotlin style guide from Jake Wharton. And the online sandbox.

Nicholas pointed out that when moving to Kotlin, large legacy projects will be hybrid Java-Kotlin projects. Legacy Java can remain. New features can be written in Kotlin. Small projects can be completely converted, such as Nicholas converting the RoboButton project. You can use Code > Convert Java File to Kotlin File in Android Studio, but there is one bad thing: revision history is lost. Also, you may get some compile errors after the conversion, so you may need to sweep through and fix them.

Nicholas wrapped up his talk by discussing idiomatic Kotlin. When using Dagger for dependency injection, inject in the init block and fix any compile errors using lateinit. Perform view injection using the Kotlin Android Extensions. Use decompilation to get a better understanding of what Kotlin code is doing: generate the bytecode and then decompile it to Java. Nicholas showed how this revealed that the Kotlin Android Extensions view lookup uses a cached findViewById(). Use extension functions, which allow you to make local changes to a 3rd-party API.
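A hypothetical example of two of these idioms: an extension function that adds formatting to a class we pretend comes from a third-party library, and a lateinit property that is assigned after construction, as an injector would do. Track and Player are illustrative names only.

```kotlin
// Pretend this class comes from a library we can't modify.
class Track(val title: String, val durationSeconds: Int)

// Extension function: adds behavior locally without touching Track's source.
fun Track.formattedDuration(): String =
    "%d:%02d".format(durationSeconds / 60, durationSeconds % 60)

class Player {
    // lateinit: non-null type, assigned later (e.g. by an injector).
    lateinit var current: Track

    fun isReady(): Boolean = this::current.isInitialized
}

fun main() {
    val player = Player()
    println(player.isReady())                   // false
    player.current = Track("Demo track", 285)
    println(player.isReady())                   // true
    println(player.current.formattedDuration()) // 4:45
}
```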

TensorFlow for Android Developers

Joe Birch, Senior Android Engineer, GDE, Buffer

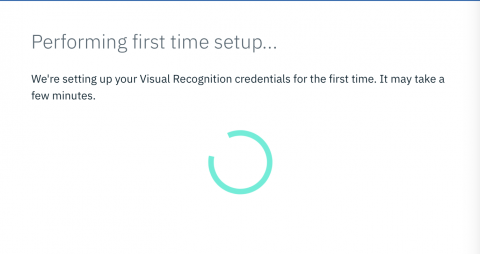

Like many developers, Joe Birch likes to explore new things as they come out. That’s how he came to TensorFlow, after a friend gave him a book on the topic. He was a little scared at first, but it turned out to be not so bad. His talk was not a deep dive into machine learning; instead, it showed how to use existing machine learning models in apps and how to re-train them. Joe used the TensorFlow library itself with Python on his dev machine for the re-training, and the TensorFlow Mobile library to use the re-trained model on a device.

Joe started with some machine learning 101: get data; clean, prepare, and manipulate it; train a model based on patterns in the data; then improve. Joe discussed the differences between unsupervised and supervised learning, and then described the supervised learning topics of classification and regression. Some machine learning applications are photo search, smart replies in Gmail, Google Translate, and YouTube video recommendations.

Then Joe began a dive into TensorFlow. It’s open source from Google, and Google uses it in its own applications. You use it to create a computational graph, a collection of nodes that each perform operations and compute values until the graph produces the final predicted result. Neural networks are built this way: models that can learn, but that need to be taught first. The result of the training is a model that can be exported, then used and re-trained.

For the rest of the talk, Joe detailed re-training an open-source image classification model to specifically recognize images of fruit, and then using the re-trained model in a sample app that lets you add the recognized fruit to a shopping cart.

Joe walked through using TensorBoard, a web-based suite of visualization tools, to see the training of your model in action. TensorBoard shows things like accuracy and loss rate. Joe listed all the steps you need to perform to re-train a model and examine the results of the re-training in TensorBoard. He started the re-training with a website that gave him 500 images of each type of fruit at different angles. To test the re-trained model, you take images outside of the training set and run them through the model. As an example, a banana was recognized with 94% probability in a few hundred milliseconds.

Before using the re-trained model, you want to optimize it using a Python script provided by TensorFlow, and also perform quantization via another Python script. Quantization normalizes values to improve compression so that the model file is smaller in your app. Joe achieved around 70% compression, from 50MB to 16MB. You want to check the model after optimization and quantization to make sure accuracy and performance were not impacted too much for your use case.
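To get a feel for what quantization does, here’s a generic min/max linear quantization sketch that maps each float weight to one of 256 levels so it fits in a byte. This is not TensorFlow’s script, just the underlying idea, and the function names are hypothetical. For reference, Joe’s numbers work out to (50 - 16) / 50 = 68% smaller, in line with the “around 70%” he reported.

```kotlin
import kotlin.math.roundToInt

// Quantized weights: 8-bit codes (0..255) plus the range needed to decode them.
class Quantized(val codes: IntArray, val min: Float, val max: Float)

fun quantize(weights: FloatArray): Quantized {
    require(weights.isNotEmpty()) { "no weights" }
    var min = weights[0]
    var max = weights[0]
    for (w in weights) {
        if (w < min) min = w
        if (w > max) max = w
    }
    val range = if (max > min) max - min else 1f  // avoid dividing by zero
    // Normalize each weight into [0, 1], then scale to the 256 levels.
    val codes = IntArray(weights.size) { i -> ((weights[i] - min) / range * 255f).roundToInt() }
    return Quantized(codes, min, max)
}

// Approximate reconstruction: error is at most half a level (range / 510).
fun dequantize(q: Quantized): FloatArray {
    val range = if (q.max > q.min) q.max - q.min else 1f
    return FloatArray(q.codes.size) { i -> q.min + q.codes[i] / 255f * range }
}

fun main() {
    val q = quantize(floatArrayOf(-1f, 0f, 1f))
    println(q.codes.joinToString())            // 0, 128, 255
    println(dequantize(q).joinToString())
}
```

The trade-off Joe mentioned shows up here directly: values are only recovered approximately, which is why you re-check accuracy afterward.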

After re-training, you have a model for use in an app. Add a TensorFlow dependency in the app. You put model files into the app assets folder. Create a TensorFlow reference. Feed in data from a photo or the camera as bitmap pixels, and run inference. Handle confidence of the prediction by setting a threshold.
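Confidence thresholding itself is plain Kotlin. In this sketch, the labels and scores stand in for the output of running inference; the Recognition type and the 0.7 default threshold are assumptions, and the TensorFlow Mobile calls that feed pixels and fetch outputs are left out.

```kotlin
// Hypothetical shape of one prediction: a label plus a confidence in [0, 1].
data class Recognition(val label: String, val confidence: Float)

// Pair each label with its score, drop low-confidence results, best first.
fun confidentResults(
    labels: List<String>,
    scores: FloatArray,
    threshold: Float = 0.7f
): List<Recognition> =
    labels.zip(scores.toList())
        .map { (label, score) -> Recognition(label, score) }
        .filter { it.confidence >= threshold }
        .sortedByDescending { it.confidence }

fun main() {
    val labels = listOf("apple", "banana", "lemon")
    val scores = floatArrayOf(0.03f, 0.94f, 0.02f)
    println(confidentResults(labels, scores)) // [Recognition(label=banana, confidence=0.94)]
}
```

An empty result is the app’s cue to ask the user to try another photo rather than guess.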

Workshop: Hands on Android Things

Kaan Mamikoglu, Mobile Software Engineer, MyDrive Solutions

James Coggan, Senior Mobile Engineer, MyDrive Solutions

Pascal How, Mobile Software Engineer, MyDrive Solutions

Of the 6 workshops at Droidcon Boston 2018, I was able to attend the one on Android Things, the IoT version of Android.

The workshop set up attendees with an NXP i.MX7D kit, and we flashed Android Things onto the board. The board was attached to a breadboard with a temperature sensor and LEDs. The workshop walked us through creating an app for the device that communicates with Firebase and that, using Dialogflow and Google Assistant, lets you ask “Hey Google, what’s the temperature at home?”

The workshop was hands-on, and consisted of opening the workshop apps in Android Studio and running through the following steps:

- Connect the board to WiFi using an adb command.
- Set up Firebase in Android Studio and in your project, with Realtime Database support.
- Open the Firebase console and enable anonymous login.
- Set up Firebase in the common module.
- Create a HomeInformation data class with light, temperature, and button properties.
- Observe changes to HomeInformation in the database using LiveData.
- Set up Firebase on the Things device.
- Set up Firebase in the mobile app.
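A minimal sketch of the HomeInformation model from these steps. The field types are assumptions. As far as I know, Firebase’s Realtime Database maps snapshots onto classes through a no-argument constructor and setters, so the sketch uses var properties with default values; on the JVM, defaulting every parameter makes the Kotlin compiler emit that no-argument constructor.

```kotlin
// Field types are assumptions; the workshop code may differ.
data class HomeInformation(
    var light: Boolean = false,
    var temperature: Float = 0f,
    var button: Boolean = false
)

fun main() {
    // Every parameter has a default, so a no-argument constructor exists.
    val empty = HomeInformation::class.java.getConstructor().newInstance()
    println(empty)   // HomeInformation(light=false, temperature=0.0, button=false)

    val reading = HomeInformation(temperature = 21.5f).copy(light = true)
    println(reading) // HomeInformation(light=true, temperature=21.5, button=false)
}
```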

You can find more info on the workshop at James’s GitHub.

Day 2

Keynote: Design + Develop + Test

Kelly Shuster, Android Developer, GDE, Ibotta

The second day’s keynote by Kelly Shuster was about communication between designers, developers, and testers. She pointed out that as software engineers we often get into the weeds solving problems, but we need to remember other things in order to build a successful product that people will actually use. It’s our responsibility to get good at effective cross-team communication. Kelly gave a great example of what happens when cross-team communication is missing: when she was a child actor in The Secret Garden, everyone forgot their lines in one scene. A painful experience, but a lifelong memory.

Kelly explained the typical flow of app development: design mocks -> development begins -> development finalized. There’s often another step for “developer questions”. A big problem is that you start to have a lot of these conversations, and much of the conversation is boilerplate. What if we could remove these boilerplate conversations? She came across a blog post about removing barriers from your life, and thought: what are the barriers to effective communication?

For barriers with designers, Kelly listed a number of tools that can help. One is Zeplin, which gives you details like sizes, fonts, and font sizes: a designer uploads a Sketch file to Zeplin, which parses out all the details. Another tool is a style guide developed with the design team, which serves as “boilerplate code completion” for the design world. A good design style guide has a color palette with common names, zero-state and loading-state designs, and button states. These cover 80% of boilerplate design discussions right in the style guide.

Kelly then discussed how SVGs can help minimize back-and-forth with designers, and how a designer she once worked with offered to use Android Studio to learn how to work best with Vector Asset Studio. On the flip side, Kelly pointed out that as developers we often say a lot of negative things to designers. We should instead think about what we can do for them: be a relentless advocate for your designer’s vision (within reason).

Kelly discussed some surveys she’d done, one question being “if I could do one thing to make my product better it would be…” Most answers, even from designers and QA, boiled down to “spend more time testing”. Kelly talked about testing from two perspectives: pre-development and post-development. For pre-development, she suggested tools such as Usability Hub for user testing of designs, and Marvel, which takes pictures of paper designs and turns them into a clickable prototype.

“Post”-development testing is really post-design unit testing and integration testing. Kelly told an anecdote about wanting to learn a song on the piano for 5 years and not doing so until she committed to practicing 5 minutes a day, and compared it to getting good at testing: write at least one test a week, and you’ll be writing tests quickly within a few months.

Kelly finished up with results from another survey question: “the hardest part of working with developers is…” The consistent answer: their lack of smoke testing. Kelly gave great advice such as using “Day of the Week” emulators to test across API levels. She’s found many UI bugs by doing this.

fun() Talk

Nate Ebel, Android Developer, Udacity

Nate’s talk was all about exploring Kotlin functions. He pointed out that Kotlin loves functions. You can see it in docs and examples. The standard library is full of functions, including higher-order and extension functions. Functions in Kotlin are flexible and convenient. As Android developers, they give us the freedom to re-imagine how to build apps.

Nate dove into parameter and type freedom. Kotlin allows default parameter values, which are used when an argument is omitted. They give you overloads without the verbosity of writing them, and they document sensible defaults. For Java interop, you can use @JvmOverloads, which tells the compiler to generate the overloads for you.
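A quick illustration of default values and @JvmOverloads; greet is a made-up function.

```kotlin
// Defaults replace a family of hand-written overloads. @JvmOverloads also
// generates greet(String) and greet(String, String) for Java callers.
@JvmOverloads
fun greet(name: String, greeting: String = "Hello", punctuation: String = "!"): String =
    "$greeting, $name$punctuation"

fun main() {
    println(greet("Droidcon"))            // Hello, Droidcon!
    println(greet("Boston", "Welcome"))   // Welcome, Boston!
}
```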

You can also use named arguments in Kotlin. Without names, the meaning of each argument can be unclear without looking at the source; named arguments make calls easier to understand. You can also change the order of arguments when they are named. There are some limitations; for example, once an argument is named, all subsequent arguments must be named.
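Here’s a hypothetical function where a positional call like describeRect(0, 10, 100, 50) would be hard to read, called instead with named arguments in a different order.

```kotlin
fun describeRect(left: Int, top: Int, width: Int, height: Int): String =
    "left=$left top=$top size=${width}x$height"

fun main() {
    // Names document intent, and named arguments may be given in any order.
    println(describeRect(width = 100, height = 50, left = 0, top = 10))
    // left=0 top=10 size=100x50
}
```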

You can accept a variable number of arguments using vararg. Inside the function, they are treated as an array of type T. Callers can pass any number of arguments, and a vararg is typically, but not always, the last parameter: it doesn’t have to be last if callers use named arguments or if the last parameter is a function. You can use the spread operator * to pass an existing array into a vararg.
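A sketch of vararg and the spread operator, including a vararg that isn’t last because a trailing function parameter follows it. For a vararg of Int, the parameter is an IntArray inside the function. Both functions are illustrative.

```kotlin
// 'numbers' is an IntArray inside the function.
fun sumAll(label: String, vararg numbers: Int): String = "$label: ${numbers.sum()}"

// The vararg need not be last when a trailing lambda parameter follows it.
fun <T> joinWith(vararg items: T, transform: (T) -> String): String =
    items.joinToString(", ") { transform(it) }

fun main() {
    println(sumAll("none"))                  // none: 0
    println(sumAll("three", 1, 2, 3))        // three: 6
    val existing = intArrayOf(4, 5, 6)
    println(sumAll("spread", 0, *existing))  // spread: 15
    println(joinWith(1, 2, 3) { "#$it" })    // #1, #2, #3
}
```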

Nate described Kotlin return types. If there is no return value, the default is Unit. You append the return type to the function signature with a : if there is a non-Unit return value. Single-expression functions can infer return type.

Nate then discussed generic functions, and how much of the standard library is built on generics. You declare the type parameter in angle brackets after the fun keyword.
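These return-type rules and a generic function in one place, using illustrative function names:

```kotlin
// No declared return type on a block body: the function returns Unit.
fun logMessage(message: String) {
    println("LOG: $message")
}

// Block body with an explicit non-Unit return type after the colon.
fun average(values: List<Double>): Double {
    if (values.isEmpty()) return 0.0
    return values.sum() / values.size
}

// Single-expression function: the Int return type is inferred.
fun square(x: Int) = x * x

// Generic function: the type parameter goes between 'fun' and the name.
fun <T> firstOrDefault(items: List<T>, default: T): T =
    if (items.isEmpty()) default else items[0]

fun main() {
    logMessage("starting")                       // LOG: starting
    println(average(listOf(1.0, 2.0, 3.0)))      // 2.0
    println(square(7))                           // 49
    println(firstOrDefault(emptyList(), "none")) // none
}
```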

Nate then explored how and when to create functions. He discussed top-level functions and how to rename the generated Java class for a file using @file:JvmName. He gave examples of member functions on a class or object, local functions (functions inside functions), and companion object functions. He also described function variations such as infix functions, extension functions, and higher-order functions.
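A compact, hypothetical example of several of these variations: an infix member function, a companion object function, an extension function, and a higher-order function with a local function inside it.

```kotlin
class Temperature(val celsius: Double) {
    // Infix member function: reads as "a warmerThan b".
    infix fun warmerThan(other: Temperature): Boolean = celsius > other.celsius

    companion object {
        // Companion object function: called as Temperature.fromFahrenheit(...).
        fun fromFahrenheit(f: Double): Temperature = Temperature((f - 32) * 5 / 9)
    }
}

// Extension function on a type we don't own.
fun String.initials(): String =
    split(" ").filter { it.isNotEmpty() }.joinToString("") { it.take(1) }

// Higher-order function: takes the matching logic as a parameter.
fun countMatching(words: List<String>, predicate: (String) -> Boolean): Int {
    // Local function: only visible inside countMatching.
    fun matches(word: String) = word.isNotBlank() && predicate(word)
    return words.count { matches(it) }
}

fun main() {
    println(Temperature(25.0) warmerThan Temperature.fromFahrenheit(50.0)) // true (50 F is 10 C)
    println("Nate Ebel".initials())                                        // NE
    println(countMatching(listOf("fun", "", "val", "kotlin")) { it.length == 3 }) // 2
}
```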

Nate finished up by re-imagining Android via the power of Kotlin functions. We can use fewer helper classes and less boilerplate. We can pass logic around for a try-catch block. We have libraries like Anko and Android KTX. We have DSLs like the Kotlin Gradle DSL.
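As one example of passing logic around, a hypothetical attempt helper keeps the try-catch in one place while callers supply the risky work as a lambda. The standard library’s runCatching (Kotlin 1.3+) offers a similar shape.

```kotlin
// The try-catch lives here once; callers pass the risky logic and a fallback.
inline fun <T> attempt(onError: (Exception) -> T, block: () -> T): T =
    try {
        block()
    } catch (e: Exception) {
        onError(e)
    }

fun main() {
    val ok = attempt({ -1 }) { "42".toInt() }
    val bad = attempt({ -1 }) { "forty-two".toInt() } // NumberFormatException caught
    println("$ok $bad")                               // 42 -1
}
```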

Common Poor Coding Patterns and How to Avoid Them

Alice Yuan, Software Engineer, Pinterest

Alice’s talk focused on a number of problems her team has solved in the Pinterest app. She walked through each problem in detail, and then talked about how they found a solution.

Problem #1. Views with common components. They initially chose inheritance to combine the common components, but that led to many problems in the implementation. The solution: Be deliberate with inheritance and consider composition first.
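One way to favor composition in Kotlin is class delegation with by. This sketch is illustrative rather than Pinterest’s code: a card combines small behaviors instead of inheriting them from a deep base-view hierarchy.

```kotlin
// Small, independent pieces of behavior.
interface Titled {
    fun title(): String
}

interface Followable {
    val followers: Int
    fun followLabel(): String
}

class SimpleTitle(private val text: String) : Titled {
    override fun title() = text
}

class FollowSupport(override val followers: Int) : Followable {
    override fun followLabel() = "$followers followers"
}

// Composition via delegation: the card "has" these behaviors rather than
// inheriting them, so each piece can be reused or swapped independently.
class BoardCard(title: Titled, follow: Followable) : Titled by title, Followable by follow {
    fun summary() = "${title()} (${followLabel()})"
}

fun main() {
    println(BoardCard(SimpleTitle("Recipes"), FollowSupport(12)).summary()) // Recipes (12 followers)
}
```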

Problem #2. There were so many bugs with the “follow” button in the app. They have many fragments, and were using an event bus library as a global event queue. That becomes confusing as more events and more fragments are added. The code looks simple, but it breaks due to different lifecycles and different conditions. Views require tight coupling, but an event bus decouples components, so an event bus does not make sense for this scenario; it fits better in use cases that are truly decoupled. The solution was to use the Observer pattern, reintroducing observers into the code base. The key takeaway was that an event bus is often misused due to its simplicity. Only use it where it makes sense.
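A minimal Observer sketch for follow state, with hypothetical names. Each screen registers explicitly and unregisters when it goes away, rather than listening on a global event bus.

```kotlin
class FollowState(initial: Boolean = false) {
    private val observers = mutableListOf<(Boolean) -> Unit>()

    var isFollowing: Boolean = initial
        private set

    // Registers an observer, delivers the current state immediately,
    // and returns a function that unregisters it.
    fun observe(observer: (Boolean) -> Unit): () -> Unit {
        observers += observer
        observer(isFollowing)
        return { observers -= observer }
    }

    fun toggle() {
        isFollowing = !isFollowing
        // Iterate over a copy so observers may unregister while being notified.
        observers.toList().forEach { it(isFollowing) }
    }
}

fun main() {
    val state = FollowState()
    val unregister = state.observe { println("Button shows following=$it") }
    state.toggle()   // Button shows following=true
    unregister()
    state.toggle()   // no output: the observer was removed
}
```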

Problem #3. Do we need to send events to maintain data consistency? Why do we even need to send events? They were caching models on a per-Fragment basis, which led to data inconsistencies across the app, and they had model-dependent logic in the view layer. There are many ways to introduce data inconsistency: global static variables, utils classes, and singletons. The solution: have a central place to handle storing and retrieving models using the Repository pattern. A repository checks the memory and disk caches, and makes network calls if needed for the freshest model. You can also combine the Repository pattern with RxJava, which is kind of like observables on steroids, more than just a simple listener pattern. The key takeaway: build a central way to fetch and retrieve models.
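A minimal sketch of the memory, then disk, then network lookup, with hypothetical Pin, PinDiskCache, and PinApi types standing in for real storage and HTTP code (no RxJava here).

```kotlin
data class Pin(val id: String, val title: String)

// Hypothetical data sources; real ones would wrap disk storage and an HTTP client.
interface PinDiskCache {
    fun get(id: String): Pin?
    fun put(pin: Pin)
}

interface PinApi {
    fun fetch(id: String): Pin
}

// The single place the rest of the app reads Pins from.
class PinRepository(private val disk: PinDiskCache, private val api: PinApi) {
    private val memory = mutableMapOf<String, Pin>()

    fun get(id: String, forceRefresh: Boolean = false): Pin {
        if (!forceRefresh) {
            memory[id]?.let { return it }                    // 1. memory cache
            disk.get(id)?.let { memory[id] = it; return it } // 2. disk cache
        }
        val fresh = api.fetch(id)                            // 3. network
        memory[id] = fresh
        disk.put(fresh)
        return fresh
    }
}

fun main() {
    val disk = object : PinDiskCache {
        private val store = mutableMapOf<String, Pin>()
        override fun get(id: String) = store[id]
        override fun put(pin: Pin) { store[pin.id] = pin }
    }
    var calls = 0
    val api = object : PinApi {
        override fun fetch(id: String): Pin { calls++; return Pin(id, "Pin #$id") }
    }
    val repo = PinRepository(disk, api)
    repo.get("42")
    repo.get("42")
    println("network calls: $calls") // network calls: 1
}
```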

Problem #4. Why is writing unit tests so difficult? Laziness: it’s a lot of work to write unit tests. Also, a typical fragment has too much logic, including business logic, and you really just want to unit test the business logic. Things like mocking and Robolectric can be painful to use. The solution: separate concerns using a pattern like MVVM or MVP, so that classes can communicate without knowing each other’s internals. Alice gave an example using MVP. Loose coupling is preferred here to make business logic more testable. It makes the code cleaner and easier to understand, and increases re-usability across the codebase. The key takeaway: unit testing is easier when you separate concerns. Consider MVP/MVVM/MVI. Use interfaces to abstract, and you can then easily mock those interfaces with Mockito.
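A hypothetical MVP sketch showing why interfaces make this testable: the presenter only knows a View interface, so a unit test can use a hand-written fake (or a Mockito mock) with no Android framework involved.

```kotlin
// Contract the presenter depends on; a Fragment would implement it in the app.
interface LoginView {
    fun showError(message: String)
    fun showWelcome(name: String)
}

// Business logic lives here, not in the Fragment.
class LoginPresenter(private val view: LoginView) {
    fun onSubmit(username: String) {
        val trimmed = username.trim()
        if (trimmed.isEmpty()) view.showError("Username required")
        else view.showWelcome(trimmed)
    }
}

// Hand-written fake that records what the presenter asked the view to do.
class FakeLoginView : LoginView {
    val events = mutableListOf<String>()
    override fun showError(message: String) { events += "error:$message" }
    override fun showWelcome(name: String) { events += "welcome:$name" }
}

fun main() {
    val view = FakeLoginView()
    val presenter = LoginPresenter(view)
    presenter.onSubmit("   ")
    presenter.onSubmit(" alice ")
    println(view.events) // [error:Username required, welcome:alice]
}
```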

The overall key takeaway from Alice’s talk: stay aware of whether you’re making any of these mistakes.

Lunch & a Lightning Talk: Reactive Architecture

Dan Leonardis, Director of Engineering, Viacom & LEO LLC

The second day’s lunch talk was about Reactive Architecture. Dan chose to give all examples in Java to get maximum impact.

Dan explained that Reactive Architecture is simple but not easy. The goals are to make a system responsive (with background threads), resilient (with error scenarios built-in), elastic (easy to change), and message driven (with well-defined data types). It provides an asynchronous paradigm built on streams of information.

Dan walked through a history lesson on MVC, MVP, and MVVM. MVC was never meant to be a system architecture, just a UI pattern. MVP has kind of been killed off, with three nails in the coffin: Android data binding, Rx, and ViewModel from Android Architecture Components, which helps with lifecycle issues and survives Activity recreation on rotation.

Dan then emphasized that the whole point of reactive architecture is to funnel asynchronous events into UI updates. Examples are button clicks, scrolls, and UI filters; each is an event. You flatMap them into specific event types that carry the data you need from the UI, and use merge to combine them into one stream. You translate events into actions using Transformers, which helps testability. See Dan Lew’s blog post.

The basic flow is a UI event -> transformer -> split stream into event types with publish operator in each stream, transform into action -> merge back to one stream -> end up with an observable stream of actions. What’s next is a Result, and you then use transformers to go back to the UI too.
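A library-free sketch of the event-to-action step, with sealed classes for the event and action types and a List standing in for a stream. In RxJava you would typically split with publish and ofType, compose a Transformer per type, and merge; here a when expression applies the same per-type transforms in arrival order. All type names and the page size of 20 are hypothetical.

```kotlin
sealed class UiEvent {
    data class Click(val itemId: Int) : UiEvent()
    data class Scroll(val position: Int) : UiEvent()
    data class Filter(val query: String) : UiEvent()
}

sealed class Action {
    data class LoadDetails(val itemId: Int) : Action()
    data class LoadPage(val page: Int) : Action()
    data class Search(val query: String) : Action()
}

// One transform per event type, analogous to one Transformer per stream.
val clickToAction: (UiEvent.Click) -> Action = { Action.LoadDetails(it.itemId) }
val scrollToAction: (UiEvent.Scroll) -> Action = { Action.LoadPage(it.position / 20) }
val filterToAction: (UiEvent.Filter) -> Action = { Action.Search(it.query.trim()) }

// "Split by type, transform, merge back" for a List: one stream of actions out.
fun toActions(events: List<UiEvent>): List<Action> =
    events.map { event ->
        when (event) {
            is UiEvent.Click -> clickToAction(event)
            is UiEvent.Scroll -> scrollToAction(event)
            is UiEvent.Filter -> filterToAction(event)
        }
    }

fun main() {
    val events = listOf(UiEvent.Click(7), UiEvent.Scroll(45), UiEvent.Filter(" tea "))
    println(toActions(events)) // [LoadDetails(itemId=7), LoadPage(page=2), Search(query=tea)]
}
```

Because each transform is an ordinary function, it can be unit tested on its own, which is the testability benefit Dan described.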

Dan then gave a deep dive into a simple app and walked through code examples to see a Reactive Architecture in action.

ARCore for Android Developers

Kunal Khona, Android | AR Developer, Wayfair

Kunal started with a fun virtual version of himself that introduced him on a projected device. His talk then introduced us to augmented reality and ARCore on Android, and showed some code examples written in C# with Unity.

Pokemon Go is the best example so far of AR affecting the real world. AR creates the illusion of annotations added to the real, physical world, and it lets you escape the boundaries of a 2D screen. After all, we’ve been wired for thousands of years to interact with the world in 3D.

Kunal then contrasted VR and AR. VR is a virtual environment with a virtual experience; AR is a real environment with a virtual experience. He said that in VR, the screen becomes your world, but in AR the world becomes your screen. AR uses your device as a window into the augmented world. Mobile phones are the most widely available devices, so you have to do AR on mobile if you want to hit scale.

Kunal gave some history of AR on Android. Tango used a wide-angle camera and a depth sensor. It could use motion tracking, area learning, and depth sensing to map indoor spaces with high accuracy. But it was only supported on a few phones, and Tango is dead as of March 2018.

ARCore replaces Tango. ARCore went to 1.0 stable in February 2018. It works without special hardware, with motion tracking technology using the phone camera to identify interesting points. It tracks the position of the device as it moves and builds an understanding of the real world. It’s scalable across the Android ecosystem, and currently works on a wide range of devices running Android 7.0 and above, about 100 million devices total. More phones will be supported over time. You can even run ARCore apps in the Android emulator in Android Studio 3.1 beta, with a simulated environment.

The fundamental concepts of ARCore are motion tracking, environmental understanding, and light estimation. Motion tracking creates feature points and uses them to determine changes in location. Environmental understanding begins with clusters of feature points that appear to lie on common horizontal surfaces, which are made available as planes. Light estimation gives an increased sense of realism by lighting a virtual object under the same conditions as the environment. Kunal showed a great example of a virtual lion getting scared when the lights turn off.

ARCore does have limitations. ARCore does not use a depth sensor. It has difficulty with flat surfaces without texture. And it cannot remember session information once a session is closed.

Kunal discussed using ARCore at Wayfair. How does a consumer know furniture will look good in a room? He showed an awesome demo of placing a couch in a room. A consumer can hit a cart button and purchase the couch. Kunal described many of the possible ARCore applications: shopping, games, education, entertainment, visualizers, and information/news.

The remainder of the talk was a code example using Unity to abstract away complex ARCore and OpenGL concepts. He showed motion tracking, using an ARCore.Session to track attachment points and plane detection, and placing an object with hit testing, transforming a 2D touch to a 3D position. He described using anchors to deal with the changing understanding of the environment even as the device moves around.
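The geometry behind a hit test can be sketched without Unity or ARCore: once the 2D touch point has been turned into a ray from the camera (that unprojection step is omitted here), the hit is where the ray meets a detected horizontal plane. This is an illustrative sketch, not ARCore’s implementation, which also handles plane extents and other trackables; Vec3 and hitHorizontalPlane are hypothetical names.

```kotlin
import kotlin.math.abs

data class Vec3(val x: Double, val y: Double, val z: Double) {
    operator fun plus(o: Vec3) = Vec3(x + o.x, y + o.y, z + o.z)
    operator fun times(s: Double) = Vec3(x * s, y * s, z * s)
}

// Intersects the ray origin + t * direction with the plane y = planeY.
// Returns null if the ray is parallel to the plane or the plane is behind it.
fun hitHorizontalPlane(origin: Vec3, direction: Vec3, planeY: Double): Vec3? {
    if (abs(direction.y) < 1e-9) return null
    val t = (planeY - origin.y) / direction.y
    return if (t < 0) null else origin + direction * t
}

fun main() {
    // Camera 1.5 m above the floor, looking forward and down at 45 degrees.
    val hit = hitHorizontalPlane(Vec3(0.0, 1.5, 0.0), Vec3(0.0, -1.0, -1.0), 0.0)
    println(hit) // Vec3(x=0.0, y=0.0, z=-1.5)
}
```

An anchor would then be created at the hit point so the placed object stays put as ARCore refines its understanding of the room.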

Why MVI? Model View Intent — The curious case of yet another pattern

Garima Jain, Senior Android Engineer, Fueled

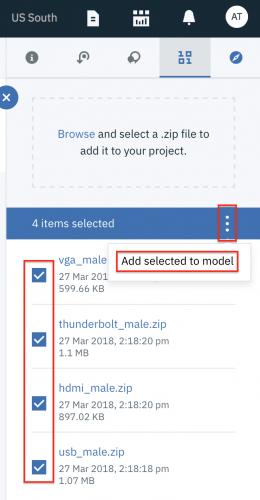

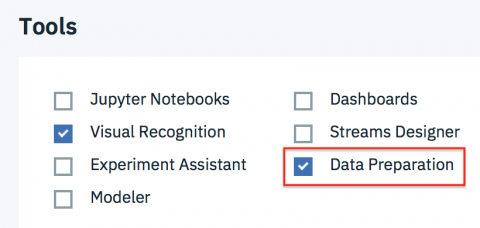

Garima gave a great talk on the Model-View-Intent (MVI) pattern. There were two parts to the talk: (1) Why MVI and (2) moving from MVP/MVVM to MVI.

First, why MVI? She defined MVI as a mapping from (Model, View, Intent) to (State, View, Intention). Add a user into the picture, and MVI becomes different from other patterns: the user interacts with the View, the intent changes the state, the new state goes to the View, and the user sees it.

The key concept is State as a single source of truth. A common example of the State problem is: what is going on with state when doing a pull to refresh – the state of a list. The state problem is a main reason people are motivated to follow MVI. First there’s a loading state, then an empty state, then pull to refresh but with the empty state still showing. The View is not updating state correctly.

Garima used an MVP diagram to represent the problem. If you misuse MVP, some of the state is in the view and some is in the presenter. Multiple inputs and outputs cause the possibility of misuses. The MVI pattern helps here. MVI has single source of state truth. This is sometimes called the Hannes model, after Hannes Dorfmann.

Part 2 of the talk discussed moving from MVP/MVVM to MVI. How to move to MVI if you have other patterns already in place. You don’t have to go all the way to MVI right away. Call it “MVPI”. Build on top of MVP or MVVM.

Garima gave a great description of data flow in the MVI pattern. From a UI event in the View, the event gets transformed to an Action sent to the Presenter, then sent to a Reducer as a Result. The reducer creates new state which gets rendered to the View. She discussed applying the pattern to the loading problem. State is an immutable object. You create a new one to show in the view after a user interaction. Now there is only one state, one source of truth for the model. Changes only occur due to actions.

![MVI data flow]()

She gave some good rules of thumb. An Action should be a plain object with a defined type (not undefined) that conveys the intention of the user. State is an immutable list or object representing the state of the View. The Reducer is a function of the previous State and the Result, and it returns the current State unchanged for an unknown Result. Use a Kotlin sealed class for the different kinds of Results.
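A minimal sketch of those rules in Kotlin, assuming a hypothetical feed screen: Results are a sealed class, State is an immutable data class updated with copy, and the Reducer is a pure function that hands back the previous State for a Result it doesn’t care about. FeedState, FeedResult, and reduceFeed are illustrative names.

```kotlin
// Immutable view state: only vals, changed by creating a copy.
data class FeedState(
    val isLoading: Boolean = false,
    val items: List<String> = emptyList(),
    val error: String? = null
)

// The different kinds of Results, modeled as a sealed class.
sealed class FeedResult {
    object Loading : FeedResult()
    data class Loaded(val items: List<String>) : FeedResult()
    data class Failed(val message: String) : FeedResult()
    // A hypothetical Result that does not affect this screen's state.
    object ScrolledToTop : FeedResult()
}

// Pure function of (previous State, Result) -> new State.
fun reduceFeed(previous: FeedState, result: FeedResult): FeedState = when (result) {
    FeedResult.Loading -> previous.copy(isLoading = true, error = null)
    is FeedResult.Loaded -> previous.copy(isLoading = false, items = result.items, error = null)
    is FeedResult.Failed -> previous.copy(isLoading = false, error = result.message)
    // Results the reducer doesn't act on leave the state unchanged.
    FeedResult.ScrolledToTop -> previous
}
```

Since FeedResult is sealed, the compiler checks that the when expression covers every kind of Result, which is one practical benefit of the sealed-class rule.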

In summary, Garima mentioned that many consider MVI to be MVP/MVVM done right, since it prevents us from misusing patterns like MVP and MVVM. She also gave some great references:

![MVI References]()

Coroutines and RxJava: An asynchronicity comparison

Manuel Vicente Vivo, Principal Associate Software Engineer, Capital One

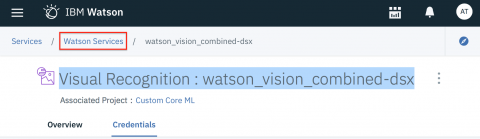

Manuel compared coroutines and RxJava because they’re both trying to solve the same problem: asynchronous programming.

He started with a coroutines recap, then compared the two libraries, and demoed a Fibonacci calculator that shows fun facts fetched from an API.

Coroutines simplify asynchronous programming: computations can be suspended without blocking a thread. Conceptually, a coroutine is similar to a thread in that it starts and finishes, but it is not bound to any particular thread.

The launch function is a coroutine builder. Its first parameter is a coroutine context, and it takes a suspending lambda as its last parameter. For example, say the user taps a button that starts a heavy operation: making a network request and updating a database with the data. The coroutine suspends while waiting for the response from the network and carries on with execution when the response comes back.
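A minimal sketch of that flow, written against the current kotlinx.coroutines API rather than the 2018 one used in the talk (CommonPool has since been replaced by Dispatchers.Default, and launch is now called on a CoroutineScope). fetchFact and simulateButtonTap are hypothetical, delay stands in for network latency, and runBlocking is used only so the sketch runs as a plain JVM program; on Android you would launch from a lifecycle-aware scope instead.

```kotlin
import kotlinx.coroutines.Dispatchers
import kotlinx.coroutines.delay
import kotlinx.coroutines.launch
import kotlinx.coroutines.runBlocking

// Hypothetical stand-in for a network request. delay() suspends the
// coroutine without blocking the thread it runs on.
suspend fun fetchFact(n: Int): String {
    delay(100)
    return "Fun fact about $n"
}

// Simulates a button tap: fetch data, then store it in a fake database.
fun simulateButtonTap(database: MutableList<String>) {
    runBlocking {
        // launch is a coroutine builder: the context comes first and the
        // suspending lambda is passed last.
        val job = launch(Dispatchers.Default) {
            val fact = fetchFact(42) // suspends until the "response" arrives
            database.add(fact)       // then carries on with execution
        }
        job.join() // wait for the launched coroutine to finish
    }
}
```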

Manuel then gave a detailed coroutine and RxJava comparison.

He showed how to cancel execution in both. In RxJava, you call disposable.dispose(). With coroutines, you take the Job returned from launch and call job.cancel(). You can combine coroutine contexts and cancel all the coroutines that share them at the same time. You can also pass a job through the parent named argument and cancel the parent.
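Both cancellation styles are shown in this minimal sketch using the current kotlinx.coroutines API, where the old parent named argument has given way to putting a Job into the context. cancelSingleJob and cancelViaParent are hypothetical helpers; passing an explicit Job to launch detaches the coroutines from the enclosing scope, so it appears here only to mirror the talk.

```kotlin
import kotlinx.coroutines.Dispatchers
import kotlinx.coroutines.Job
import kotlinx.coroutines.cancelAndJoin
import kotlinx.coroutines.delay
import kotlinx.coroutines.launch
import kotlinx.coroutines.runBlocking

// Cancelling one coroutine through the Job returned by launch.
fun cancelSingleJob(): Boolean = runBlocking {
    val job = launch { delay(10_000) } // long-running work
    job.cancelAndJoin()                // comparable to disposable.dispose()
    job.isCancelled
}

// Cancelling several coroutines at once through a shared parent Job.
fun cancelViaParent(): List<Boolean> = runBlocking {
    val parent = Job()
    // Contexts combine with `+`: each worker runs on Dispatchers.Default
    // and has `parent` as its parent Job.
    val workers = List(3) {
        launch(parent + Dispatchers.Default) { delay(10_000) }
    }
    parent.cancelAndJoin() // cancels every worker, then waits for them
    workers.map { it.isCancelled }
}
```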

Manuel defined Channels as the coroutine equivalent of an RxJava Observable or a Reactive Streams Publisher. Channels can be shared between different coroutines; by default, a channel has no buffer (a rendezvous channel). Where RxJava gives you values when onNext is called, with channels you use send and receive, both suspending functions, to pass elements through the channel. You can also close channels and offer elements to them. produce is another coroutine builder, which creates a coroutine with a Channel built in; it’s useful for creating custom operators.
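Here is a minimal sketch of both ideas with the current kotlinx.coroutines API: a plain Channel passing three values between coroutines, and a produce-based Fibonacci source in the spirit of Manuel’s demo (this is not his code). produce is marked as experimental API, hence the opt-in; channelBasics, fibonacci, and firstFibonacci are hypothetical names.

```kotlin
import kotlinx.coroutines.CoroutineScope
import kotlinx.coroutines.ExperimentalCoroutinesApi
import kotlinx.coroutines.channels.Channel
import kotlinx.coroutines.channels.ReceiveChannel
import kotlinx.coroutines.channels.produce
import kotlinx.coroutines.launch
import kotlinx.coroutines.runBlocking

// A plain Channel shared by two coroutines. send and receive both suspend.
fun channelBasics(): List<Int> = runBlocking {
    val channel = Channel<Int>() // default: rendezvous channel, no buffer
    launch {
        for (i in 1..3) channel.send(i) // suspends until a receiver takes it
        channel.close()                 // no more elements will be sent
    }
    val received = mutableListOf<Int>()
    for (value in channel) received += value // stops once the channel is closed
    received
}

// produce: a coroutine with a Channel built in, here emitting an
// endless Fibonacci sequence.
@OptIn(ExperimentalCoroutinesApi::class)
fun CoroutineScope.fibonacci(): ReceiveChannel<Long> = produce {
    var a = 0L
    var b = 1L
    while (true) {
        send(a)
        val next = a + b
        a = b
        b = next
    }
}

fun firstFibonacci(count: Int): List<Long> = runBlocking {
    val numbers = fibonacci()
    val result = mutableListOf<Long>()
    repeat(count) { result += numbers.receive() }
    numbers.cancel() // stop the endless producer so runBlocking can return
    result
}
```

Cancelling the receiving side is what lets firstFibonacci return; without it, the produce coroutine would stay suspended in send forever and runBlocking would keep waiting for it.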

Channels have to deal with race conditions: which coroutine gets the values first? In RxJava, you use Subjects to handle this issue. With coroutines, a BroadcastChannel can be used to send the same value to all coroutines, and a ConflatedBroadcastChannel behaves similarly to an RxJava BehaviorSubject, giving each new subscriber the most recently sent value.

What about RxJava-style back-pressure? Coroutines handle it by default, since send and receive are suspending functions: a fast sender simply suspends until there is room for its element.
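To see back-pressure at work, the sketch below pairs a fast producer with a slow consumer on a channel with room for one buffered element. backPressureLog is a hypothetical helper; both coroutines run on runBlocking’s single thread, so the shared log needs no locking. Because the producer suspends in send whenever the buffer is full, at most two elements can be sent but not yet processed at any moment: one in the buffer and one in the consumer’s hands.

```kotlin
import kotlinx.coroutines.channels.Channel
import kotlinx.coroutines.delay
import kotlinx.coroutines.launch
import kotlinx.coroutines.runBlocking

// Records the order of events when a fast producer meets a slow consumer.
fun backPressureLog(): List<String> = runBlocking {
    val log = mutableListOf<String>()
    val channel = Channel<Int>(capacity = 1) // room for one buffered element

    // Fast producer: send suspends whenever the buffer is full.
    launch {
        for (i in 1..3) {
            channel.send(i)
            log += "sent $i"
        }
        channel.close()
    }

    // Slow consumer: takes 10 ms to process each element.
    for (value in channel) {
        delay(10)
        log += "received $value"
    }
    log
}
```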

You can use publish to get “cold observable” behavior, but it lives in the interop library, which bridges coroutines and RxJava. Another use case for the interop library is calling coroutines from Java.

An actor is a coroutine with a channel built in, but it’s the opposite of produce: rather than sending elements out, it receives them. Think of it as a mailbox that receives and processes different events.
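A minimal mailbox-style actor, assuming a hypothetical counter: one coroutine owns the state and a channel, and other coroutines talk to it only by sending messages. kotlinx.coroutines also ships an actor builder, currently marked as obsolete API, so this sketch wires the same idea up by hand with a Channel and launch; CounterMsg, counterActor, and countTo are illustrative names.

```kotlin
import kotlinx.coroutines.CompletableDeferred
import kotlinx.coroutines.CoroutineScope
import kotlinx.coroutines.channels.Channel
import kotlinx.coroutines.channels.SendChannel
import kotlinx.coroutines.launch
import kotlinx.coroutines.runBlocking

// Messages the hypothetical counter actor understands.
sealed class CounterMsg {
    object Increment : CounterMsg()
    class Get(val reply: CompletableDeferred<Int>) : CounterMsg()
}

// A hand-rolled actor: a coroutine that owns private state plus a channel
// (its mailbox) and processes one message at a time.
fun CoroutineScope.counterActor(): SendChannel<CounterMsg> {
    val mailbox = Channel<CounterMsg>(Channel.UNLIMITED)
    launch {
        var count = 0 // only touched inside this coroutine, so no locking
        for (msg in mailbox) {
            when (msg) {
                CounterMsg.Increment -> count++
                is CounterMsg.Get -> msg.reply.complete(count)
            }
        }
    }
    return mailbox
}

fun countTo(n: Int): Int = runBlocking {
    val counter = counterActor()
    repeat(n) { counter.send(CounterMsg.Increment) }
    val reply = CompletableDeferred<Int>()
    counter.send(CounterMsg.Get(reply))
    val result = reply.await()
    counter.close() // lets the actor's loop finish
    result
}
```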

As for equivalents of RxJava operators, some are already built into Kotlin’s collection functions, others are easy to implement, and some, such as zip, are more work.
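The sketch below shows both ends of that spectrum: RxJava-style filter and map come straight from Kotlin’s Sequence functions, while zipping two channels needs a small hand-written operator built on produce. evenSquares, zipChannels, and zipExample are hypothetical names, and zipChannels is one possible implementation, not a library API.

```kotlin
import kotlinx.coroutines.CoroutineScope
import kotlinx.coroutines.ExperimentalCoroutinesApi
import kotlinx.coroutines.channels.ReceiveChannel
import kotlinx.coroutines.channels.produce
import kotlinx.coroutines.runBlocking

// Built in: Kotlin's Sequence functions already cover filter and map.
fun evenSquares(limit: Int): List<Int> =
    (1..limit).asSequence()
        .filter { it % 2 == 0 } // like Rx filter
        .map { it * it }        // like Rx map
        .toList()

// More work: a hand-written zip for two channels. It pairs elements until
// either source runs out, then cancels both sources.
@OptIn(ExperimentalCoroutinesApi::class)
fun <A, B> CoroutineScope.zipChannels(
    a: ReceiveChannel<A>,
    b: ReceiveChannel<B>
): ReceiveChannel<Pair<A, B>> = produce {
    val itA = a.iterator()
    val itB = b.iterator()
    while (itA.hasNext() && itB.hasNext()) {
        send(itA.next() to itB.next())
    }
    a.cancel()
    b.cancel()
}

@OptIn(ExperimentalCoroutinesApi::class)
fun zipExample(): List<Pair<Int, String>> = runBlocking {
    val numbers = produce<Int> { for (i in 1..3) send(i) }
    val letters = produce<String> { for (s in listOf("a", "b", "c", "d")) send(s) }
    val pairs = mutableListOf<Pair<Int, String>>()
    for (pair in zipChannels(numbers, letters)) pairs += pair
    pairs
}
```

Cancelling the leftover source matters: without it, the letters producer would stay suspended on its fourth send and runBlocking would never return.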

For threading in RxJava, you use observeOn and subscribeOn. In coroutines, threading is handled by the coroutine context: CommonPool, UI (on Android), and Unconfined are examples of contexts, and you can create your own using ThreadPoolDispatcher.
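In the current kotlinx.coroutines API, CommonPool, UI, and Unconfined correspond to Dispatchers.Default, Dispatchers.Main, and Dispatchers.Unconfined, and a custom dispatcher can be created from any Java ExecutorService with asCoroutineDispatcher. The hypothetical threadNames function below switches threads with withContext, which plays roughly the role of subscribeOn/observeOn; the thread name “my-worker” is an arbitrary choice.

```kotlin
import kotlinx.coroutines.Dispatchers
import kotlinx.coroutines.asCoroutineDispatcher
import kotlinx.coroutines.runBlocking
import kotlinx.coroutines.withContext
import java.util.concurrent.Executors

// Returns the names of the threads that each block of work ran on.
fun threadNames(): List<String> {
    // A custom single-thread dispatcher, the modern counterpart of
    // creating your own ThreadPoolDispatcher.
    val executor = Executors.newSingleThreadExecutor { r -> Thread(r, "my-worker") }
    // `use` closes the dispatcher (and shuts down its executor) afterwards.
    return executor.asCoroutineDispatcher().use { myDispatcher ->
        runBlocking {
            val names = mutableListOf<String>()
            // Each withContext call runs its block on the given dispatcher,
            // then returns to the caller's context.
            names += withContext(Dispatchers.Default) { Thread.currentThread().name }
            names += withContext(myDispatcher) { Thread.currentThread().name }
            names
        }
    }
}
```

Closing the custom dispatcher matters: a dispatcher built from an executor owns real threads, and leaving it open would keep them alive.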

Manuel finished up with his example application, a math app built with the MVI architecture. He has three versions of the project: one using coroutines, one using RxJava, and one using interop between the two.

![Coroutine architecture]()

The project uses the Android Architecture Component ViewModel to survive configuration changes, and can be found on GitHub.

Where to go from here?

You can find more info about the Droidcon series of conferences at the official site.

The full agenda for Droidcon Boston 2018, including sessions not covered in this report, can be found here.

The videos from the most recent Droidcon NYC can be found here.

The videos and slides from Droidcon Boston 2017 are here.

We’ll add a link to the videos from Droidcon Boston 2018 when they’re available.

Feel free to share your feedback, findings or ask any questions in the comments below or in the forums. I hope you enjoyed this summary of Droidcon Boston 2018! :]

The post Droidcon Boston 2018 Conference Report appeared first on Ray Wenderlich.