Let’s face it – we’ve all written code that just doesn’t work correctly, and debugging can be hard. It’s even more difficult when you’re talking to other systems over a network.

Fortunately, Charles Proxy can make network debugging much easier.

Charles Proxy sits between your app and the Internet. All networking requests and responses will be passed through Charles Proxy, so you’ll be able to inspect and even change data midstream to test how your app responds.

You’ll get hands-on experience with this (and more!) in this Charles Proxy tutorial.

Getting Started

You first need to download the latest version of Charles Proxy (v4.1.1 at the time of this writing). Double-click the dmg file and drag the Charles icon to your Applications folder to install it.

Charles Proxy isn’t free, but there’s a free 30-day trial. In trial mode, Charles runs for only 30 minutes at a time, so you may need to restart it during this Charles Proxy tutorial.

Note: Charles is a Java-based app and supports macOS, Windows and Linux. This Charles Proxy tutorial is for macOS specifically, and some things may be different on other platforms.

Launch Charles, and it will ask for permission to automatically configure your network settings.

Click Grant Privileges and enter your password if prompted. Charles starts recording network events as soon as it launches. You should already see events popping into the left pane.

Note: If you don’t see events, you may not have granted permissions, or you may already have another proxy set up. See Charles’ FAQ page for troubleshooting help.

The user interface is easy to understand, even without much experience. However, many goodies are hidden behind buttons and menus, and the toolbar has a few items you should know about:

- The first “broom” button clears the current session of all recorded activity.

- The second “record/pause” button will be red when Charles is recording events, and gray when stopped.

- The middle buttons from the “turtle” to the “check mark” provide access to commonly used actions, including throttling, breakpoints and request creation. Hover your mouse over each to see a short description.

- The last two buttons provide access to commonly used tools and settings.

For now, stop recording by clicking the red record/pause button.

The left pane can be toggled between Structure and Sequence views. When Structure is selected, all activity is grouped by site address. You can see the individual requests by clicking the arrow next to a site.

Select Sequence to see all events in a continuous list sorted by time. You’ll likely spend most of your time in this screen when debugging your own apps.

Charles merges the request and response into a single screen by default. However, I recommend you split them into separate events to provide greater clarity.

Click Charles\Preferences and select the Viewers tab; uncheck Combine request and response; and press OK. You may need to restart Charles for the change to take effect.

Try poking around the user interface and looking at events. You’ll notice one peculiar thing: you can’t see most details for HTTPS events!

SSL/TLS encrypts sensitive request and response information. You might think this makes Charles pointless for all HTTPS events, right? Nope! Charles has a sneaky way of getting around encryption that you’ll learn soon.

More About Proxies

You may be wondering, “How does Charles do its magic?”

Charles is a proxy server, which means it sits between your app and your computer’s network connections. When Charles automatically configured your network settings, it changed your network configuration to route all traffic through it. This allows Charles to inspect all network events to and from your computer.

Proxy servers are in a position of great power, but this also implies the potential for abuse. This is why SSL is so important: data encryption prevents proxy servers and other middleware from eavesdropping on sensitive information.

In our case, however, we want Charles to snoop on our SSL messages to let us debug them.

SSL/TLS encrypts messages using certificates generated by trusted third parties called “certificate issuers.”

Charles can also generate its own self-signed certificate, which you can install on your Mac and iOS devices for SSL/TLS encryption. Since this certificate isn’t issued by a trusted certificate issuer, you’ll need to tell your devices to explicitly trust it. Once installed and trusted, Charles will be able to decrypt SSL events!

When hackers use middleware to snoop on network communications, it’s called a “man-in-the-middle” attack. In general, you DON’T want to trust just any random certificate, or you can compromise your network security!

There are some cases where Charles’ sneaky man-in-the-middle strategy won’t work. For example, some apps use “SSL pinning” for extra security. SSL pinning means the app has a copy of the SSL certificate’s public key, and it uses this to verify network connections before communicating. Since Charles’ key wouldn’t match, the app would reject the communication.
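To make the pinning idea concrete, here is a minimal, platform-neutral sketch in Swift. It assumes the app ships the server’s public key as raw bytes and simply compares it with the key the server presents; the names `PinnedKeyChecker` and `shouldTrust(serverPublicKey:)` are hypothetical. A real iOS implementation would typically pull the key out of the server trust inside a `URLSessionDelegate` authentication challenge, and many implementations pin a hash of the key rather than the key itself.

```swift
/// Hypothetical helper illustrating public-key pinning: the app trusts a
/// connection only if the server's public key matches one it shipped with.
struct PinnedKeyChecker {
    /// Public keys bundled with the app (raw bytes, an assumption for this sketch).
    let pinnedKeys: Set<[UInt8]>

    /// Returns true only when the presented key exactly matches a pinned key.
    func shouldTrust(serverPublicKey: [UInt8]) -> Bool {
        return pinnedKeys.contains(serverPublicKey)
    }
}

// Placeholder byte values standing in for real DER-encoded keys.
let serverKey: [UInt8] = [0x30, 0x82, 0x01, 0x0A]
let charlesKey: [UInt8] = [0x30, 0x82, 0x02, 0xFF]

let checker = PinnedKeyChecker(pinnedKeys: [serverKey])
print(checker.shouldTrust(serverPublicKey: serverKey))   // true
print(checker.shouldTrust(serverPublicKey: charlesKey))  // false: Charles' key doesn't match
```

Because Charles presents its own certificate, its key fails this comparison, which is exactly why a pinned app refuses to talk through the proxy.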

In addition to logging events, you can also use Charles to modify data on the fly, record it for later review and even simulate bad network connections. Charles is really powerful!

Charles Proxy & Your iOS Device

It’s simple to set up Charles to proxy traffic from any computer or device on your network, including your iOS device.

First, turn off macOS proxying in Charles by clicking Proxy (drop-down menu)\macOS Proxy to uncheck it. This way, you’ll only see traffic from your iOS device.

Next, click Proxy\Proxy Settings, click the Proxies tab and make note of the port number, which by default should be 8888.

Then, click Help\Local IP Address and make note of your computer’s IP address.

Now grab your iOS device. Open Settings, tap Wi-Fi and verify you’re connected to the same network as your computer. Then tap the ⓘ button next to your Wi-Fi network. Scroll down to the HTTP Proxy section and tap Manual.

Enter your Mac’s IP address for Server and the Charles HTTP Proxy port number for Port. Tap the back button, or press the Home button, and changes will be saved automatically.

If you previously stopped recording in Charles, click the record/pause button now to start recording again.

You should get a pop-up warning from Charles on your Mac asking to allow your iOS device to connect. Click Allow. If you don’t see this immediately, that’s okay. It may take a minute or two for it to show up.

You should now start to see activity from your device in Charles!

Next, still on your iOS device, open Safari and navigate to http://www.charlesproxy.com/getssl.

A window should pop up asking you to install a Profile/Certificate. You should see a self-signed Charles certificate in the details. Tap Install, then tap Install again after the warning appears, and then tap Install one more time. Finally, tap Done.

Apple really wants to make sure you want to install this! :] Again, don’t install just any random certificate, or you may compromise your network security! At the end of this Charles Proxy tutorial, you’ll also remove this certificate.

Snooping on Someone Else’s App

If you are like most developers, you’re curious about how things work. Charles enables this curiosity by giving you tools to inspect any app’s communication — even if it’s not your app.

Go to the App Store on your device and find and download Weather Underground. This free app is available in most countries. If it’s not available, or you want to try something else, feel free to use a different app.

You’ll notice a flurry of activity in Charles while you’re downloading Weather Underground. The App Store is pretty chatty!

Once the app is installed, launch the app and click the broom icon in Charles to clear recent activity.

Enter the zip code 90210 and select Beverly Hills as your location in the app. If you used your current location instead, the URL the app fetches could change as your location changes, which might make some later steps in this Charles Proxy tutorial harder to follow.

There are tons of sites listed in the Structure tab! This is a list of all activity from your iOS device, not just the Weather Underground app.

Switch to the Sequence tab and enter “wund” in the filter box to show only weather traffic.

You should now see just a few requests to api.wunderground.com. Click one of them.

The Overview section shows some request details, but not much. Likewise, you won’t see many details in the Request or Response tabs yet. The Overview gives the reason: “SSL Proxying not enabled for this host: enable in Proxy Settings, SSL locations.” You need to enable this.

Click on Proxy\SSL Proxying Settings. Click Add; enter api.wunderground.com for the Host; leave the Port empty; and press OK to dismiss the window.

Back in the Wunderground app, pull down to refresh and refetch data. If the app doesn’t refresh, you might need to kill it from the multitasking view and try again.

Huzzah! Unencrypted requests! Look for one of the requests with a URL containing forecast10day. This contains the payload that’s used to populate the weather screen.

Let’s have some fun and change the data before the app gets it. Can you get the app to break or act funny?

Right-click the request within the Sequence list, and click the Breakpoints item in the pop-up list. Now each time a request is made with this URL, Charles will pause and let you edit both the request and response.

Again, pull down to refresh the app.

A new tab titled Breakpoints should pop up with the outgoing request. Simply click Execute without modifying anything. A moment later, the Breakpoints tab should reappear with the response.

Click on the Edit Response tab near the top. At the bottom, select JSON text. Scroll down and find temperature and change its value to something insane like 9800.

Note: If you take too long editing the request or response, the app may silently time out and never display anything. If the edited temperature doesn’t appear, try again a little quicker.

9800°F is crazy hot out! Seems like Wunderground can’t show temperatures over 1000°. I guess the app will never show forecasts for the surface of the sun. That’s a definite one-star rating. ;]
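A breakpoint edit like this is a cheap way to find out how your own app copes with values it never expected. As a hedged sketch, assuming a hypothetical payload with a single top-level `temperature` field (the real Weather Underground response is structured differently), you could decode the value and reject anything outside a plausible range instead of trying to display it. The type names and the -150...150 °F range are assumptions for illustration.

```swift
import Foundation

/// Hypothetical, simplified forecast payload; not the real Weather Underground schema.
struct Forecast: Decodable {
    let temperature: Double
}

enum ForecastError: Error {
    case implausibleTemperature(Double)
}

/// Decodes a forecast and rejects temperatures outside an assumed plausible range (°F).
func decodeForecast(from data: Data,
                    plausibleRange: ClosedRange<Double> = -150...150) throws -> Forecast {
    let forecast = try JSONDecoder().decode(Forecast.self, from: data)
    guard plausibleRange.contains(forecast.temperature) else {
        throw ForecastError.implausibleTemperature(forecast.temperature)
    }
    return forecast
}

let normal = Data(#"{"temperature": 72}"#.utf8)
let edited = Data(#"{"temperature": 9800}"#.utf8)  // the value typed in at the breakpoint

do {
    let forecast = try decodeForecast(from: normal)
    print(forecast.temperature)  // 72.0
    _ = try decodeForecast(from: edited)
} catch ForecastError.implausibleTemperature(let value) {
    print("Rejected implausible temperature: \(value)")
} catch {
    print("Unexpected error: \(error)")
}
```

Throwing a dedicated error keeps the decision in one place, so the UI can show a fallback message rather than a nonsensical number.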

Delete the breakpoint you set by going to Proxy\Breakpoint Settings.

Uncheck the entry for api.wunderground.com to temporarily disable it, or highlight the row and click Remove to delete it. Pull down to refresh, and the temperature should return to normal.

Next, click the Turtle icon to start throttling and simulate a slow network. Click Proxy\Throttle Settings to see available options. The default is 56 kbps, which is pretty darn slow. You can also tweak settings here to simulate data loss, reliability issues and high latency.

Try refreshing the app, zooming the map and/or searching for another location. Painfully slow, right?
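To get a feel for why 56 kbps is so painful, here is some back-of-the-envelope arithmetic as a small Swift function. It assumes 1 kbps = 1,000 bits per second and ignores latency, packet loss and protocol overhead, so real transfers under Charles’ throttling will be slower still; `transferTime(bytes:kbps:)` is a hypothetical helper, not part of Charles.

```swift
/// Estimated seconds to transfer `bytes` over a link of `kbps` kilobits per second.
/// Assumes 1 kbps = 1,000 bits/s and ignores latency and protocol overhead.
func transferTime(bytes: Int, kbps: Double) -> Double {
    let bits = Double(bytes) * 8
    return bits / (kbps * 1_000)
}

// A 7,000-byte response is 56,000 bits, which takes one second at 56,000 bits/s.
print(transferTime(bytes: 7_000, kbps: 56))    // 1.0
// A 512,000-byte payload takes more than a minute at the default throttle.
print(transferTime(bytes: 512_000, kbps: 56))
```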

It’s a good idea to test your own app under poor network conditions. Imagine your users on a subway or entering an elevator. You don’t want your app to lose data, or worse, crash in these circumstances.
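When you test under throttling, watch two things: how long requests are allowed to wait, and what the app does when they fail. As a minimal sketch, you might configure a shorter request timeout and treat transient network errors differently from everything else. The 15-second timeout, the choice of which `URLError` codes count as retryable, and the names `makeSession()` and `isRetryable(_:)` are all assumptions for illustration.

```swift
import Foundation
#if canImport(FoundationNetworking)
import FoundationNetworking  // URLSession lives here on Linux
#endif

/// Builds a session that gives up on a stalled request after 15 seconds (an assumed value).
func makeSession() -> URLSession {
    let configuration = URLSessionConfiguration.default
    configuration.timeoutIntervalForRequest = 15
    return URLSession(configuration: configuration)
}

/// Returns true for errors that are likely transient on a poor connection,
/// so the app can retry or show a "try again" message instead of failing hard.
func isRetryable(_ error: Error) -> Bool {
    guard let urlError = error as? URLError else { return false }
    switch urlError.code {
    case .timedOut, .networkConnectionLost, .notConnectedToInternet:
        return true
    default:
        return false
    }
}

print(isRetryable(URLError(.timedOut)))           // true
print(isRetryable(URLError(.badServerResponse)))  // false
```

Throttling in Charles is a convenient way to actually trigger the `.timedOut` path and confirm the app recovers without losing data.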

Apple’s Network Link Conditioner provides similar throttling capabilities, yet Charles allows for much finer control over network settings. For example, you can apply throttling to only specific URLs to simulate just your servers responding slowly instead of the entire connection.

Remember to turn off throttling when you’re done with it. There’s nothing worse than spending an hour debugging, only to find you never turned off throttling!

Troubleshooting Your Own Apps

Charles Proxy is especially great for debugging and testing your own apps. For example, you can check server responses to ensure you have JSON keys defined correctly and expected data types are returned for all fields. You can even use throttling to simulate poor networks and verify your app’s timeout and error-handling logic.

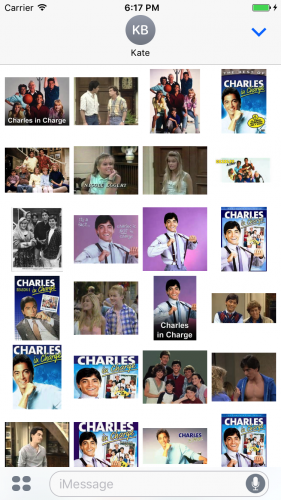

You’ll try this out using an iMessage app called “Charles in Charge” that I created for this tutorial.

If you’re a child of the ’80s, you may know the popular Charles in Charge comedy starring Scott Baio. The “Charles in Charge” iMessage app uses Microsoft Bing’s Image Search API to provide images of characters that you can send within your iMessages.

You’ll first need to get a free Bing Image Search API key to configure the demo app. Start by creating a Microsoft Cognitive Services account from here. Check your e-mail, and click the verification link to complete your account setup. This is required to generate API keys.

After signing up, click the link for either Get Started for Free or Subscribe to new free trials on your account page (either one may show depending on how you get to the page); select the checkbox for Bing Search – Free; click I agree next to the terms and conditions; and finally, click Subscribe.

After you complete the signup, you’ll get two access keys. Click copy next to either one to copy it to your clipboard. You’ll need it soon.

Next, download Charles in Charge from here and then open CharlesInCharge.xcodeproj in Xcode.

Open the MessagesExtension group, and you’ll see a few Swift files.

CharlesInChargeService manages the calls to Bing to search for images and then uses ImageDownloadService to download each image for use in the app.

In the findAndDownloadImages(completion:) method of the CharlesInChargeService class, you’ll see that the subscriptionKey argument passed to the BingImageSearchService initializer is an empty string.

Paste your access key from the Microsoft Cognitive Services portal here.
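The Bing Search APIs in Cognitive Services accept the access key in an `Ocp-Apim-Subscription-Key` HTTP header, and that header is what you’ll see on the request in Charles once SSL proxying is enabled for it. The sketch below is an assumption about how such a request could be built, not a copy of `BingImageSearchService`: the endpoint is passed in as a placeholder rather than hard-coded, and `makeImageSearchRequest(endpoint:query:subscriptionKey:)` is a hypothetical name.

```swift
import Foundation
#if canImport(FoundationNetworking)
import FoundationNetworking  // URLRequest lives here on Linux
#endif

/// Hypothetical helper: builds an image-search request with the search text in
/// the `q` query parameter and the subscription key in a request header.
/// The demo app's BingImageSearchService may build its request differently.
func makeImageSearchRequest(endpoint: URL, query: String, subscriptionKey: String) -> URLRequest? {
    guard var components = URLComponents(url: endpoint, resolvingAgainstBaseURL: false) else {
        return nil
    }
    components.queryItems = [URLQueryItem(name: "q", value: query)]
    guard let url = components.url else { return nil }

    var request = URLRequest(url: url)
    request.setValue(subscriptionKey, forHTTPHeaderField: "Ocp-Apim-Subscription-Key")
    return request
}

// Placeholder endpoint and key; substitute the real endpoint and your own key.
let endpoint = URL(string: "https://example.com/images/search")!
let request = makeImageSearchRequest(endpoint: endpoint,
                                     query: "Charles in Charge",
                                     subscriptionKey: "YOUR_ACCESS_KEY")
print(request?.url?.absoluteString ?? "invalid URL")
```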

Build and run the app within any iPhone Simulator and try it out.

Note: If it’s not already selected, choose the MessagesExtension scheme to build.

In Charles, click Proxy and select macOS Proxy to turn it back on (if it doesn’t show a checkmark already).

Then, click Proxy\SSL Proxying Settings and add api.cognitive.microsoft.com to the list.

You next need to install the Charles Proxy SSL certificate to allow proxying SSL requests in the Simulator. Before you do, quit the Simulator app. Then in Charles, click Help\SSL Proxying\Install Charles Root Certificate in iOS Simulators.

Back in Xcode, build and run the project in the Simulator. When iMessage launches, find Charles in Charge and select it. Then, wait for the images to load… but they never do! What’s going on here!?

In the console, you’ll see:

Bing search result count: 0

It appears the app isn’t getting search results, or there’s a problem mapping the data.

First, verify whether you got data back from the API.

In Charles, enter “cognitive” in the filter box to make it easier to find the Bing Image Search request. Click the request in the list, select the Response tab, and choose JSON text at the bottom. Look for the value entry in the JSON, and you’ll see that search results were indeed returned.

So, something strange must be happening within the BingImageSearchService.

Open this class in Xcode and look for where the search results are mapped from JSON to a SearchResult struct:

```swift
guard let title = hit["name"] as? String,
      let fullSizeUrlString = hit["contenturl"] as? String,
      let fullSizeUrl = URL(string: fullSizeUrlString),
      let thumbnailUrlString = hit["thumbnailurl"] as? String,
      let thumbnailUrl = URL(string: thumbnailUrlString),
      let thumbnailSizes = hit["thumbnail"] as? [String: Any],
      let thumbnailWidth = thumbnailSizes["width"] as? Float,
      let thumbnailHeight = thumbnailSizes["height"] as? Float else {
    return nil
}
```

Ah ha! The SearchResult will be nil if any of the keys aren’t found. Compare each of these keys against the response data in Charles. Look closely: case matters.

If you have eagle eyes, you’ll see that both contentUrl and thumbnailUrl don’t have the capital U in the mapping code. Fix these keys and then build and run the app again.
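One way to make this class of bug easier to spot is to decode with `Codable` instead of casting dictionary values. The sketch below is an alternative, not the demo app’s code: the type names `ImageSearchResponse`, `ImageHit` and `Thumbnail` are hypothetical, and only the keys already discussed (`value`, `name`, `contentUrl`, `thumbnailUrl`, `thumbnail.width`, `thumbnail.height`) are modeled. When a key is missing or misspelled, `JSONDecoder` throws a `DecodingError.keyNotFound` that names the key, rather than silently producing `nil`.

```swift
import Foundation

/// Hypothetical Codable model for the parts of a Bing image search response used here.
struct ImageSearchResponse: Decodable {
    let value: [ImageHit]
}

struct ImageHit: Decodable {
    let name: String
    let contentUrl: URL     // property names double as JSON keys, so case must match
    let thumbnailUrl: URL
    let thumbnail: Thumbnail
}

struct Thumbnail: Decodable {
    let width: Float
    let height: Float
}

// Made-up sample data shaped like the keys above.
let json = Data("""
{"value": [{"name": "Charles",
            "contentUrl": "https://example.com/full.jpg",
            "thumbnailUrl": "https://example.com/thumb.jpg",
            "thumbnail": {"width": 300, "height": 200}}]}
""".utf8)

do {
    let response = try JSONDecoder().decode(ImageSearchResponse.self, from: json)
    print("Bing search result count: \(response.value.count)")  // Bing search result count: 1
} catch DecodingError.keyNotFound(let key, _) {
    print("Missing key: \(key.stringValue)")
} catch {
    print("Decoding failed: \(error)")
}
```

With this approach, a typo like `contenturl` surfaces immediately as a decoding error that names the offending key, which you can then check against the response in Charles.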

Success! Charles is now in charge!

Remove Charles’ Certificate

In the past, Charles used a single shared certificate on every device it was installed on. Fortunately, Charles now creates unique certificates. This significantly reduces the chance of a man-in-the-middle attack based on this certificate, but such an attack is still technically possible. Therefore, always remember to remove Charles’ certificates when you’re done with them.

First, remove the certificate(s) from macOS. Open the Keychain Access application located in Applications\Utilities. In the search box, type Charles Proxy and delete all the certificates the search finds. There is most likely only one to delete. Close the application when you’re done.

Next remove the certificates from your iOS device. Open the Settings app and navigate to General\Profiles & Device Management. Under Configuration Profiles you should see one or more entries for Charles Proxy. Tap on one and then tap Delete Profile. Repeat this for each Charles Proxy certificate.

Profiles & Device Management isn’t available in the iOS Simulator. To remove the Charles Proxy certificates, reset the simulator by clicking the Simulator menu and then Reset Content and Settings.

Where to Go From Here?

We hope you enjoyed this Charles Proxy tutorial! Charles Proxy has a ton more features that aren’t covered in this tutorial, and there are many more details for those that we did cover. Check out Charles’ website for more documentation. The more you use Charles, the more features you’ll discover. You can download the final Charles in Charge app with the corrected JSON keys here.

You can also read more about SSL/TLS on Wikipedia at https://en.wikipedia.org/wiki/Transport_Layer_Security. Apple will most likely eventually require all apps to use secure network connections, so you should adopt HTTPS soon if you haven’t already.

Also, check out Paw for macOS. It’s a great companion to Charles for helping compose new API requests and for testing parameters.

Do you know of any other useful apps for debugging networking? Or do you have any debugging battle stories? Join the discussion below to share them!

The post Charles Proxy Tutorial for iOS appeared first on Ray Wenderlich.

Good news – we’ve been hard at work updating our popular book

For the past ten months, we’ve been working on a tremendously exciting new book:
