![mapkit]()

Learn how to add overlay views using MapKit!

Update note: This tutorial has been updated for Xcode 9, iOS 11 and Swift 4 by Owen Brown. The original tutorial was written by Ray Wenderlich.

Apple makes it very easy to add a map to your app using MapKit, but this alone isn’t very engaging. Fortunately, you can make maps much more appealing using custom overlay views.

In this MapKit tutorial, you’ll create an app to showcase Six Flags Magic Mountain. For you fast-ride thrill seekers out there, this app’s for you. ;]

By the time you’re done, you’ll have an interactive park map that shows attraction locations, ride routes and character locations.

Getting Started

Download the starter project here. This starter includes navigation, but it doesn’t have any maps yet.

Open the starter project in Xcode, then build and run. You’ll see just a blank view. You’ll soon add a map and selectable overlay types here.

![mapkit]()

Adding a MapView with MapKit

Open Main.storyboard and select the Park Map View Controller scene. Search for map in the Object Library and then drag and drop a Map View onto this scene. Position it below the navigation bar and make it fill the rest of the view.

![mapkit]()

Next, select the Add New Constraints button, add four constraints with constant 0 and click Add 4 Constraints.

![mapkit]()

Wiring Up the MapView

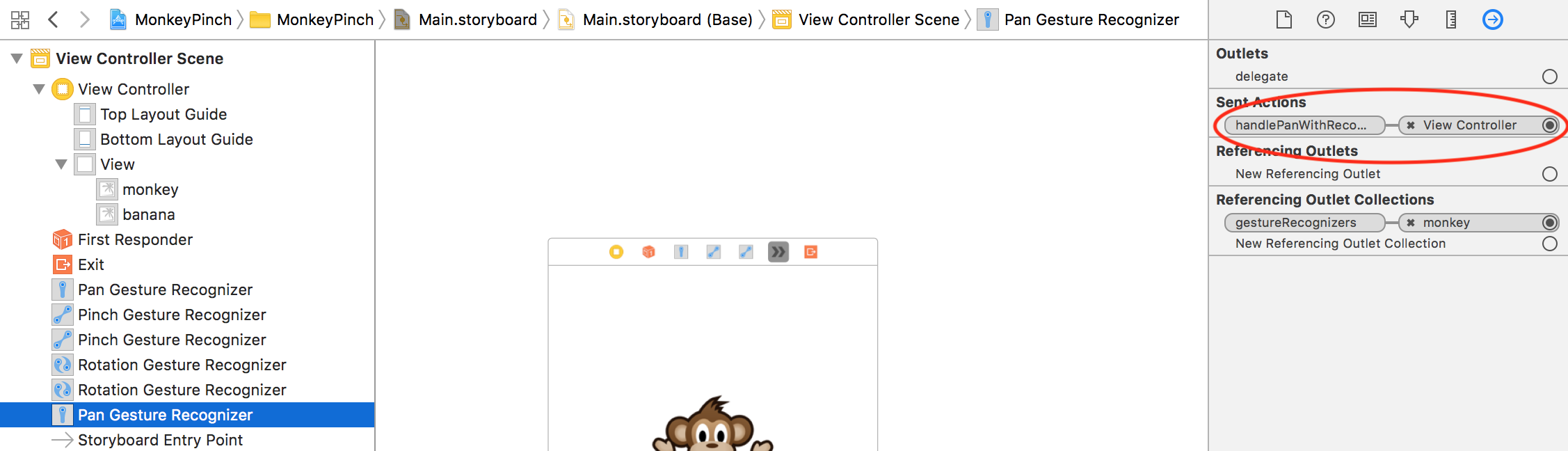

To do anything useful with a MapView, you need to do two things: (1) set an outlet to it, and (2) set its delegate.

Open ParkMapViewController in the Assistant Editor by holding down the Option key and left-clicking on ParkMapViewController.swift in the file hierarchy.

Then, control-drag from the map view to right above the first method like this:

![mapkit]()

In the popup that appears, name the outlet mapView, and click Connect.

To set the map view’s delegate, right-click on the map view object to open its context menu and then drag from the delegate outlet to Park Map View Controller like this:

![mapkit]()

You also need to make ParkMapViewController conform to MKMapViewDelegate.

First, add this import to the top of ParkMapViewController.swift:

import MapKit

Then, add this extension after the closing class curly brace:

extension ParkMapViewController: MKMapViewDelegate {

}

Build and run to check out your snazzy new map!

![mapkit]()

Wouldn’t it be cool if you could actually do something with the map? It’s time to add map interactions! :]

Interacting with the MapView

You’ll start by centering the map on the park. Inside the app’s Park Information folder, you’ll find a file named MagicMountain.plist. Open this file, and you’ll see it contains a coordinate for the park midpoint and boundary information.

You’ll now create a model for this plist to make it easy to use in the app.

Right-click on the Models group in the file navigation, and choose New File… Select the iOS\Source\Swift File template and name it Park.swift. Replace its contents with this:

import UIKit

import MapKit

class Park {

var name: String?

var boundary: [CLLocationCoordinate2D] = []

var midCoordinate = CLLocationCoordinate2D()

var overlayTopLeftCoordinate = CLLocationCoordinate2D()

var overlayTopRightCoordinate = CLLocationCoordinate2D()

var overlayBottomLeftCoordinate = CLLocationCoordinate2D()

var overlayBottomRightCoordinate = CLLocationCoordinate2D()

var overlayBoundingMapRect: MKMapRect?

}

You also need to be able to set the Park’s values to what’s defined in the plist.

First, add this convenience method to deserialize the property list:

class func plist(_ plist: String) -> Any? {

let filePath = Bundle.main.path(forResource: plist, ofType: "plist")!

let data = FileManager.default.contents(atPath: filePath)!

return try! PropertyListSerialization.propertyList(from: data, options: [], format: nil)

}

Next, add this method to parse a CLLocationCoordinate2D given a fieldName and dictionary:

static func parseCoord(dict: [String: Any], fieldName: String) -> CLLocationCoordinate2D {

guard let coord = dict[fieldName] as? String else {

return CLLocationCoordinate2D()

}

let point = CGPointFromString(coord)

return CLLocationCoordinate2DMake(CLLocationDegrees(point.x), CLLocationDegrees(point.y))

}

MapKit’s APIs use CLLocationCoordinate2D to represent geographic locations.
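To see what that parsing amounts to, here is a minimal sketch that does the same job without UIKit. It assumes the plist stores each coordinate as a "{latitude,longitude}" string (the format CGPointFromString accepts). The helper name coordinate(from:) and the sample values are hypothetical and not part of the starter project.

```swift
import CoreLocation

/// Hypothetical helper: parses "{latitude,longitude}" into a coordinate.
/// Returns nil when the string is malformed or the values are out of range.
func coordinate(from string: String) -> CLLocationCoordinate2D? {
    // Drop the surrounding braces and any padding spaces.
    let trimmed = string.trimmingCharacters(in: CharacterSet(charactersIn: "{} "))
    // Split on the comma and try to convert each half to a Double.
    let parts = trimmed.split(separator: ",").map {
        Double($0.trimmingCharacters(in: .whitespaces))
    }
    guard parts.count == 2, let latitude = parts[0], let longitude = parts[1] else {
        return nil
    }
    let result = CLLocationCoordinate2D(latitude: latitude, longitude: longitude)
    // Reject latitudes outside ±90 and longitudes outside ±180.
    return CLLocationCoordinate2DIsValid(result) ? result : nil
}

// Sample value, made up for illustration.
let sample = coordinate(from: "{34.4248,-118.5971}")
```

Unlike parseCoord(dict:fieldName:), which falls back to an empty coordinate, this sketch returns nil on bad input, so the caller has to decide what to do with a broken entry.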

You’re now finally ready to create an initializer for this class:

init(filename: String) {

guard let properties = Park.plist(filename) as? [String : Any],

let boundaryPoints = properties["boundary"] as? [String] else { return }

midCoordinate = Park.parseCoord(dict: properties, fieldName: "midCoord")

overlayTopLeftCoordinate = Park.parseCoord(dict: properties, fieldName: "overlayTopLeftCoord")

overlayTopRightCoordinate = Park.parseCoord(dict: properties, fieldName: "overlayTopRightCoord")

overlayBottomLeftCoordinate = Park.parseCoord(dict: properties, fieldName: "overlayBottomLeftCoord")

let cgPoints = boundaryPoints.map { CGPointFromString($0) }

boundary = cgPoints.map { CLLocationCoordinate2DMake(CLLocationDegrees($0.x), CLLocationDegrees($0.y)) }

}

First, the park’s coordinates are extracted from the plist file and assigned to properties. Then the boundary array is set, which you’ll use later to display the park outline.

You may be wondering, “Why wasn’t overlayBottomRightCoordinate set from the plist?” This isn’t provided in the plist because you can easily calculate it from the other three points.

Replace the current overlayBottomRightCoordinate with this computed property:

var overlayBottomRightCoordinate: CLLocationCoordinate2D {

get {

return CLLocationCoordinate2DMake(overlayBottomLeftCoordinate.latitude,

overlayTopRightCoordinate.longitude)

}

}

Finally, you need a method to create a bounding box based on the overlay coordinates.

Replace the definition of overlayBoundingMapRect with this:

var overlayBoundingMapRect: MKMapRect {

get {

let topLeft = MKMapPointForCoordinate(overlayTopLeftCoordinate)

let topRight = MKMapPointForCoordinate(overlayTopRightCoordinate)

let bottomLeft = MKMapPointForCoordinate(overlayBottomLeftCoordinate)

return MKMapRectMake(

topLeft.x,

topLeft.y,

fabs(topLeft.x - topRight.x),

fabs(topLeft.y - bottomLeft.y))

}

}

This getter generates an MKMapRect for the park’s bounds. It’s simply a rectangle, expressed in map points, that starts at the overlay’s top left corner and is as wide and as tall as the overlay.
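The arithmetic is easier to follow with plain numbers. The sketch below repeats the same width and height calculation on three made-up map points. The function name boundingRect(topLeft:topRight:bottomLeft:) and the values are assumptions for illustration only.

```swift
import MapKit

/// Hypothetical helper: builds a map rect from three corners given as map points.
func boundingRect(topLeft: MKMapPoint,
                  topRight: MKMapPoint,
                  bottomLeft: MKMapPoint) -> MKMapRect {
    // Width is the horizontal distance between the two top corners;
    // height is the vertical distance between the two left corners.
    let size = MKMapSize(width: abs(topRight.x - topLeft.x),
                         height: abs(bottomLeft.y - topLeft.y))
    // The rect's origin is its top left corner.
    return MKMapRect(origin: topLeft, size: size)
}

// Arbitrary map points, chosen so the result is easy to check by eye.
let exampleRect = boundingRect(topLeft: MKMapPoint(x: 100, y: 200),
                               topRight: MKMapPoint(x: 160, y: 200),
                               bottomLeft: MKMapPoint(x: 100, y: 240))
// exampleRect has origin (100, 200), width 60 and height 40.
```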

Now it’s time to put this class to use. Open ParkMapViewController.swift and add the following property to it:

var park = Park(filename: "MagicMountain")

Then, replace viewDidLoad() with this:

override func viewDidLoad() {

super.viewDidLoad()

let latDelta = park.overlayTopLeftCoordinate.latitude -

park.overlayBottomRightCoordinate.latitude

// Think of a span as a tv size, measure from one corner to another

let span = MKCoordinateSpanMake(fabs(latDelta), 0.0)

let region = MKCoordinateRegionMake(park.midCoordinate, span)

mapView.region = region

}

This creates a latitude delta, which is the distance from the park’s top left coordinate to the park’s bottom right coordinate. You use it to generate an MKCoordinateSpan, which defines the area spanned by a map region. You then use MKCoordinateSpan along with the park’s midCoordinate to create an MKCoordinateRegion, which positions the park on the map view.
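As a variation, here is a sketch that fills in both deltas of the span instead of only the latitude. The helper name makeRegion(center:topLeft:bottomRight:) and the coordinates are hypothetical. Keep in mind that the map view may still adjust whatever region you hand it so that it fits the visible area.

```swift
import MapKit

/// Hypothetical helper: builds a region from a center and two opposite corners.
func makeRegion(center: CLLocationCoordinate2D,
                topLeft: CLLocationCoordinate2D,
                bottomRight: CLLocationCoordinate2D) -> MKCoordinateRegion {
    // Each delta is the absolute difference between the two corners, in degrees.
    let span = MKCoordinateSpan(
        latitudeDelta: abs(topLeft.latitude - bottomRight.latitude),
        longitudeDelta: abs(bottomRight.longitude - topLeft.longitude))
    return MKCoordinateRegion(center: center, span: span)
}

// Made-up coordinates for illustration.
let parkRegion = makeRegion(
    center: CLLocationCoordinate2D(latitude: 34.425, longitude: -118.60),
    topLeft: CLLocationCoordinate2D(latitude: 34.43, longitude: -118.61),
    bottomRight: CLLocationCoordinate2D(latitude: 34.42, longitude: -118.59))
```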

Build and run your app, and you’ll see the map is now centered on Six Flags Magic Mountain! :]

![mapkit]()

Okay! You’ve centered the map on the park, which is nice, but it’s not terribly exciting. Let’s spice things up by switching the map type to satellite!

Switching The Map Type

In ParkMapViewController.swift, you’ll notice this method:

@IBAction func mapTypeChanged(_ sender: UISegmentedControl) {

// TODO

}

Hmm, that’s a pretty ominous-sounding comment in there! :]

Fortunately, the starter project has much of what you’ll need to flesh out this method. Did you note the segmented control sitting above the map view that seems to be doing a whole lot of nothing?

That segmented control is actually calling mapTypeChanged(_:), but as you can see above, this method does nothing — yet!

Add the following implementation to mapTypeChanged(_:):

mapView.mapType = MKMapType.init(rawValue: UInt(sender.selectedSegmentIndex)) ?? .standard

Believe it or not, adding standard, satellite, and hybrid map types to your app is as simple as the code above! Wasn’t that easy?
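The one-liner works because the raw values of MKMapType start at 0 for standard, 1 for satellite and 2 for hybrid. If you would rather spell the mapping out, a sketch like the following does it explicitly. It assumes the segments appear in the order Standard, Satellite, Hybrid, and the function name mapType(forSegment:) is hypothetical.

```swift
import MapKit

/// Hypothetical helper: maps a segment index to a map type.
/// Assumes the segment order is Standard, Satellite, Hybrid.
func mapType(forSegment index: Int) -> MKMapType {
    switch index {
    case 1:
        return .satellite
    case 2:
        return .hybrid
    default:
        // Index 0, plus anything unexpected, falls back to the standard map.
        return .standard
    }
}
```

Inside mapTypeChanged(_:), you could then write mapView.mapType = mapType(forSegment: sender.selectedSegmentIndex).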

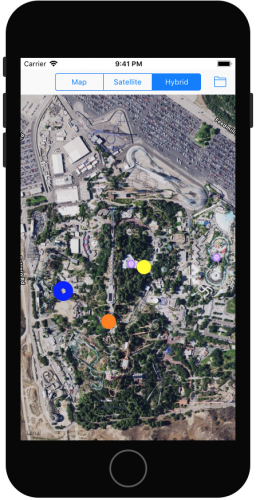

Build and run, and try out the segmented control to change the map type!

![mapkit]()

Even though the satellite view is much better than the standard map view, it’s still not very useful to your park visitors. There’s nothing labeled, so how will your users find anything in the park?

One obvious way is to drop a UIView on top of the map view, but you can take it a step further and instead leverage the magic of MKOverlayRenderer to do a lot of the work for you!

All About Overlay Views

Before you start creating your own overlay views, you need to understand two key classes: MKOverlay and MKOverlayRenderer.

MKOverlay tells MapKit where you want the overlays drawn. There are three steps to using the protocol:

- Create your own custom class that implements the MKOverlay protocol, which has two required properties: coordinate and boundingMapRect. These properties define where the overlay resides on the map and the overlay’s size.

- Create an instance of your class for each area that you want to display an overlay. In this app, for example, you might create an instance for a rollercoaster overlay and another for a restaurant overlay.

- Finally, add the overlays to your Map View.

Now the Map View knows where it’s supposed to display overlays, but how does it know what to display in each region?

Enter MKOverlayRenderer. You subclass this to set up what you want to display in each spot. In this app, for example, you’ll draw an image of the rollercoaster or restaurant.

An MKOverlayRenderer isn’t a view: it inherits from NSObject, and MapKit asks it to draw the overlay’s content into a graphics context. You don’t add an MKOverlayRenderer directly to an MKMapView. Instead, MapKit requests one from the map view’s delegate whenever it needs to draw an overlay.

Remember the map view delegate you set earlier? There’s a delegate method that allows you to return an overlay view:

func mapView(_ mapView: MKMapView, rendererFor overlay: MKOverlay) -> MKOverlayRenderer

MapKit will call this method when it realizes there is an MKOverlay object in the region that the map view is displaying.

To sum everything up, you don’t add MKOverlayRenderer objects directly to the map view; rather, you tell the map about MKOverlay objects to display and return the matching renderers when the delegate method requests them.

Now that you’ve covered the theory, it’s time to put these concepts to use!

Adding Your Own Information

As you saw earlier, the satellite view still doesn’t provide enough information about the park. Your task is to create an object that represents an overlay for the entire park.

Select the Overlays group and create a new Swift file named ParkMapOverlay.swift. Replace its contents with this:

import UIKit

import MapKit

class ParkMapOverlay: NSObject, MKOverlay {

var coordinate: CLLocationCoordinate2D

var boundingMapRect: MKMapRect

init(park: Park) {

boundingMapRect = park.overlayBoundingMapRect

coordinate = park.midCoordinate

}

}

Conforming to MKOverlay means you also have to inherit from NSObject. Finally, the initializer simply takes the properties from the passed Park object, and sets them to the corresponding MKOverlay properties.

Now you need to create a renderer class derived from the MKOverlayRenderer class.

Create a new Swift file in the Overlays group called ParkMapOverlayView.swift. Replace its contents with this:

import UIKit

import MapKit

class ParkMapOverlayView: MKOverlayRenderer {

var overlayImage: UIImage

init(overlay:MKOverlay, overlayImage:UIImage) {

self.overlayImage = overlayImage

super.init(overlay: overlay)

}

override func draw(_ mapRect: MKMapRect, zoomScale: MKZoomScale, in context: CGContext) {

guard let imageReference = overlayImage.cgImage else { return }

let rect = self.rect(for: overlay.boundingMapRect)

context.scaleBy(x: 1.0, y: -1.0)

context.translateBy(x: 0.0, y: -rect.size.height)

context.draw(imageReference, in: rect)

}

}

init(overlay:overlayImage:) extends the base initializer init(overlay:) with a second argument: it stores the image and then calls the base initializer.

draw is the real meat of this class. It defines how MapKit should render this view when given a specific MKMapRect, MKZoomScale, and the CGContext of the graphic context, with the intent to draw the overlay image onto the context at the appropriate scale.

Details on Core Graphics drawing are well outside the scope of this tutorial. However, you can see that the code above converts the overlay’s boundingMapRect to a CGRect, in order to determine where to draw the CGImage of the UIImage on the provided context. If you want to learn more about Core Graphics, check out our Core Graphics tutorial series.
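One detail worth a closer look is the pair of transform calls before context.draw. Core Graphics draws a CGImage as if the y axis pointed up, so without a flip the image would most likely appear upside down here. The sketch below rebuilds the same scale-then-translate combination as a standalone CGAffineTransform and applies it to two points. The height of 100 is a made-up value.

```swift
import CoreGraphics

// Stand-in for rect.size.height in draw(_:zoomScale:in:); the value is arbitrary.
let height: CGFloat = 100

// Same order as the renderer: scale y by -1 first, then translate by -height.
let flip = CGAffineTransform(scaleX: 1.0, y: -1.0)
    .translatedBy(x: 0.0, y: -height)

// A point on the top edge ends up on the bottom edge, and vice versa.
let top = CGPoint(x: 10, y: 0).applying(flip)         // (10, 100)
let bottom = CGPoint(x: 10, y: height).applying(flip) // (10, 0)
```

The x values are untouched, so the image is mirrored vertically within the same rectangle rather than moved.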

Great! Now that you have both an MKOverlay and MKOverlayRenderer, you can add them to your map view.

In ParkMapViewController.swift, add the following method to the class:

func addOverlay() {

let overlay = ParkMapOverlay(park: park)

mapView.add(overlay)

}

This method will add an MKOverlay to the map view.

If the user should choose to show the map overlay, then loadSelectedOptions() should call addOverlay(). Replace loadSelectedOptions() with the following code:

func loadSelectedOptions() {

mapView.removeAnnotations(mapView.annotations)

mapView.removeOverlays(mapView.overlays)

for option in selectedOptions {

switch option {

case .mapOverlay:

addOverlay()

default:

break

}

}

}

Whenever the user dismisses the options selection view, the app calls loadSelectedOptions(), which then determines the selected options, and calls the appropriate methods to render those selections on the map view.

loadSelectedOptions() also removes any annotations and overlays that may be present so that you don’t end up with duplicate renderings. This is not necessarily efficient, but it is a simple approach to clear previous items from the map.

To implement the delegate method, add the following method to the MKMapViewDelegate extension at the bottom of the file:

func mapView(_ mapView: MKMapView, rendererFor overlay: MKOverlay) -> MKOverlayRenderer {

if overlay is ParkMapOverlay {

return ParkMapOverlayView(overlay: overlay, overlayImage: #imageLiteral(resourceName: "overlay_park"))

}

return MKOverlayRenderer()

}

When MapKit determines that an MKOverlay is in view, the map view calls the above method on its delegate.

Here, you check to see if the overlay is of the class type ParkMapOverlay. If so, you load the overlay image, create a ParkMapOverlayView instance with the overlay image, and return this instance to the caller.

There’s one little piece missing, though – where does that suspicious little overlay_park image come from?

That’s a PNG file whose purpose is to overlay the map view for the defined boundary of the park. The overlay_park image (found in the image assets) looks like this:

![overlay_park mapkit]()

Build and run, choose the Map Overlay option, and voila! There’s the park overlay drawn on top of your map:

![mapkit]()

Zoom in, zoom out, and move around as much as you want — the overlay scales and moves as you would expect. Cool!

Annotations

If you’ve ever searched for a location in the Maps app, then you’ve seen those colored pins that appear on the map. These are known as annotations, which are created with MKAnnotationView. You can use annotations in your own app — and you can use any image you want, not just pins!

Annotations will be useful in your app to help point out specific attractions to the park visitors. Annotation objects work similarly to MKOverlay and MKOverlayRenderer, but instead you will be working with MKAnnotation and MKAnnotationView.

Create a new Swift file in the Annotations group called AttractionAnnotation.swift. Replace its contents with this:

import UIKit

import MapKit

enum AttractionType: Int {

case misc = 0

case ride

case food

case firstAid

func image() -> UIImage {

switch self {

case .misc:

return #imageLiteral(resourceName: "star")

case .ride:

return #imageLiteral(resourceName: "ride")

case .food:

return #imageLiteral(resourceName: "food")

case .firstAid:

return #imageLiteral(resourceName: "firstaid")

}

}

}

class AttractionAnnotation: NSObject, MKAnnotation {

var coordinate: CLLocationCoordinate2D

var title: String?

var subtitle: String?

var type: AttractionType

init(coordinate: CLLocationCoordinate2D, title: String, subtitle: String, type: AttractionType) {

self.coordinate = coordinate

self.title = title

self.subtitle = subtitle

self.type = type

}

}

Here you first define an enum for AttractionType to help you categorize each attraction into a type. This enum lists four types of annotations: misc, ride, food and first aid. It also has a handy function to grab the correct annotation image.

Next you declare that this class conforms to the MKAnnotation protocol. Much like MKOverlay, MKAnnotation has a required coordinate property. You define a handful of properties specific to this implementation. Lastly, you define an initializer that allows you to assign values to each of the properties.

Now you need to create a specific subclass of MKAnnotationView to use for your annotations.

Create another Swift file called AttractionAnnotationView.swift under the Annotations group. Replace its contents with the following:

import UIKit

import MapKit

class AttractionAnnotationView: MKAnnotationView {

// Required for MKAnnotationView

required init?(coder aDecoder: NSCoder) {

super.init(coder: aDecoder)

}

override init(annotation: MKAnnotation?, reuseIdentifier: String?) {

super.init(annotation: annotation, reuseIdentifier: reuseIdentifier)

guard let attractionAnnotation = self.annotation as? AttractionAnnotation else { return }

image = attractionAnnotation.type.image()

}

}

MKAnnotationView requires the init(coder:) initializer. Without its definition, an error will prevent you from building and running the app. To prevent this, simply define it and call its superclass initializer. Here, you also override init(annotation:reuseIdentifier:). Based on the annotation’s type property, you set a different image on the image property of the annotation view.

Now having created the annotation and its associated view, you can start adding them to your map view!

To determine the location of each annotation, you’ll use the info in the MagicMountainAttractions.plist file, which you can find under the Park Information group. The plist file contains coordinate information and other details about the attractions at the park.

Go back to ParkMapViewController.swift and insert the following method:

func addAttractionPins() {

guard let attractions = Park.plist("MagicMountainAttractions") as? [[String : String]] else { return }

for attraction in attractions {

let coordinate = Park.parseCoord(dict: attraction, fieldName: "location")

let title = attraction["name"] ?? ""

let typeRawValue = Int(attraction["type"] ?? "0") ?? 0

let type = AttractionType(rawValue: typeRawValue) ?? .misc

let subtitle = attraction["subtitle"] ?? ""

let annotation = AttractionAnnotation(coordinate: coordinate, title: title, subtitle: subtitle, type: type)

mapView.addAnnotation(annotation)

}

}

This method reads MagicMountainAttractions.plist and enumerates over the array of dictionaries. For each entry, it creates an instance of AttractionAnnotation with the attraction’s information, and then adds each annotation to the map view.
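The type lookup in that loop has three ways to fall back to .misc: the key can be missing, the string might not be a number, or the number might not match any case. The sketch below isolates that logic so you can try it on its own. The enum is a trimmed copy of AttractionType without image(), and the function name attractionType(from:) is hypothetical.

```swift
// Trimmed copy of the tutorial's enum, without the image() helper.
enum AttractionType: Int {
    case misc = 0
    case ride
    case food
    case firstAid
}

/// Hypothetical helper: reads the "type" field from one plist entry.
/// Falls back to .misc if the field is missing, not numeric, or out of range.
func attractionType(from entry: [String: String]) -> AttractionType {
    guard let raw = entry["type"],
          let value = Int(raw),
          let type = AttractionType(rawValue: value) else {
        return .misc
    }
    return type
}

let food = attractionType(from: ["name": "Snack Stand", "type": "2"]) // .food
let unknown = attractionType(from: ["name": "Mystery", "type": "9"])  // .misc
```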

Now you need to update loadSelectedOptions() to accommodate this new option and execute your new method when the user selects it.

Update the switch statement in loadSelectedOptions() to include the following:

case .mapPins:

addAttractionPins()

This calls your new addAttractionPins() method when required. Note that the call to removeAnnotations(_:) at the top of loadSelectedOptions() also clears the pins when the user turns the option off.

You’re almost there! Last but not least, you need to implement another delegate method that provides the MKAnnotationView instances to the map view so that it can render them on itself.

Add the following method to the MKMapViewDelegate class extension at the bottom of the file:

func mapView(_ mapView: MKMapView, viewFor annotation: MKAnnotation) -> MKAnnotationView? {

let annotationView = AttractionAnnotationView(annotation: annotation, reuseIdentifier: "Attraction")

annotationView.canShowCallout = true

return annotationView

}

This method receives the MKAnnotation that the map view is about to display and uses it to create the AttractionAnnotationView. Since the property canShowCallout is set to true, a call-out will appear when the user touches the annotation. Finally, the method returns the annotation view.

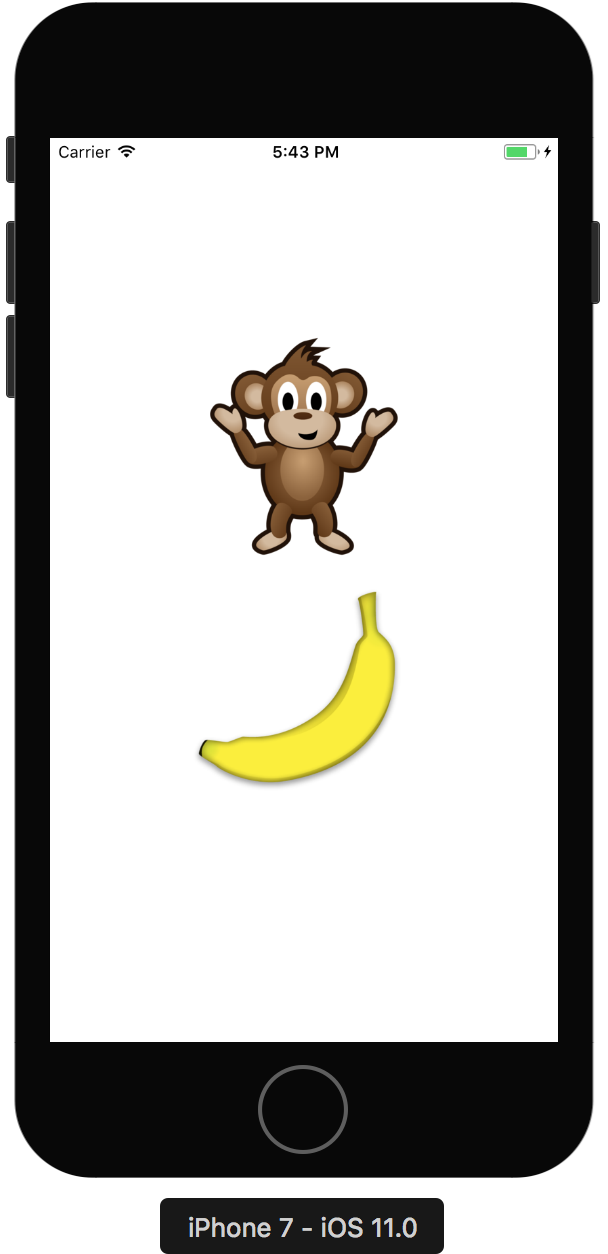

Build and run to see your annotations in action!

Turn on the Attraction Pins to see the result as in the screenshot below:

![mapkit]()

The Attraction pins are looking rather “sharp” at this point! :]

So far you’ve covered a lot of complicated bits of MapKit, including overlays and annotations. But what if you need to use some drawing primitives, like lines, shapes, and circles?

The MapKit framework also gives you the ability to draw directly on a map view. MapKit provides MKPolyline, MKPolygon, and MKCircle for just this purpose.

I Walk The Line – MKPolyline

If you’ve ever been to Magic Mountain, you know that the Goliath hypercoaster is an incredible ride, and some riders like to make a beeline for it once they walk in the gate! :]

To help out these riders, you’ll plot a path from the entrance of the park to the Goliath.

MKPolyline is a great solution for drawing a path that connects multiple points, such as plotting a non-linear route from point A to point B.

To draw a polyline, you need a series of longitude and latitude coordinates in the order that the code should plot them.

The EntranceToGoliathRoute.plist (again found in the Park Information folder) contains the path information.

You need a way to read in that plist file and create the route for the riders to follow.

Open ParkMapViewController.swift and add the following method to the class:

func addRoute() {

guard let points = Park.plist("EntranceToGoliathRoute") as? [String] else { return }

let cgPoints = points.map { CGPointFromString($0) }

let coords = cgPoints.map { CLLocationCoordinate2DMake(CLLocationDegrees($0.x), CLLocationDegrees($0.y)) }

let myPolyline = MKPolyline(coordinates: coords, count: coords.count)

mapView.add(myPolyline)

}

This method reads EntranceToGoliathRoute.plist, and converts the individual coordinate strings to CLLocationCoordinate2D structures.

It’s remarkable how simple it is to implement your polyline in your app; you simply create an array containing all of the points, and pass it to MKPolyline! It doesn’t get much easier than that.

Now you need to add an option to allow the user to turn the polyline path on or off.

Update loadSelectedOptions() to include another case statement:

case .mapRoute:

addRoute()

This calls the addRoute() method when required.

Finally, to tie it all together, you need to update the delegate method so that it returns the actual view you want to render on the map view.

Replace mapView(_:rendererFor:) with this:

func mapView(_ mapView: MKMapView, rendererFor overlay: MKOverlay) -> MKOverlayRenderer {

if overlay is ParkMapOverlay {

return ParkMapOverlayView(overlay: overlay, overlayImage: #imageLiteral(resourceName: "overlay_park"))

} else if overlay is MKPolyline {

let lineView = MKPolylineRenderer(overlay: overlay)

lineView.strokeColor = UIColor.green

return lineView

}

return MKOverlayRenderer()

}

The change here is the additional else if branch to look for MKPolyline objects. The process of displaying the polyline is very similar to previous overlays. However, in this case, you do not need to create any custom renderer objects. You simply use the MKPolylineRenderer that the framework provides, and initialize a new instance with the overlay.

MKPolylineRenderer also provides you with the ability to change certain attributes of the polyline. In this case, you’ve modified the stroke color to show as green.
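Stroke color is only one of the attributes you can adjust. As a rough sketch, the snippet below sets a few others that the renderer inherits from MKOverlayPathRenderer. The coordinates and the specific values are made up for illustration, and none of this is required for the tutorial.

```swift
import MapKit

// Two made-up points, just enough to build a polyline.
let sampleCoords = [
    CLLocationCoordinate2D(latitude: 34.42, longitude: -118.60),
    CLLocationCoordinate2D(latitude: 34.43, longitude: -118.59)
]
let sampleRoute = MKPolyline(coordinates: sampleCoords, count: sampleCoords.count)

let routeRenderer = MKPolylineRenderer(polyline: sampleRoute)
routeRenderer.lineWidth = 3.0           // stroke width in points
routeRenderer.lineDashPattern = [8, 4]  // 8 points drawn, 4 points skipped
routeRenderer.lineJoin = .round         // rounded corners where segments meet
```

In the delegate method, you would set these on lineView next to strokeColor before returning it.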

Build and run your app, enable the Route option, and it’ll appear on the screen:

![mapkit]()

Goliath fanatics will now be able to make it to the coaster in record time! :]

It would be nice to show the park patrons where the actual park boundaries are, as the park doesn’t actually occupy the entire space shown on the screen.

Although you could use MKPolyline to draw a shape around the park boundaries, MapKit provides another class that is specifically designed to draw closed polygons: MKPolygon.

Don’t Fence Me In – MKPolygon

MKPolygon is remarkably similar to MKPolyline, except that the first and last points in the set of coordinates are connected to each other to create a closed shape.

You’ll create an MKPolygon as an overlay that will show the park boundaries. The park boundary coordinates are already defined in MagicMountain.plist; go back and look at init(filename:) to see where the boundary points are read in from the plist file.

Add the following method to ParkMapViewController.swift:

func addBoundary() {

mapView.add(MKPolygon(coordinates: park.boundary, count: park.boundary.count))

}

The implementation of addBoundary() above is pretty straightforward. Given the boundary array and point count from the park instance, you can quickly and easily create a new MKPolygon instance!

Can you guess the next step here? It’s very similar to what you did for MKPolyline above.

Yep, that’s right — insert another case in the switch in loadSelectedOptions to handle the new option of showing or hiding the park boundary:

case .mapBoundary:

addBoundary()

MKPolygon conforms to MKOverlay just as MKPolyline does, so you need to update the delegate method again.

Update the delegate method in ParkMapViewController.swift as follows:

func mapView(_ mapView: MKMapView, rendererFor overlay: MKOverlay) -> MKOverlayRenderer {

if overlay is ParkMapOverlay {

return ParkMapOverlayView(overlay: overlay, overlayImage: #imageLiteral(resourceName: "overlay_park"))

} else if overlay is MKPolyline {

let lineView = MKPolylineRenderer(overlay: overlay)

lineView.strokeColor = UIColor.green

return lineView

} else if overlay is MKPolygon {

let polygonView = MKPolygonRenderer(overlay: overlay)

polygonView.strokeColor = UIColor.magenta

return polygonView

}

return MKOverlayRenderer()

}

The update to the delegate method is as straightforward as before. You create an MKOverlayRenderer as an instance of MKPolygonRenderer, and set the stroke color to magenta.

Run the app to see your new boundary in action:

![mapkit]()

That takes care of polylines and polygons. The last drawing method to cover is drawing circles as an overlay, which is neatly handled by MKCircle.

Circle In The Sand – MKCircle

MKCircle is again very similar to MKPolyline and MKPolygon, except that it draws a circle, given a coordinate point as the center of the circle, and a radius that determines the size of the circle.

It would be great to mark general locations where park characters are spotted. Draw some circles on the map to simulate the location of those characters!

The MKCircle overlay is a very easy way to implement this functionality.

The Park Information folder also contains the character location files. Each file is an array of a few coordinates where the user spotted characters.

Create a new Swift file under the Models group called Character.swift. Replace its contents with the following code:

import UIKit

import MapKit

class Character: MKCircle {

var name: String?

var color: UIColor?

convenience init(filename: String, color: UIColor) {

guard let points = Park.plist(filename) as? [String] else { self.init(); return }

let cgPoints = points.map { CGPointFromString($0) }

let coords = cgPoints.map { CLLocationCoordinate2DMake(CLLocationDegrees($0.x), CLLocationDegrees($0.y)) }

let randomCenter = coords[Int(arc4random()%4)]

let randomRadius = CLLocationDistance(max(5, Int(arc4random()%40)))

self.init(center: randomCenter, radius: randomRadius)

self.name = filename

self.color = color

}

}

The new class that you just added subclasses MKCircle and defines two optional properties: name and color. The convenience initializer accepts a plist filename and color to draw the circle. Then it reads in the data from the plist file and selects a random location from the four locations in the file. Next, it chooses a random radius to simulate the time variance. The MKCircle returned is set and ready to be put on the map!
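The initializer indexes the array with arc4random() % 4, which relies on each file holding at least four coordinates. If you can't guarantee that, a more defensive version of the random pick might look like the sketch below. It assumes Swift 4.2 or later for randomElement() and Int.random(in:), and the function name randomSighting(from:) is hypothetical.

```swift
import CoreLocation

/// Hypothetical helper: picks a random center and a radius of 5 to 39 meters.
/// Returns nil when there are no coordinates to choose from.
func randomSighting(from coordinates: [CLLocationCoordinate2D])
    -> (center: CLLocationCoordinate2D, radius: CLLocationDistance)? {
    // randomElement() returns nil for an empty array instead of crashing.
    guard let center = coordinates.randomElement() else { return nil }
    // Same range as max(5, arc4random() % 40), but evenly distributed.
    let radius = CLLocationDistance(Int.random(in: 5..<40))
    return (center, radius)
}
```

Character's initializer could call this and fall back to self.init() when it returns nil, in the same way it already does when the plist can't be read.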

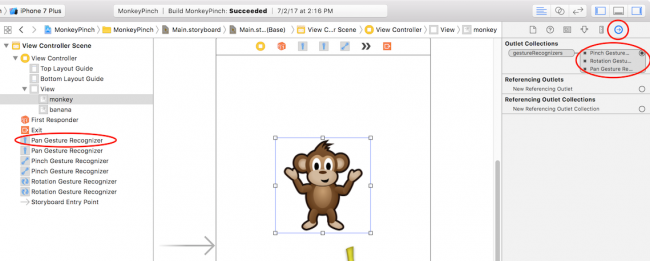

Now you need a method to add each character. Open ParkMapViewController.swift and add the following method to the class:

func addCharacterLocation() {

mapView.add(Character(filename: "BatmanLocations", color: .blue))

mapView.add(Character(filename: "TazLocations", color: .orange))

mapView.add(Character(filename: "TweetyBirdLocations", color: .yellow))

}

The method above performs pretty much the same operations for each character. It passes the plist filename for each one, decides on a color and adds it to the map as an overlay.

You’re almost done! Can you recall what the last few steps should be?

Right, you still need to provide the map view with an MKOverlayRenderer, which is done through the delegate method.

Update the delegate method in ParkMapViewController.swift with this:

func mapView(_ mapView: MKMapView, rendererFor overlay: MKOverlay) -> MKOverlayRenderer {

if overlay is ParkMapOverlay {

return ParkMapOverlayView(overlay: overlay, overlayImage: #imageLiteral(resourceName: "overlay_park"))

} else if overlay is MKPolyline {

let lineView = MKPolylineRenderer(overlay: overlay)

lineView.strokeColor = UIColor.green

return lineView

} else if overlay is MKPolygon {

let polygonView = MKPolygonRenderer(overlay: overlay)

polygonView.strokeColor = UIColor.magenta

return polygonView

} else if let character = overlay as? Character {

let circleView = MKCircleRenderer(overlay: character)

circleView.strokeColor = character.color

return circleView

}

return MKOverlayRenderer()

}

And finally, update loadSelectedOptions() to give the user an option to turn the character locations on or off:

case .mapCharacterLocation:

addCharacterLocation()

You can also remove the default: and break statements now since you’ve covered all the possible cases.

Build and run the app, and turn on the character overlay to see where everyone is hiding out!

![mapkit]()

Where to Go From Here?

Congratulations! You’ve worked with some of the most important functionality that MapKit provides. With a few basic functions, you’ve implemented a full-blown and practical mapping application complete with annotations, satellite view, and custom overlays!

Here’s the final example project that you developed in the tutorial.

There are many different ways to generate overlays, ranging from the very easy to the very complex. The approach taken for the overlay_park image in this tutorial was the easy, yet tedious, route.

There are much more advanced — and perhaps more efficient — methods to create overlays. A few alternate methods are to use KML files, MapBox tiles, or other 3rd party provided resources.

I hope you enjoyed this tutorial, and I hope to see you use MapKit overlays in your own apps. If you have any questions or comments, please join the forum discussion below!

The post MapKit Tutorial: Overlay Views appeared first on Ray Wenderlich.
