![Learn some intermediate Xcode debugging techniques!]()

Update note: This tutorial has been updated to Xcode 8 and Swift 3 by George Andrews. The original tutorial was written by Brian Moakley.

The one constant in software development is bugs. Let’s face it, no one gets it right the first time. From fat fingers to incorrect assumptions, software development is like baking cakes in a roach motel – except that developers supply the critters!

Luckily, Xcode gives you a myriad of tools to keep the nasties at bay. There’s obviously the debugger you know and love, but there’s a lot more it can do for you than simply examine variables and step over code!

This is a tutorial for intermediate iOS developers, where you’ll get hands-on experience with some of the lesser-known but extremely useful debugging techniques, such as:

- Getting rid of NSLog in favor of breakpoint logging

- Using a build script to produce compiler warnings for comment TODOs and FIXMEs

- Breaking on conditions with expressions

- Dynamically modifying data with LLDB

- And much more!

My own goal is to become a truly lazy developer. I’d rather do the heavy work up front so I can relax on the backend. Thankfully, Xcode values my martini time. It provides great tools so I don’t have to be glued to my computer all day and all night.

Let’s take a look at these tools. Pull up a bean bag chair. Crack open your favorite beverage. It is time to get lazy! :]

Note that this tutorial assumes you already know the basics about the Xcode debugger. If you are completely new to debugging with Xcode, check out this beginner debugging tutorial first.

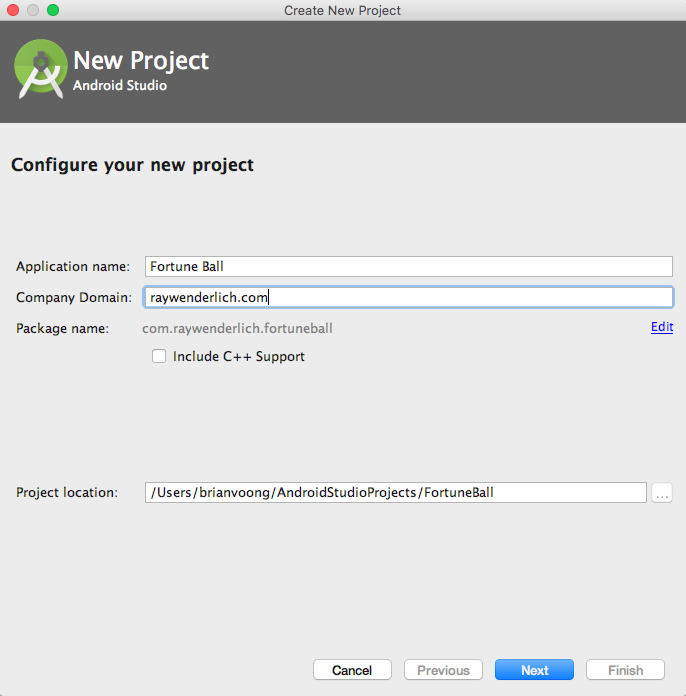

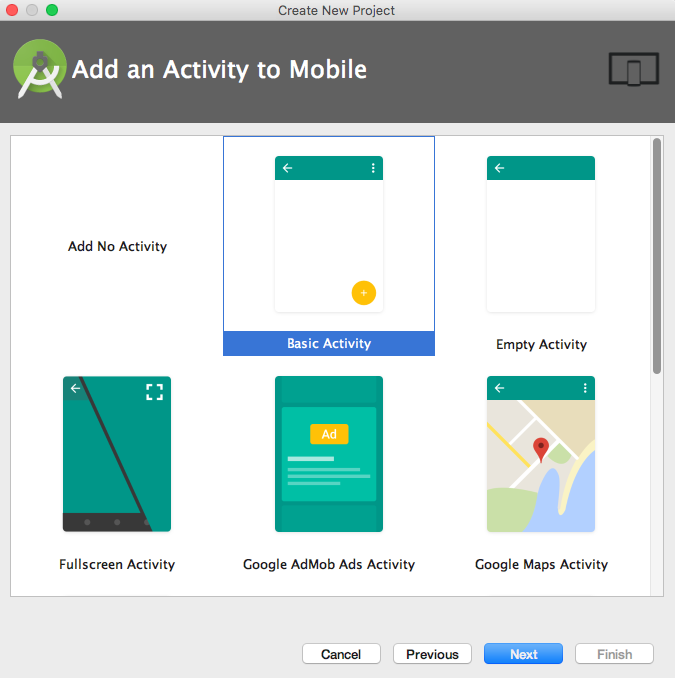

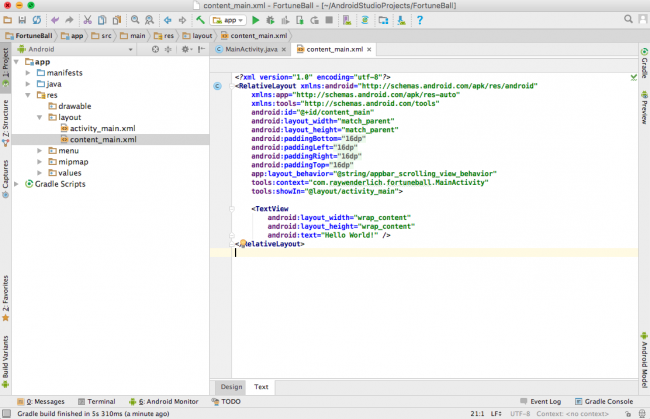

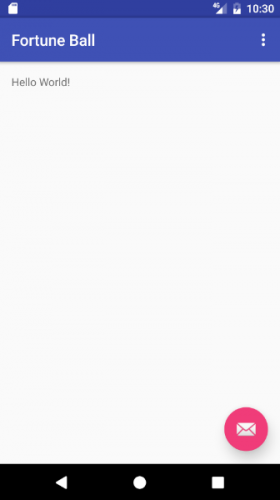

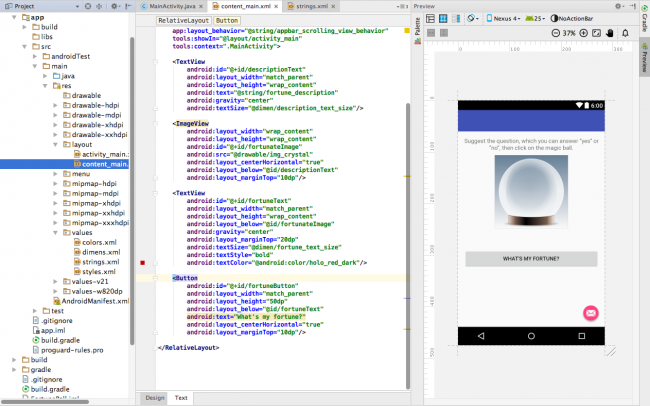

Getting Started

I put together a sample app for this project. You can download it here.

The app is called Gift Lister, and tracks gifts you might want to buy for people. It’s like Gifts 2 HD which was awarded Most Visually Impressive Reader’s App by this site way back in 2012. Gift Lister is like Gifts 2 HD… but far, far worse.

For one thing, it’s filled with bugs. The developer (myself in a different shirt) was ambitious and tried to fix the app the old fashioned way…and yes, it’s still broken :]

This tutorial will walk you through fixing the app while being as lazy as possible.

Okay, it’s time to get started — but don’t feel like you have to rush. :]

Open up the project and take a look around the various files. You’ll notice the app is a simple front end to a basic Core Data persistent store.

Note: If you don’t know Core Data, don’t worry! Core Data is an object persistence framework which is a whole tutorial to itself. In this tutorial, you will not dive into the framework, nor will you interact with Core Data objects in any meaningful way, so you don’t need to know much about it. Just keep in mind that Core Data loads objects and saves them so you don’t have to.

Now that you’ve taken a look around, you can set up the debugger.

Setting up the Debugger Console

The first thing to do whenever you start a debugging session is to open the debugging console. Open it by clicking this button on the main toolbar:

![Debug Console Button]()

While the button is nice and convenient, clicking it for every debug session will provide unnecessary wear and tear on your fingertip. :] Wouldn’t you prefer that Xcode do it for you?

To do so, open the Xcode preferences by pressing ⌘, or by going to the application menu and selecting Xcode\Preferences. Click the Behaviors button (the button with the gear over it).

![Behaviors Dialog]()

Click the Running\Starts item on the left-hand side of the dialog. A set of options appears on the right-hand side. There, click the seventh checkbox and then select Variables & Console View in the last dropdown.

Do this for the Pauses and the Generates Output items, which are located just underneath the Starts item.

The Variables & Console View option tells the debugger to show the list of local variables, as well as the console output, each time a debugger session starts. If you wanted to view just the console output, you would select Console View. Likewise, if you wanted to see just the variables, you would select Variables View.

The Current Views option defaults to the last debugger view on your last debugger session. For example, if you closed Variables and opted to view just the console, then only the console would open the next time the debugger was started.

Close the dialog, then build and run.

The debugger will now open each time you build and run your app – without having to go through the major bother of clicking that button. Although it only takes a second to do that, it adds up to minutes per week. And after all, you’re trying to be lazy! :]

The NSLog Jam

Before continuing, it’s important to review the definition of a breakpoint.

A breakpoint is a point in a program at which you can perform actions on the running program. Sometimes, the program may pause at the designated point to allow you to inspect the program’s state and/or step through the code.

You can also run code, change variables, and even have the computer quote Shakespeare. You will be doing all these things later in the tutorial.

Note: This tutorial will be covering some of the advanced uses of breakpoints. If you are still wrapping your head around some of its basic uses such as stepping-in, stepping-out, and stepping-over, please read over the My App Crashed, Now What? tutorial.

Build and run the app. Then, try to add a new Friend to track gifts for. Not surprisingly, the app crashes when you try to add a new Friend. Let’s fix it up.

This is the result of your first attempt at running this app:

![Stack Trace Goes Boom]()

Can you feel the hours ticking away?

This project needs a little sanity. Currently, you can’t see the source of the crash. To find it, you need to add an exception breakpoint to track down the source of the error.

Switch to the breakpoint navigator as shown below:

![Breakpoint Navigator]()

Then, click the plus sign at the bottom of the pane. From the menu, select Exception Breakpoint… .

![Add Exception Breakpoint]()

You should now see this dialog:

![Exception Breakpoint]()

The Exception field gives you the option of activating the breakpoint in Objective-C, C++, or All. Keep the default option of All.

The Break field in the dropdown allows you to pause execution depending on whether an error is thrown or caught. If you’re actually making use of exception handling in your code, you might select On Catch. For the purposes of this tutorial, leave it at On Throw.

You’ll cover the final two fields later in this tutorial. Click away to dismiss the dialog, then build and run.

This time the result is cleaner:

![]()

Take a look at the debugger console — it’s filled with log messages, and a lot of them appear unnecessary.

Logging is critical to debugging code. Log messages need to be actively pruned, or else the console will become littered with noise. Sifting through all that noise takes away from time on the golf course, so it’s important to remove it, otherwise you’ll waste more time on a problem than it deserves.

Open AppDelegate.swift and you should see a bunch of old messages in didFinishLaunchingWithOptions. Select them all and delete them.

Time to find the next set of log statements. Open up the search navigator, and look for in viewDidLoad.

![Search Dialog]()

Click the search results and FriendSelectionViewController.swift will open to the line with the log statement.

Notice that this time the code uses print to create the log statement instead of NSLog. Generally, in Swift, you will use print to write to standard output, although you can use NSLog when needed.

NSLog is worth reaching for when it’s critical that the message appears in the system log, or when you’re logging from multiple threads and don’t want to synchronize the output yourself. Either approach can be used to display a message to the Console in a debug session.

At this point, the effort you’re putting into managing your log statements is starting to accumulate. It may not seem like a lot, but every minute adds up. By the end of a project cycle, those stray minutes can easily equate to hours.

Another disadvantage to hard-coding log statements is that each time you add one to the code base, you take a risk of injecting new bugs into your code. All it takes is a few keystrokes, a little autocomplete, then a small distraction – and your once-working app now has a bug.

It’s time to move those log statements out of the code to where they belong: breakpoints.

First, comment out both of the print statements. Next, add a breakpoint by left-clicking in the gutter beside each of the statements.

Your code window should look like this:

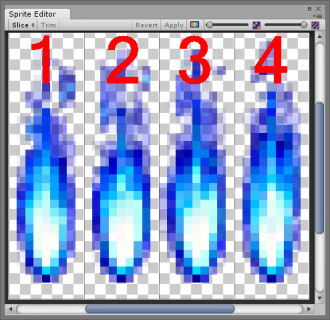

![Logging Breakpoints]()

Control-click or right-click the first breakpoint and select Edit Breakpoint. From the dialog, click Add Action, then select Log Message from the Action dropdown. In the text field, type in viewDidLoad. The dialog should now look like the following:

![viewDidLoad Breakpoint Dialog]()

Click away to dismiss the dialog, then build and run. You should now see in viewDidLoad in the console – but now it’s done with breakpoints instead of NSLog statements!

Note: Throughout this tutorial, you will be clicking build and run after each breakpoint modification, as this is easier to explain. The key point to remember: breakpoints are a runtime addition. You can add as many of them as you want during the execution of your program. This includes NSLog statements.

There is one major problem, though: the program stops at that breakpoint when you want it to continue, but changing that behavior is simple.

Control-click or right-click the breakpoint and select Edit Breakpoint. At the bottom of the dialog, click the Automatically continue after evaluating checkbox.

Now build and run again. This time it correctly logs the message…but it pauses on the second breakpoint.

Control-click or right-click the second breakpoint and select Edit Breakpoint. Click Add Action, then select Log Message in the action dropdown, then type Loading friends…. At the bottom of the dialog, click the Automatically continue after evaluating checkbox.

Now build and run again. The app works great… until you try to add a Friend again and it crashes. You can’t have everything, it seems. :]

Believe it or not, you’re still doing too much work. Control-click or right-click the first breakpoint, select Edit Breakpoint, and replace in viewDidLoad with %B. Now run the app again. The console should look like this:

![Console Log]()

%B prints out the name of the breakpoint, which for a breakpoint like this one is the containing method. You can also use %H to print out the number of times the breakpoint has been hit. Simple expressions can also be included by wrapping them in @ signs.

So you could write: %B has been touched %H times. The console will read: viewDidLoad() has been touched 1 times.
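If it helps to picture what the two placeholders stand for, here is a rough Swift imitation. It is only a sketch: HitLogger and logHit are made-up names, #function stands in for %B, and a per-function counter stands in for %H (the real %H counts hits on the breakpoint itself).

```swift
import Foundation

/// Hypothetical helper that imitates a Log Message breakpoint action.
/// `#function` plays the role of %B; the counter plays the role of %H.
final class HitLogger {
    private var hitCounts: [String: Int] = [:]

    /// Builds the line a "%B has been touched %H times" message would produce.
    func logHit(function: String = #function) -> String {
        let count = (hitCounts[function] ?? 0) + 1
        hitCounts[function] = count
        return "\(function) has been touched \(count) times"
    }
}

let logger = HitLogger()

// Stand-in for the view controller method that holds the breakpoint.
func viewDidLoad() -> String {
    return logger.logHit()
}

print(viewDidLoad()) // viewDidLoad() has been touched 1 times
print(viewDidLoad()) // viewDidLoad() has been touched 2 times
```

The difference, of course, is that the breakpoint version needs none of this code in your project.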

Build and run, try to add a Friend, and then let the program crash. If you hit the exception breakpoint you set up earlier, click continue so you can see the crash description. The stack trace reads:

*** Terminating app due to uncaught exception 'NSInvalidArgumentException', reason: '+entityForName: nil is not a legal NSManagedObjectContext parameter searching for entity name 'Friend''

Something is not working in Core Data.

Scanning the code, you see that the entity is created from the persistentContainer's viewContext. You have a hunch that persistentContainer is probably the cause of the problem.

Take a look further up in the Console view and you will find the stack trace also reads:

Failed to load model named GiftList

CoreData: error: Failed to load model named GiftList

Could not fetch. Error Domain=Foundation._GenericObjCError Code=0 "(null)", [:]

The error message is informing you that CoreData failed to load a data model named “GiftList”. If you look at the data model provided in the project, you will find it is actually named “GiftLister.”

Take another look at the code in AppDelegate.swift.

In my haste, I made a typo when providing the name argument for the Core Data stack’s persistentContainer. Instead of naming it “GiftLister”, I named it “GiftList.”

Change the name argument from “GiftList” to “GiftLister.”

let container = NSPersistentContainer(name: "GiftLister")

Build and run. Now try to add a Friend. Hooray — the app is (kind of) working!

![Gift Lister - now running!]()
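A typo like this one is easy to catch early. The sketch below is not part of the sample project. compiledModelExists is a hypothetical helper, and it assumes the data model is compiled into the app bundle with a .momd extension, which is what Xcode normally does for a versioned model.

```swift
import Foundation

/// Hypothetical helper: returns true when `bundle` contains a compiled
/// Core Data model with the given name.
/// Assumption: the model ships as <name>.momd inside the bundle.
func compiledModelExists(named name: String, in bundle: Bundle = .main) -> Bool {
    return bundle.url(forResource: name, withExtension: "momd") != nil
}

// The misspelled name from the crash would be flagged before Core Data is touched.
if !compiledModelExists(named: "GiftList") {
    print("No compiled model named GiftList in the bundle; check for a typo.")
}
```

Calling something like this before creating the persistent container turns a confusing Core Data exception into a one-line hint.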

Breakpoints and Expressions

So far so good, but you may have noticed that the breakpoint logging doesn’t show a timestamp of when the log message occurs, which can be useful for debugging purposes. The good news is that it’s easy to fix with breakpoint expressions!

Note: Date logging is indeed useful, but keep in mind it also makes the logging a bit slower as the system has to query all the date information. Keep that in mind if you ever find your logging calls lagging behind your application.

Let’s restore your log statements to their previous glory. Right-click or Control-click the second breakpoint in FriendSelectionViewController.swift. Click Edit Breakpoint. In the action, change from Log Message to Debugger Command and add the following to the text field:

expression NSLog("Loading friends...")

It should look like this:

![Expression NSLog]()

The Debugger command will now evaluate an expression in real time.

Build and run. You should now see the following:

2012-12-20 08:57:39.942 GiftLister[1984:11603] Loading friends...

Being able to add NSLog messages with a breakpoint means you no longer have to stop the program just to log important data, so there’s little chance you’ll introduce new bugs because you aren’t touching the code — but best of all, there’s no last-minute scrambles to remove all your debug statements the night before release.

Now to disable logging in the app. It’s simply a matter of clicking the breakpoints button in the debugger view.

![Disable Breakpoints Button]()

Click that button and then build and run. Your logs are now nice and clean. You can also turn off individual log calls in the breakpoint navigator.

The days of filling your codebase with commented-out log statements are now over! :]

MARKs, TODOs, FIXMEs, oh my!

The next thing to do is to create some friends so you can keep a list of gift suggestions for them.

Build and run the app. When the app starts, press the Add a friend cell. The app loads another view controller with a name text field and a date picker. Enter a name and select a birthday, then press the OK button.

You’ll be returned to the root controller with your new friend added to the table. Click Add a friend once again.

Enter the name of another friend, only this time select February 31st, 2010 for the birthday.

In a typical date picker, such a date would not be selectable. This is not the case with this amazing app! In a fit of delirium, I decided to be ambitious and use a regular picker, instead of the date picker. This meant I was forced to rewrite all of the date validation logic which, of course, created some new bugs.

Press the OK button. Tragically, the invalid date is recorded. It’s time to do a little debugging to see what is wrong.

Open AddFriendViewController.swift and add a breakpoint at the start of the method saveFriend.

Note: Finding methods in large files can take a lot of time. The long way is to manually scan each line until you stumble into it. The second way is to use the jump bar, then scroll through the list of method names. I actually prefer to do a search, although not in the search navigator, but in the jump bar itself. To do so, click on the jump bar then just start typing. Your method name should show up as if it were in a regular search field.

![Searching in the Jump Bar]()

In the simulator, press the Add a friend button, like your previous entry, and add an invalid date. Step down the method until you reach this line:

if name.hasText, isValidDateComposedOf(month: selectedMonth, day: selectedDay, year: selectedYear) {

Step into isValidDateComposedOf. The validation code failure is clear — there isn’t any! There’s just a comment promising to do it sometime in the future.
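For reference, the missing check could look something like the sketch below. It is not the sample project’s code. It assumes the three parameters are plain Int values and that the Gregorian calendar is the right one to validate against.

```swift
import Foundation

/// Sketch of the missing validation. Parameter types are assumed to be Int.
func isValidDateComposedOf(month: Int, day: Int, year: Int) -> Bool {
    let calendar = Calendar(identifier: .gregorian)
    var components = DateComponents()
    components.year = year
    components.month = month
    components.day = day

    // Calendar rolls impossible dates forward (February 31st lands in March),
    // so a date counts as valid only if it survives the round trip unchanged.
    guard let date = calendar.date(from: components) else { return false }
    let roundTrip = calendar.dateComponents([.year, .month, .day], from: date)
    return roundTrip.year == year && roundTrip.month == month && roundTrip.day == day
}

print(isValidDateComposedOf(month: 2, day: 31, year: 2010)) // false
print(isValidDateComposedOf(month: 2, day: 28, year: 2010)) // true
```

The round trip is the whole trick: rather than hand-coding month lengths and leap years, the calendar does the arithmetic and you compare the result to what was asked for.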

Comments are a nice way to describe the particular meaning of code chunks, but using them for task management is futile. Even on tiny projects, there are just too many items to juggle, and comment tasks will be forgotten.

The best way to prevent them from being lost is to really make them stand out. One way to make them stand out is to leave messages in the jump bar.

Open the jump bar. You should see something like this:

![TODO Message]()

You can also write FIXME: or MARK:.

MARK:, TODO:, and FIXME: comments you add to your code will appear in the jump bar. In addition, if you add a hyphen to a MARK: comment after the colon (e.g. MARK: - UIPickerViewDataSource), the jump bar message will add a horizontal rule above the comment to make it even easier to read!
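Here is roughly how the three kinds of comments look in a source file. BirthdayPicker and its methods are invented for illustration and are not part of Gift Lister.

```swift
import Foundation

// Hypothetical type, used only to show where the comments go.
struct BirthdayPicker {
    // The hyphen after the colon asks the jump bar for a separator line.
    // MARK: - Data source

    let months = ["January", "February", "March"]

    func numberOfRows() -> Int {
        return months.count
    }

    // A MARK without the hyphen adds a heading but no separator.
    // MARK: Validation

    func isValid(row: Int) -> Bool {
        // TODO: Reject rows outside the months array.
        // FIXME: Negative rows are currently accepted.
        return row < months.count
    }
}
```

Open the jump bar on a file like this and each of the tagged comments should appear alongside the method names.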

These statements don’t have the same emphasis as a compiler warning or error, but they at least have a greater visibility than a lone comment at the bottom of a method. It’s best to leave comments for, well, comments and keep a list of required tasks outside of the codebase.

Now, wouldn’t it be great if Xcode gave you a compiler warning whenever you have TODO: or FIXME: comments in your code? I thought so!

To do this, you’ll add a build script to the project that will search the code for all TODO: and FIXME: comments and then flag them as compiler warnings.

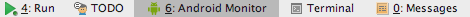

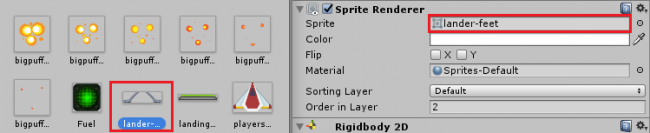

To add a build script, choose the project from the Project Navigator and select Build Phases. From here click on the + button to add a New Run Script Phase.

![Add Run Script Phase]()

Next, add the following code to your build script:

TAGS="TODO:|FIXME:"

echo "searching ${SRCROOT} for ${TAGS}"

find "${SRCROOT}" \( -name "*.swift" \) -print0 | xargs -0 egrep --with-filename --line-number --only-matching "($TAGS).*\$" | perl -p -e "s/($TAGS)/ warning: \$1/"

Your Run Script code should look like this:

![Run Script Code]()

Now build your project and show the issue navigator:

![Shell Script Invocation Warning]()

The TODO: comment now shows up as a Shell Script Invocation Warning and you won’t be able to forget about it! :]
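If the pipeline in the script looks cryptic, it may help to see the same transformation spelled out in Swift. This is only an illustration of the output format (path, line number, then the word warning), not something to add to the build phase. warningLines is a made-up name.

```swift
import Foundation

/// Mirrors the script's output: "path:line: warning: TAG: text".
/// Xcode picks up lines shaped like this from a Run Script phase.
func warningLines(inSource source: String,
                  path: String,
                  tags: [String] = ["TODO:", "FIXME:"]) -> [String] {
    var warnings: [String] = []
    let lines = source.components(separatedBy: "\n")
    for (index, line) in lines.enumerated() {
        // Find the leftmost tag on the line, as the egrep match would.
        let matches = tags.compactMap { line.range(of: $0) }
        guard let first = matches.min(by: { $0.lowerBound < $1.lowerBound }) else { continue }
        let tagged = String(line[first.lowerBound...])
        warnings.append("\(path):\(index + 1): warning: \(tagged)")
    }
    return warnings
}

let sample = "let x = 1\n// TODO: validate the date\n"
for warning in warningLines(inSource: sample, path: "AddFriendViewController.swift") {
    print(warning)
}
// prints "AddFriendViewController.swift:2: warning: TODO: validate the date"
```

In the real script, find supplies the full file paths, egrep does the matching, and perl inserts the word warning.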

Variables View & Return Values

Now let’s take a quick look at a nice little feature included since Xcode 4.4.

Restart the app, keeping the breakpoint fixed in the empty validation method. Now, step out of the code. Look at the Variables view in the debugger. You should see this:

![Variables view and Return Values]()

Displaying the return value is a feature that hasn’t received much attention, but it makes your life so much easier. Consider that the code was being called from here:

if name.hasText, isValidDateComposedOf(month: selectedMonth, day: selectedDay, year: selectedYear) {

The code that calls isValidDateComposedOf immediately uses the return value in an expression.

Before this was added to Xcode, you needed to break apart the line, then log out the value if you wanted to inspect return values. Now, you can simply step out of a method and see the return value right in the debugger.
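For comparison, the old workaround looked something like this. The functions here are simplified stand-ins rather than the project’s code, and the validator is deliberately crude.

```swift
import Foundation

// Hypothetical stand-ins for the text field check and the validator.
func hasText(_ name: String) -> Bool {
    return !name.isEmpty
}

func isValidDateComposedOf(month: Int, day: Int, year: Int) -> Bool {
    return (1...12).contains(month) && (1...31).contains(day) && year > 0
}

func canSave(name: String, month: Int, day: Int, year: Int) -> Bool {
    // The workaround: give the return value a name so it can be logged or inspected.
    let isValid = isValidDateComposedOf(month: month, day: day, year: year)
    print("isValidDateComposedOf returned \(isValid)")
    return hasText(name) && isValid
}

_ = canSave(name: "Ann", month: 2, day: 28, year: 2010)
```

Note that splitting the line also changes behavior slightly: the validator now runs even when the name is empty, which the original comma-separated condition would have skipped. That is one more reason to prefer stepping out and reading the return value in the Variables view.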

Conditions for Successful Debugging

There are times when it’s necessary to change the state of your program at certain intervals. Sometimes these changes occur in the middle of large sequences of events, which makes normal debugging quite difficult. That’s where conditions come into play.

Now that you have some friends listed in the app, tap one of their names in the root view controller to bring up the gift interface. It’s just a simple grouped table that can be sorted on whether the gift can be purchased or not.

Press the add button on the navigation bar to add a new item. For the name, put shoes. For the price, put 88.00. Tap the OK button. The shoes should now appear in the gift table.

Now add the following items:

- Candles / 1.99

- Sleigh / 540.00

- XBox / 299.99

- iPad / 499.99

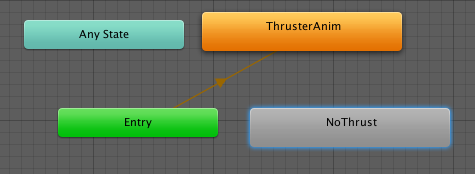

Yikes. You realized that you actually wanted to record a PS4 instead of an XBox. You could simply tap the cell to edit it, but for the sake of demonstration, you will edit it through the debugger.

Open up GiftListsViewController.swift and look for cellForRowAtIndexPath. Add a breakpoint on the line underneath the code that reads:

Like so:

![Gift Breakpoint]()

Now right-click or Control-click the breakpoint, and select Edit Breakpoint.

It’s time to add your condition. Think of this like a simple if statement. Add the following code:

![Condition Breakpoint]()

Now, press the Bought segmented control button. The table reloads new data, but the breakpoint doesn’t trip.

Press the Saved segmented control button. This time everything should pause with the highlighted item selected in the debugger console.

In the debugger console, add the following:

expression gift.name = "PS4"

Now, press the Run button and the table will continue to load. The PS4 replaces the XBox in the gift results.

You can get the same results by setting the number of iterations. Control-click or right-click the breakpoint, and select Edit Breakpoint. This time, remove the condition from its text field, and set the Ignore stepper to 2. Click Done.

![Ignore Stepper]()

Now press the Bought segmented control then the Saved segmented control. You should hit the same breakpoint.

To confirm that you are at the correct object, type:

Now revert the object to its previous state:

(lldb) expression gift.name = "XBox"

The table should now reflect the update. Isn’t real-time editing just great?
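To make the two breakpoint options concrete, here is a small model of each in Swift. It is a sketch under assumptions: Gift is a simplified stand-in for the Core Data entity, the condition shown (matching on the name XBox) is an example, and the order in which the table asks for cells is made up.

```swift
import Foundation

// Simplified stand-in for the Core Data entity.
struct Gift {
    var name: String
    var price: Double
}

/// Models the Condition field: the breakpoint pauses only for gifts
/// that satisfy the predicate, much like an if statement would.
func pausesWithCondition(_ gifts: [Gift], condition: (Gift) -> Bool) -> [String] {
    return gifts.filter(condition).map { $0.name }
}

/// Models the Ignore stepper: the first `ignoreCount` hits are skipped,
/// and the breakpoint pauses on every hit after that.
func pausesWithIgnoreCount(_ gifts: [Gift], ignoreCount: Int) -> [String] {
    return gifts.dropFirst(ignoreCount).map { $0.name }
}

// Assumed order in which the cells are requested.
let gifts = [
    Gift(name: "Candles", price: 1.99),
    Gift(name: "Sleigh", price: 540.00),
    Gift(name: "XBox", price: 299.99),
]

print(pausesWithCondition(gifts) { $0.name == "XBox" }) // ["XBox"]
print(pausesWithIgnoreCount(gifts, ignoreCount: 2))      // ["XBox"]
```

A condition keeps working when the order of the data changes, while an ignore count depends on it. That makes the condition the safer choice unless you really do mean "the third time through".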

Starting Up by Tearing Down

When developing data-driven apps, it’s often important to wipe the data store clean. There are a number of ways of doing this, from resetting the iPhone simulator to locating the actual data store on your computer and deleting it. Doing this over and over can be a bit tedious, so get a little lazy and have Xcode do it for you.

You’ll start by creating a shell script. A shell script is a list of commands that automate some actions of the operating system. To create a shell script, create a new file from the application menu. Click File\New\File or Command-N. From the category listings, select Other and then select Shell Script as the type.

![Shell Script Dialog]()

For the name, put wipe-db.sh.

![Shell Script Name]()

In order to wipe out the actual datastore, you need to use the remove command along with the full path to the data store (including the name for the current user). You could use Finder or Terminal to find the data store and then copy/paste its path into the shell script, but in Xcode 8, the name of the folder that contains the data store will continuously change each time you build and run the application.

To overcome this issue, you can use the whoami command to output the current user and the wildcard character * to provide for the changing folder names.

So enter the following into your script:

rm /Users/$(whoami)/Library/Developer/CoreSimulator/Devices/*/data/Containers/Data/Application/*/Library/Application\ Support/GiftLister.sqlite

Save the shell script and close it.
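Wildcards and rm are a nervous combination, so you may want to preview what the pattern would match before trusting it. The sketch below does that in Swift instead of the shell. It is a different technique from the script (a directory walk rather than a glob), storePaths is a made-up name, and the delete call is left commented out on purpose.

```swift
import Foundation

/// Returns the full path of every file called `storeName` below `root`.
func storePaths(named storeName: String, under root: String) -> [String] {
    guard let enumerator = FileManager.default.enumerator(atPath: root) else { return [] }
    var matches: [String] = []
    // The enumerator yields paths relative to `root`.
    for case let relativePath as String in enumerator {
        if (relativePath as NSString).lastPathComponent == storeName {
            matches.append((root as NSString).appendingPathComponent(relativePath))
        }
    }
    return matches
}

// Same starting folder as the script; walking it can take a while.
let devices = NSHomeDirectory() + "/Library/Developer/CoreSimulator/Devices"
for path in storePaths(named: "GiftLister.sqlite", under: devices) {
    print("Would delete \(path)")
    // try? FileManager.default.removeItem(atPath: path)
}
```

If the list looks right, the one-line shell script remains the lazier option for the breakpoint action.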

By default, a newly created shell script isn’t executable. You can use Terminal to set this script as executable.

If you don’t know where Terminal is located, you can find it in your Applications folder inside of the Utilities folder.

Start Terminal and change your location to your home directory by entering the following:

YourComputer$ cd ~

Now, list the contents of the directory by typing:

YourComputer$ ls

You will have to navigate to the location of your project folder. If you placed it on your desktop, you would navigate to it by typing:

YourComputer$ cd Desktop

YourComputer$ cd GiftLister

If you have to navigate up a directory, type the following:

YourComputer$ cd ..

After a long crawl through Terminal, you should see all the project files. To make the shell script executable, type the following:

YourComputer$ chmod a+x wipe-db.sh

chmod changes the permissions of a file. a+x makes the file executable for everyone: the owning user, the group, and all other users.
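Under the hood, permissions are just bits, and a+x switches on three of them. Here is the arithmetic as a tiny Swift sketch. The starting mode of 644 is an assumption about how the file was created, and addingExecuteForAll is a made-up name.

```swift
import Foundation

/// Turns on the execute bit for user, group, and others,
/// which is what `a+x` asks chmod to do.
func addingExecuteForAll(to mode: Int) -> Int {
    return mode | 0o111
}

let before = 0o644                          // rw-r--r--, assumed starting mode
let after = addingExecuteForAll(to: before) // rwxr-xr-x
print(String(after, radix: 8))              // 755
```

Read and write permissions are untouched; only the three execute bits change.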

Wow… that was a lot. Take a breather. You deserve it. Sometimes being lazy takes a lot of work. :]

Close Terminal and return to Xcode. Open AppDelegate.swift.

Set a breakpoint on the first line of didFinishLaunchingWithOptions. Right-click or Control-click the breakpoint and select Edit Breakpoint. Add an action and select Shell Command. In the next dialog, click Choose and select the shell script you just created. Click the Automatically continue after evaluating checkbox, and click away.

Stop the simulator if it is running.

Now build and run; the database has been deleted.

The simulator tends to cache a lot of data, so I find the best thing to do is perform a clean build by selecting Clean from Xcode’s Product menu, then build and run. Otherwise, you can run the app, stop it, then run it again. The cached data will be gone with a brand-spanking new database.

While it did take a bit of work to set up, clearing out the database can now be performed with the press of a button. When not in use, simply disable the breakpoint.

Note: You just created a shell script and wrote a simple Unix command to delete the file. You could just as easily have loaded a PHP file within the shell script to do the same thing. You could also launch a Java program, Python script, or any other program on the machine. The key point is that you don’t need to learn shell scripting to manipulate the underlying operating system through a breakpoint.

Bonus Material: Sounding Out Your Save Methods

At this point, you should have plenty of data in the app. It’s time to save it all.

With apps like this, saving should be done frequently so that nothing is lost. That’s not the case with this app. It only saves when the user exits the application.

If you aren’t already there, click Back on the navbar to return to the root view controller, then simulate a Home button press. You can do this from the Simulator’s menu by selecting Hardware\Home or by pressing Shift-Command-H.

Now stop the program from Xcode, and build and run. The tableview is empty. The app failed to save anything. Hm.

Open AppDelegate.swift. In applicationDidEnterBackground, you should see the problem at once in doLotsOfWork. The work isn’t being finished in time, so iOS is terminating your app before it finishes its cleanup. The result of this early termination is that saveContext is not being called.

You’ll need to make sure that data is saved first. In applicationDidEnterBackground, move the saveContext call above doLotsOfWork call like so:

saveContext()

doLotsOfWork()

Now, add a breakpoint on the doLotsOfWork line. Right-click or Control-click the breakpoint and select Edit Breakpoint. Select a sound action and choose Submarine as the sound. When dealing with sound actions, I try to avoid system sounds, as I may easily overlook them.

Next, click the checkbox next to Automatically continue after evaluating.

Finally, click build and run.

![Sound Breakpoint]()

When the app starts again, add a new friend then press the Home button in the simulator. Just after the app closes, you should hear the submarine sound, indicating that the data has been saved.

Stop the app in Xcode, then press Run. You should see the data in all its glory.

Playing a sound is a good way to know if a certain code path has been reached without having to look through the logs. You can also provide your own custom sounds in case you want to play an explosion for a particularly bad crash.

To do so, just drop your sound files in this folder:

YOUR_HOME_DIRECTORY/Library/Sounds

You’ll have to restart Xcode before you can use them, but think of all the potential shenanigans. :]

Time for one last bit of fun. Find your first breakpoint in FriendSelectionViewController and Control-click or right-click the breakpoint. Click Edit Breakpoint from the menu. In the dialog, click the plus button; this lets you add multiple actions to a single breakpoint.

Select the Log Message action, only this time, type To be, or not to be. Select the Speak Message radio button, then click Done. The dialog should look like this:

![Shakespeare Dialog]()

Now build and run and enjoy the performance!

Note: Novelty aside, this feature can be quite useful! Audio messages can be especially useful when debugging complicated networking code and the like.

Where to Go from Here?

You can download the finished project here.

As you can see, Xcode debugging tools have a lot of flexibility in meeting the day-to-day challenges you face in your development process. For example, LLDB provides the ability to dynamically inspect and update your code without having to worry about injecting any additional bugs.

Believe it or not, this is just the beginning. LLDB provides a host of other features such as custom variable summaries, dynamic breakpoints, and even custom scripting of the debugger through Python.

Granted, moving beyond NSLog() or

If you want to learn more, LLDB is a good place to start. A great place to begin with LLDB is Brian Moakley’s Video Tutorial: Using LLDB in iOS.

New features for LLDB were also highlighted in the WWDC 2016 session: Debugging Tips and Tricks.

If you have any questions or comments, feel free to join the discussion below!

The post Intermediate Debugging with Xcode 8 appeared first on Ray Wenderlich.