This is an abridged chapter from our book watchOS by Tutorials, which has been completely updated for Swift 4 and watchOS 4. This tutorial is presented as part of our iOS 11 Launch Party — enjoy!

Core Bluetooth has been around since 2011 on macOS and iOS, since 2016 on tvOS, and now it’s available on watchOS, with an Apple Watch Series 2.

What’s Core Bluetooth? It’s Apple’s framework for communicating with devices that support Bluetooth 4.0 Low Energy, often abbreviated as BLE. It opened up standard communication protocols for reading from and writing to external devices.

Back in 2011, there weren’t many BLE devices, but now? Well, this is from the Bluetooth site (bit.ly/2j1DqpU):

“More than 31,000 Bluetooth member companies introducing over 17,000 thousand new products per year and shipping more than 3.4 billion units each year.”

BLE is everywhere: in health monitors, home appliances, fitness equipment, Arduino and toys. In July 2017, Apple announced its collaboration with hearing-aid implant manufacturer Cochlear, to create the first “Made for iPhone” implant. (bit.ly/2vZahUU)

Note: Cochlear is an Australian company, and the app was built here in Australia! (bit.ly/2uztOHX)

But you don’t have to acquire a specific gadget to work through this tutorial: the sample project uses an iPhone as the BLE device. In the app you’ll build, the iOS device provides a two-part service: it transfers text to the Watch, or the Watch can open Maps at the user’s location on the iOS device.

The first part is a Swift translation of Apple’s BLE_Transfer sample app. It’s a very useful example, because it shows how to send 20-byte chunks of data. I added the Maps part to show you how to send a control instruction to the BLE device, and because I thought it was something you’d want to do from the Watch Maps app: open Maps on a larger display so you can find what you need more easily!

Getting Started

Note: The simulator does not support Core Bluetooth. To run the starter app, you need two iOS 11 devices. To run the finished app, you need an Apple Watch Series 2 and an iOS 11 device.

Download the starter app for this tutorial here.

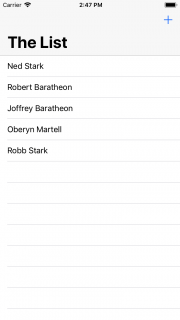

Open the starter app. Build and run on two iOS devices. Go into Settings to trust the developer, then build and run again.

Select Peripheral Mode on one device, and Central Mode on the other. Tap the peripheral’s Advertising switch to start advertising.

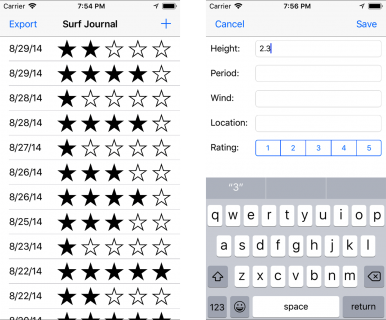

PeripheralViewController has a textView, prepopulated with some text. When the central manager subscribes to textCharacteristic, the peripheral sends this text in 20-byte chunks to the central, where it appears in a textView:

Modify the peripheral’s text, and tap Done. The peripheral sends the updated value to the central:

Note: I deleted “sample” from the first sentence.

When the central has discovered mapCharacteristic, the title of CentralViewController’s bar button changes to Map Me. Tap this bar button, allow the app to use your location, then tap Map Me again: the peripheral device opens the Maps app, at your location:

Stop the app on both devices. It’s time to learn about Core Bluetooth, and look at some code!

Note: You can tap TextMeMapMe to go back to the peripheral view, but sometimes, Maps keeps re-opening.

What is Core Bluetooth?

Let’s start with some vocabulary.

A Generic Attributes (GATT) profile describes how to bundle, present and transfer data using Bluetooth Low Energy. It describes a use case, roles and general behaviors. A device’s GATT database can describe a hierarchy of services, characteristics and attributes.

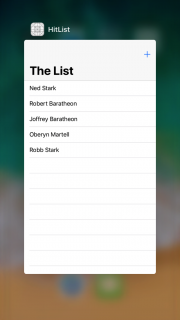

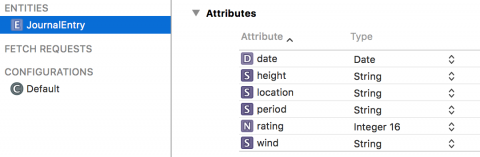

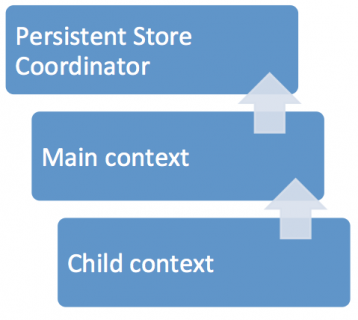

The classic server/client roles are the central app and the peripheral or accessory. In the starter app, either iOS device can be central or peripheral. When you build the Watch app, it can only be the central device.

A peripheral offers services. For example, Blood Pressure monitor is a pre-defined GATT service (bit.ly/2lfpqwB). In the starter app, the service is TextOrMap.

A service has characteristics. The Blood Pressure service has two pre-defined GATT characteristics (bit.ly/2vOhGqa): Blood Pressure Feature, which describes the blood pressure monitor features this sensor supports, and Blood Pressure Measurement. A characteristic has a value, properties to indicate operations the characteristic supports, and security permissions.

A central app can read or write a service’s characteristics, such as reading the user’s heart rate from a heart-rate monitor, or writing the user’s preferred temperature to a room heater/cooler. In the starter app, the TextOrMap service has two characteristics: the peripheral sends updates of the textCharacteristic value to the central; when the central writes the mapCharacteristic value, the peripheral opens the Maps app at the user’s location.

Services and characteristics have UUIDs: universally unique identifiers. There are predefined UUIDs for standard peripheral devices, like heart monitors or home appliances. You can use the command line utility uuidgen to create custom UUID strings, then use these to initialize CBUUID objects.
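Here’s a minimal sketch of a sanity check you might run on a uuidgen string before handing it to CBUUID. The UUID value and the helper name isWellFormedUUID are made up for illustration; they aren’t the sample project’s identifiers.

```swift
import Foundation

// Hypothetical string of the kind `uuidgen` prints; not the sample project's value.
let candidateServiceUUID = "12345678-1234-1234-1234-1234567890AB"

/// Returns true if `string` parses as a 128-bit UUID in the 8-4-4-4-12 hex format.
func isWellFormedUUID(_ string: String) -> Bool {
  return UUID(uuidString: string) != nil
}

// On an Apple platform, a string that passes this check could then be used as:
//   let serviceUUID = CBUUID(string: candidateServiceUUID)
```

This check only covers custom 128-bit UUIDs. The short 16-bit form used for predefined services, such as 1810 for Blood Pressure, is also valid for CBUUID, but this helper would reject it.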

The default packet payload is 27 bytes, which leaves an ATT Maximum Transmission Unit (MTU) of 23 bytes and just 20 bytes for the characteristic value, because the L2CAP header uses 4 bytes and the ATT header another 3. You can improve throughput using write without response, if the characteristic allows this, because you don’t have to wait for the peripheral’s response. If your central app and peripheral are running on iPhone 7, the new iPad Pro, or Apple Watch Series 2, you get the Extended Data Length of 251 bytes! I’m testing the sample app on an iPhone SE, so I’m stuck with 20-byte chunks.
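To make the 20-byte limit concrete, here’s a rough sketch of splitting a message into chunks. The function name chunks(of:chunkSize:) is my own for this illustration, and the sample project may slice its data differently.

```swift
import Foundation

/// Splits `data` into consecutive chunks of at most `chunkSize` bytes.
func chunks(of data: Data, chunkSize: Int = 20) -> [Data] {
  precondition(chunkSize > 0, "chunkSize must be positive")
  var result: [Data] = []
  var start = data.startIndex
  while start < data.endIndex {
    let end = min(start + chunkSize, data.endIndex)
    // subdata(in:) copies the range into a new, independent Data value.
    result.append(data.subdata(in: start..<end))
    start = end
  }
  return result
}

let text = "Lorem ipsum dolor sit er elit lamet sample text."
let pieces = chunks(of: Data(text.utf8))
```

Every chunk except possibly the last is exactly 20 bytes, and appending the chunks in order gives back the original data.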

Overview

The most interesting classes in the Core Bluetooth framework are CBCentralManager and CBPeripheralManager. Each has methods and a comprehensive delegate protocol, to monitor activity between central and peripheral devices. There’s also a peripheral delegate protocol. Everything comes together in an intricate dance!

Think about what the devices need to do:

- Central devices need to scan for and connect to peripherals. Peripherals need to advertise their services.

- Once connected, the central device needs to discover the peripheral’s services and characteristics, using peripheral delegate methods. Often at this point, an app might present a list of these for the user to select from.

- If the central app is interested in a characteristic, it can subscribe to notifications of updates to the characteristic’s value, or send a read/write request to the peripheral. The peripheral then responds by sending data to the central device, or doing something with the write request’s value. The central app receives updated data from another peripheral delegate method, and usually uses this to update its UI.

- Eventually, the central device might disable a notification, triggering delegate methods of the peripheral and the peripheral manager. Or the central device disconnects the peripheral, which triggers a central manager delegate method, usually used to clean up.

Now look at what each participant does.

Central Manager

A central manager’s main jobs are:

- If Bluetooth LE is available and turned on, the central manager scans for peripherals.

- If a peripheral’s signal is in range, it connects to the peripheral. It also discovers services and characteristics, which it may display to the user to select from, subscribes to characteristics, or requests to read or write a characteristic’s value.

Central Manager Methods & Properties:

- Initialize with delegate, queue and optional options.

- Connect to a peripheral, with options.

- Retrieve known peripherals (array of UUIDs) or connected peripherals (array of service UUIDs).

- Scan for peripherals with services and options, or stop scanning.

- Properties: delegate, isScanning

Peripheral Manager

A peripheral manager’s main jobs are to manage and advertise the services in the GATT database of the peripheral device. You would implement this for an Apple device acting as a peripheral. Non-Apple accessories have their own manager APIs. Most of the sample BLE apps you can find online use non-Apple accessories like Arduino.

If Bluetooth LE is available and turned on, the peripheral manager sets up characteristics and services. And it can respond when a central device subscribes to a characteristic, requests to read or write a characteristic value, or unsubscribes from a characteristic.

Peripheral Manager Methods & Properties:

- Initialize with delegate, queue and optional options.

- Start or stop advertising peripheral manager data.

- updateValue(_:for:onSubscribedCentrals:)

- respond(to:withResult:)

- Add or remove services.

- setDesiredConnectionLatency(_:for:)

- Properties: delegate, isAdvertising

Central Manager Delegate Protocol

Methods in this protocol indicate availability of the central manager, and monitor discovering and connecting to peripherals. Follow along in the CBCentralManagerDelegate extension of CentralViewController.swift, as you work through this list:

- centralManagerDidUpdateState(_:) is the only required method. If the central is poweredOn (Bluetooth LE is available and turned on), you should start scanning for peripherals. You can also handle the cases poweredOff, resetting, unauthorized, unknown and unsupported, but you must not issue commands to the central manager when it isn’t powered on.

- When the central manager discovers a peripheral, centralManager(_:didDiscover:advertisementData:rssi:) should save a local copy of the peripheral. Check the received signal strength indicator (RSSI) to see if the peripheral’s signal is strong enough: -22dB is good, but two iOS devices placed right next to each other produce a much lower RSSI, often below -35dB. If the peripheral’s RSSI is acceptable, try to connect to it with the central manager’s connect(_:options:) method.

- If the connection attempt fails, you can check the error in the delegate method centralManager(_:didFailToConnect:error:). If the error is something transient, you can call connect(_:options:) again.

- When the connection attempt succeeds, implement centralManager(_:didConnect:) to stop scanning, reset characteristic values, set the peripheral’s delegate property, then call the peripheral’s discoverServices(_:) method. The argument is an array of service UUIDs that your app is interested in. After this, it’s up to the peripheral delegate protocol to discover characteristics of the services.

- In centralManager(_:didDisconnectPeripheral:error:), you can clean up, then start scanning again.
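The RSSI check in centralManager(_:didDiscover:advertisementData:rssi:) can be sketched as a small helper that’s easy to test on its own. The SignalWindow type and both threshold values are assumptions for illustration, not values from the sample project; you’d tune them for your own devices.

```swift
import Foundation

/// Hypothetical acceptance window for RSSI readings; tune the bounds for your devices.
struct SignalWindow {
  var weakest: Int    // readings below this are treated as too far away
  var strongest: Int  // readings above this are treated as out of the expected range

  /// Returns true if `rssi` lies inside the window, bounds included.
  func accepts(_ rssi: Int) -> Bool {
    return rssi >= weakest && rssi <= strongest
  }
}

// Assumed bounds, chosen only so that both readings mentioned in the text pass.
let window = SignalWindow(weakest: -50, strongest: -15)
```

Inside the delegate method, the rssi parameter arrives as an NSNumber, so you’d pass its intValue to accepts(_:) and call connect(_:options:) only when it returns true.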

Peripheral Manager Delegate Protocol

Methods in this protocol indicate availability of the peripheral manager, verify advertising, and monitor read, write and subscription requests from central devices. Follow along in the CBPeripheralManagerDelegate extension of PeripheralViewController.swift, as you work through this list:

- peripheralManagerDidUpdateState(_:) is the only required method. You handle the same cases as the corresponding centralManagerDidUpdateState(_:). If the peripheral is poweredOn, you should create the peripheral’s services, and their characteristics.

- peripheralManagerDidStartAdvertising(_:error:) is called when the peripheral manager starts advertising the peripheral’s data.

- When the central subscribes to a characteristic, by enabling notifications, peripheralManager(_:central:didSubscribeTo:) should start sending the characteristic’s value.

- When the central disables notifications for a characteristic, you can implement peripheralManager(_:central:didUnsubscribeFrom:) to stop sending updates of the characteristic’s value.

- To send a characteristic’s value, sendData() uses the peripheral manager method updateValue(_:for:onSubscribedCentrals:). This method returns false if the transmit queue is full. When the transmit queue has space, the peripheral manager calls peripheralManagerIsReady(toUpdateSubscribers:). You should implement this delegate method to resend the value.

- The central can send read or write requests, which the peripheral handles with peripheralManager(_:didReceiveRead:) or peripheralManager(_:didReceiveWrite:). When implementing these methods, you should call the peripheral manager method respond(to:withResult:) exactly once. The sample app implements only peripheralManager(_:didReceiveWrite:); reading the text data is accomplished by subscribing to textCharacteristic.
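The back-pressure described for updateValue(_:for:onSubscribedCentrals:) can be modeled without any Bluetooth hardware. In this sketch, the ChunkSender class and its transmit closure are hypothetical stand-ins: the closure plays the role of updateValue and returns false when the transmit queue is full. It isn’t the sample app’s sendData(), just one way to picture the same loop.

```swift
import Foundation

/// Sends queued chunks in order, pausing whenever the transmit queue pushes back.
final class ChunkSender {
  private var pending: [Data]
  /// Stand-in for updateValue(_:for:onSubscribedCentrals:); false means "queue full".
  private let transmit: (Data) -> Bool

  init(chunks: [Data], transmit: @escaping (Data) -> Bool) {
    self.pending = chunks
    self.transmit = transmit
  }

  /// True once every chunk has been accepted by `transmit`.
  var isFinished: Bool { return pending.isEmpty }

  /// Call once to start, then again each time the queue reports it has space.
  func sendData() {
    while let next = pending.first {
      // If the queue is full, keep the chunk at the front and wait for the next call.
      guard transmit(next) else { return }
      pending.removeFirst()
    }
  }
}
```

In a real peripheral, the second and later calls to sendData() would come from peripheralManagerIsReady(toUpdateSubscribers:). Because a rejected chunk stays at the front of the queue, nothing is lost or sent out of order.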

Peripheral Delegate Protocol

A peripheral delegate can respond when a central device discovers its services or characteristics, or requests to read a characteristic, or when a characteristic’s value is updated. It can also respond when a central device writes a characteristic’s value, or disconnects a peripheral. Follow along in the CBPeripheralDelegate extension of CentralViewController.swift, as you work through this list:

- The sample app just checks the error in peripheral(_:didDiscoverServices:), but some apps might present a list of peripheral.services for the user to select from.

- And similarly for peripheral(_:didDiscoverCharacteristicsFor:error:).

- When the peripheral manager updates a value that the central subscribed to, or requested to read, implement peripheral(_:didUpdateValueFor:error:) to use that value in your app. The sample app collects the chunks, then displays the complete text in the view controller’s text view.

- Implement peripheral(_:didUpdateNotificationStateFor:error:) to handle the central device enabling or disabling notifications for a characteristic. The sample app just logs the information.

- There’s a runtime warning if you don’t implement peripheral(_:didModifyServices:), so I added this stub.
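Collecting chunks on the central side could look something like the sketch below. It assumes the sender marks the end of a transfer with a final chunk containing the text EOM. That marker, and the ChunkCollector type, are assumptions for this illustration, so check the sample project for how it actually signals the end of the text.

```swift
import Foundation

/// Accumulates received chunks until an end-of-message marker arrives.
struct ChunkCollector {
  /// Assumed marker; the sender must transmit it as its own final chunk.
  static let endOfMessage = "EOM"
  private var buffer = Data()

  init() {}

  /// Feeds one received chunk. Returns the complete text when the marker arrives,
  /// and nil while the transfer is still in progress.
  mutating func receive(_ chunk: Data) -> String? {
    if String(data: chunk, encoding: .utf8) == ChunkCollector.endOfMessage {
      // Decode only the complete buffer, so a multi-byte character that was
      // split across two chunks is still decoded correctly.
      let text = String(decoding: buffer, as: UTF8.self)
      buffer = Data()
      return text
    }
    buffer.append(chunk)
    return nil
  }
}
```

You’d call receive(_:) from peripheral(_:didUpdateValueFor:error:) with the characteristic’s value, and update the UI only when it returns a non-nil string.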

watchOS vs iOS

iOS apps can be central or peripheral, and can continue using Core Bluetooth in the background.

watchOS and tvOS both rely on Bluetooth as their main system input, so Core Bluetooth has restrictions, to ensure system activities can run. Both can be only the central device, and can use at most two peripherals at a time. Peripherals are disconnected when the app is suspended. And the minimum interval between connections is 30ms, instead of 15ms for iOS and macOS.

Now finally, you’re going to build the Watch app!

Building the Watch App

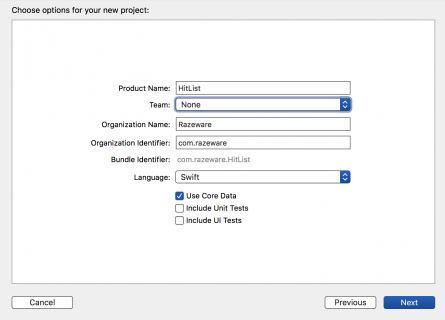

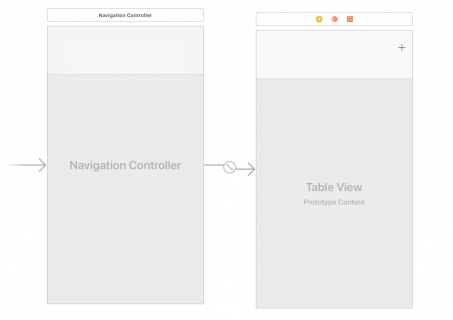

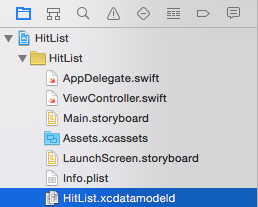

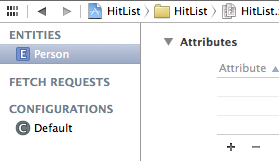

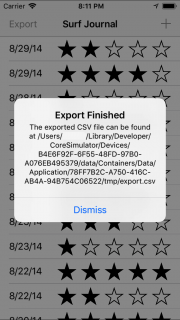

As you’ve done many times already, select Xcode\File\New\Target… and choose watchOS\WatchKit App:

Name the product BT_WatchKit_App, uncheck Include Notification Scene, and select Finish:

There are now three targets: TextMeMapMe, BT_WatchKit_App and BT_WatchKit_App Extension. Check that all three have the same team.

Creating the Interface

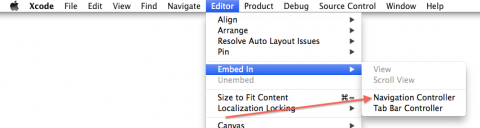

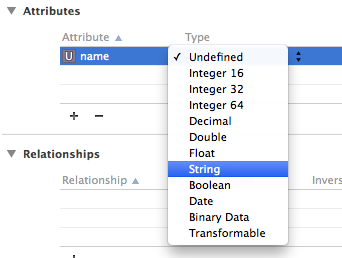

Open BT_WatchKit_App/Interface.storyboard, and drag two buttons, two labels, and a menu onto the scene. Set the background color of the buttons to different colors, and set their titles to wait…:

Select the two buttons, then select Editor\Embed in\Horizontal Group. Set each button’s width to 0.5 Relative to Container, and leave Height Size To Fit Content:

Set each label’s Font to Footnote, and Lines to 0, and set the second label’s Text to Transferred text appears here:

Set the Menu Item’s Title to Reset, with Image Repeat:

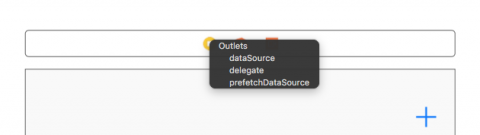

Open the assistant editor, and create outlets (textButton, mapButton, statusLabel, textLabel) and actions (textMe, mapMe, resetCentral) in InterfaceController.swift.

Reduce the Amount of Text to Send

Data transfer to the Watch is slower than to an iOS device, so open the iOS app’s Main.storyboard, and delete the second sentence from PeripheralViewController’s textView, leaving only Lorem ipsum dolor sit er elit lamet sample text.

Copy-Pasting and Editing CentralViewController code

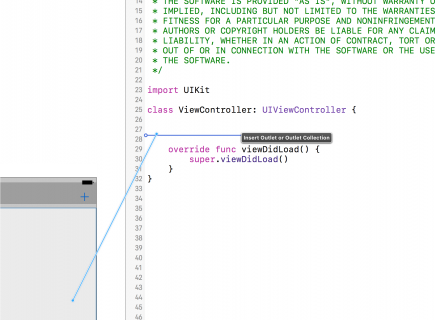

First, select SharedConstants.swift, and open the file inspector to add BT_WatchKit_App Extension to its target membership:

Now you’ll mostly copy code from CentralViewController.swift, paste it into InterfaceController.swift, and do a small amount of editing.

First, import CoreBluetooth:

import CoreBluetooth

Below the outlets, copy and paste the central manager, peripheral, characteristic and data properties, then edit mapCharacteristic to set the title of mapButton, and add a similar observer to textCharacteristic:

```swift
var centralManager: CBCentralManager!
var discoveredPeripheral: CBPeripheral?

var textCharacteristic: CBCharacteristic? {
  didSet {
    // Once the text characteristic is discovered, show that the button is ready.
    if let _ = self.textCharacteristic {
      textButton.setTitle("Text Me")
    }
  }
}

var data = Data()

var mapCharacteristic: CBCharacteristic? {
  didSet {
    // Once the map characteristic is discovered, show that the button is ready.
    if let _ = self.mapCharacteristic {
      mapButton.setTitle("Map Me")
    }
  }
}
```

This lets the user know that the Watch app has discovered the text and map characteristics, so it’s now safe to read or write them.

Copy and paste the helper methods scan() and cleanup(), then copy and paste the two delegate extensions. Change the two occurrences of extension CentralViewController to extension InterfaceController:

// MARK: - Central Manager delegate

extension InterfaceController: CBCentralManagerDelegate {

and

// MARK: - Peripheral Delegate

extension InterfaceController: CBPeripheralDelegate {

In peripheral(_:didDiscoverCharacteristicsFor:error:), delete the line that subscribes to textCharacteristic:

peripheral.setNotifyValue(true, for: characteristic)

Subscribing causes the peripheral to send the text data, so you’ll move this to the Text Me button’s action, giving the user more control over how the Watch app spends its restricted BLE allowance.

In peripheral(_:didUpdateValueFor:error:), replace the textView.text line (where the error is) with these two lines:

statusLabel.setHidden(true)

textLabel.setText(String(data: data, encoding: .utf8))

And add this line just below the line that creates stringFromData:

statusLabel.setText("received \(stringFromData ?? "nothing")")

Everything happens more slowly on the Watch, so you’ll use statusLabel to tell the user what’s happening while they wait. Just before the transferred text appears, you hide statusLabel, to make room for the text.

Use statusLabel in other places: add this line to scan():

statusLabel.setText("scanning")

And this line to centralManager(_:didDiscover:advertisementData:rssi:):

statusLabel.setText("discovered peripheral")

And to centralManager(_:didConnect:):

statusLabel.setText("connected to peripheral")

Add similar status updates to the peripheral delegate methods, when services and characteristics are discovered.

Next, scroll up to awake(withContext:), and copy-paste this line from viewDidLoad():

centralManager = CBCentralManager(delegate: self, queue: nil)

Delete the methods willActivate() and didDeactivate().

Now fill in the actions. Add these lines to textMe():

guard let characteristic = textCharacteristic else { return }

discoveredPeripheral?.setNotifyValue(true, for: characteristic)

Tapping the Text Me button subscribes to textCharacteristic, which triggers peripheralManager(_:central:didSubscribeTo:) to send data.

Next, copy these lines from mapUserLocation() into mapMe():

guard let characteristic = mapCharacteristic else { return }

discoveredPeripheral?.writeValue(Data(bytes: [1]), for: characteristic, type: .withoutResponse)

mapUserLocation() and mapMe() do the same thing: write the value of mapCharacteristic, which triggers peripheralManager(_:didReceiveWrite:) to open the Maps app.

Before you implement resetCentral(), add this helper method:

```swift
fileprivate func resetUI() {
  statusLabel.setText("")
  statusLabel.setHidden(false)
  textLabel.setText("Transferred text appears here")
  textButton.setTitle("wait")
  mapButton.setTitle("wait")
}
```

You’re just setting the label and button titles back to what they were, and unhiding statusLabel.

Now add these two lines to resetCentral():

cleanup()

resetUI()

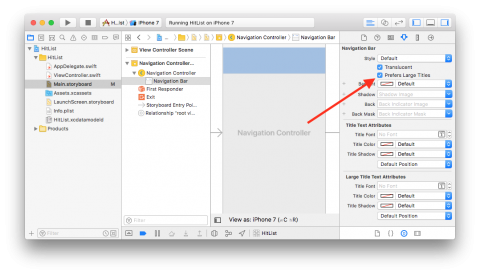

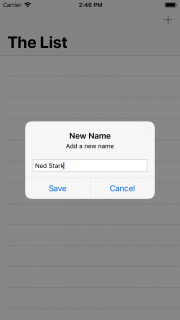

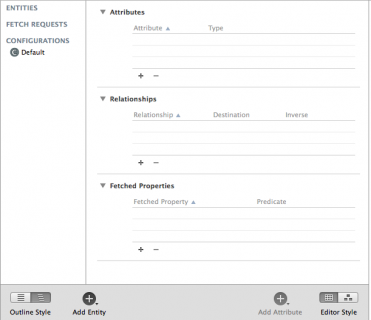

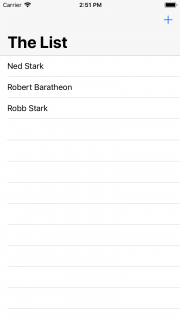

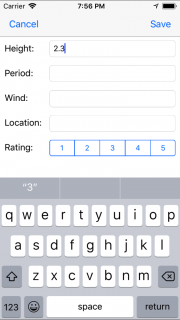

Build and run on your Apple Watch + iPhone. You’ll probably have to do this a couple of times, to “trust this developer” on the iPhone and on the Watch. At the time of writing, instead of telling you what to do, the Watch displays this error message:

Press the digital crown to manually open the app on your Watch, and trust this developer. The app will then start, but stop it, then build and run again. Select Peripheral mode on the iPhone, and turn on Advertising.

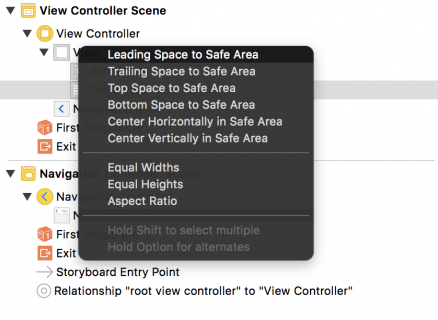

And wait. Scanning, connection and discovery take longer when the central is a Watch instead of an iPhone. When the Watch app discovers the TextOrMap characteristics, it updates the button titles:

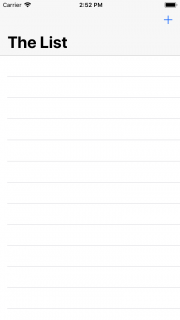

Tap Text Me, and wait. You’ll see the text appear on the Watch, and statusLabel disappears:

Tap Map Me, and allow use of your location. Tap Map Me again, and the iPhone opens the Maps app at your location.

Stop the Watch app in Xcode, and close the iPhone app.

Congratulations! You’ve built a Watch app that uses an iPhone as a Bluetooth LE peripheral! Now you can connect all the things!

Where to Go From Here?

You can download the completed project for the tutorial here.

The audio recording and playback API of watchOS 4 makes it possible to deliver a smooth multimedia experience on the Apple Watch even when the paired iPhone isn’t in proximity. This is a technology with endless possibilities.

If you enjoyed what you learned in this tutorial, why not check out the complete watchOS by Tutorials book, available in our store?

Here’s a taste of what’s in the book:

Chapter 1, Hello, Apple Watch!: Dive straight in and build your first watchOS 4 app — a very modern twist on the age-old “Hello, world!” app.

Chapter 2, Designing Great Watch Apps: Talks about the best practices based on Apple recommendations in WWDC this year, and how to design a Watch app that meets these criteria.

Chapter 3, Architecture: watchOS 4 might support native apps, but they still have an unusual architecture. This chapter will teach you everything you need to know about this unique aspect of watch apps.

Chapter 4, UI Controls: There’s not a `UIView` to be found! In this chapter you’ll dig into the suite of interface objects that ship with WatchKit–watchOS’ user interface framework.

Chapter 5, Pickers: `WKInterfacePicker` is one of the programmatic ways to work with the Digital Crown. You’ll learn how to set one up, what the different visual modes are, and how to respond to the user interacting with the Digital Crown via the picker.

Chapter 6, Layout: Auto Layout? Nope. Springs and Struts then? Nope. Guess again. Get an overview of the layout system you’ll use to build the interfaces for your watchOS apps.

Chapter 7, Tables: Tables are the staple ingredient of almost any watchOS app. Learn how to set them up, how to populate them with data, and just how much they differ from `UITableView`.

Chapter 8, Navigation: You’ll learn about the different modes of navigation available on watchOS, as well as how to combine them.

Chapter 9, Digital Crown and Gesture Recognizers: You’ll learn about accessing Digital Crown raw data, and adding various gesture recognizers to your watchOS app interface.

Chapter 10, Snapshot API: Glances are out, and the Dock is in! You’ll learn about the Snapshot API to make sure that the content displayed is always up-to-date.

Chapter 11, Networking: `NSURLSession`, meet Apple Watch. That’s right, you can now make network calls directly from the watch, and this chapter will show you the ins and outs of doing just that.

Chapter 12, Animation: The way you animate your interfaces has changed with watchOS 3, with the introduction of a single, `UIView`-like animation method. You’ll learn everything you need to know about both animated image sequences and the new API in this chapter.

Chapter 13, CloudKit: You’ll learn how to keep the watch and phone data in sync even when the phone isn’t around, as long as the user is on a known WiFi network.

Chapter 14, Notifications: watchOS offers support for several different types of notifications, and allows you to customize them to the individual needs of your watch app. In this chapter, you’ll get the complete overview.

Chapter 15, Complications: Complications are small elements that appear on the user’s selected watch face and provide quick access to frequently used data from within your app. This chapter will walk you through the process of setting up your first complication, along with introducing each of the complication families and their corresponding layout templates.

Chapter 16, Watch Connectivity: With the introduction of native apps, the way the watch app and companion iOS app share data has fundamentally changed. Out are App Groups, and in is the Watch Connectivity framework. In this chapter you’ll learn the basics of setting up device-to-device communication between the Apple Watch and the paired iPhone.

Chapter 17, Audio Recording: As a developer, you can now record audio directly on the Apple Watch inline in your apps, without relying on the old-style system form sheets. In this chapter, you’ll gain a solid understanding of how to implement this, as well as learn about some of the idiosyncrasies of the APIs, which are related to the unique architecture of a watch app.

Chapter 18, Interactive Animations with SpriteKit and SceneKit: You’ll learn how to apply SpriteKit and SceneKit in your Watch apps, and how to create interactive animations of your own.

Chapter 19, Advanced Watch Connectivity: In Chapter 16, you learned how to set up a Watch Connectivity session and update the application context. In this chapter, you’ll take a look at some of the other features of the framework, such as background transfers and real-time messaging.

Chapter 20, Advanced Complications: Now that you know how to create a basic complication, this chapter will walk you through adding Time Travel support, as well as giving you the lowdown on how to efficiently update the data presented by your complication.

Chapter 21, Handoff Video Playback: Want to allow your watch app users to begin a task on their watch and then continue it on their iPhone? Sure you do, and this chapter will show exactly how to do that through the use of Handoff.

Chapter 22, Core Motion: The Apple Watch doesn’t have every sensor the iPhone does, but you can access what is available via the Core Motion framework. In this chapter, you’ll learn how to set up Core Motion, how to request authorization, and how to use the framework to track the user’s steps.

Chapter 23, HealthKit: The HealthKit framework allows you to access much of the data stored in the user’s health store, including their heart rate! This chapter will walk you through incorporating HealthKit into your watch app, from managing authorization to recording a workout session.

Chapter 24, Core Location: A lot of apps are now location aware, but in order to provide this functionality you need access to the user’s location. Developers now have exactly that via the Core Location framework. Learn everything you need to know about using the framework on the watch in this chapter.

Chapter 25, Core Bluetooth: In watchOS 4, you can pair and interact with BLE devices directly. Learn how to send control instructions and other data directly over Bluetooth.

Chapter 26, Localization: Learn how to expand your reach and grow a truly international audience by localizing your watch app using the tools and APIs provided by Apple.

Chapter 27, Accessibility: You want as many people as possible to enjoy your watch app, right? Learn all about the assistive technologies available in watchOS, such as VoiceOver and Dynamic Type, so you can make your app just as enjoyable for those with disabilities as it is for those without.

One thing you can count on: after reading this book you’ll have all the experience necessary to build rich and engaging apps for the Apple Watch platform.

And to help sweeten the deal, the digital edition of the book is on sale for $49.99! But don’t wait — this sale price is only available for a limited time.

Speaking of sweet deals, be sure to check out the great prizes we’re giving away this year with the iOS 11 Launch Party, including over $9,000 in giveaways!

We hope you enjoy this update, and stay tuned for more book releases and updates!

The post Core Bluetooth in watchOS Tutorial appeared first on Ray Wenderlich.

Aaron Douglas was that kid taking apart the mechanical and electrical appliances at five years of age to see how they worked. He never grew out of that core interest – to know how things work. He took an early interest in computer programming, figuring out how to get past security to be able to play games on his dad’s computer. He’s still that feisty nerd, but at least now he gets paid to do it. Aaron works for Automattic (WordPress.com, Akismet, Simplenote) as a Mobile Maker primarily on the WordPress for iOS app. Find Aaron on Twitter as

Saul Mora is trained in the mystical and ancient arts of manual memory management, compiler macros and separate header files. Saul is a developer who honors his programming ancestors by using Optional variables in swift on all UIs created from Nib files. Despite being an Objective C neckbeard, Saul has embraced the Swift programming language. Currently, Saul resides in Shanghai, China working at 流利说 (Liulishuo) helping Chinese learn English while he is learning 普通话 (mandarin).

Matthew Morey is an engineer, author, hacker, creator and tinkerer. As an active member of the iOS community and Director of Mobile Engineering at MJD Interactive he has led numerous successful mobile projects worldwide. When not developing apps he enjoys traveling, snowboarding, and surfing. He blogs about technology and business at

Pietro Rea is a software engineer at Upside Travel in Washington D.C. Pietro’s work has been featured in Apple’s App Stores across several different categories: media, e-commerce, lifestyle and more. From Fortune 500 companies to venture-backed startups, Pietro has a passion for mobile software development done right. You can find Pietro on Twitter as

bela

Ehab Amer is a software developer in Cairo, Egypt. By day, he leads mobile development teams creating cool apps. In his spare time, he spends dozens of hours improving his imagination and finger reflexes playing computer games… or at the gym!
